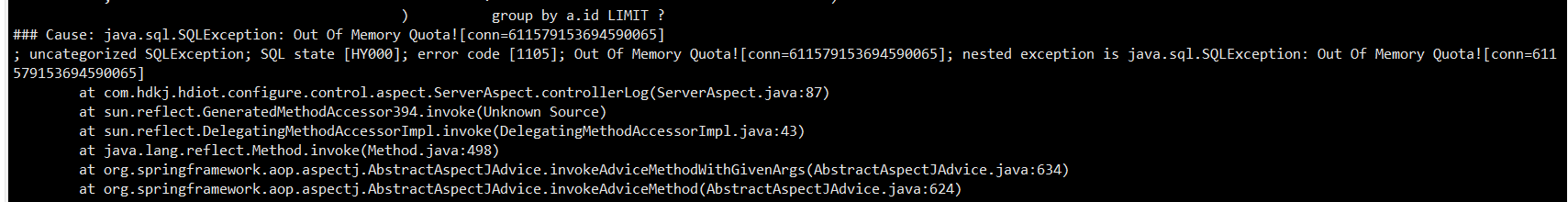

The conditions in your program should all be passed from the frontend, while the conditions you execute separately through the client are specified by you. Therefore, it is reasonable for the execution time and execution plan to be different. You should test whether the conditions passed from your frontend can filter out the most data, and whether the number in the LIMIT clause can be very large. For example, like this:

SELECT

a.id,

a.name,

a.sn,

a.code,

a.kind,

a.address,

a.longitude,

a.latitude,

a.factory,

a.category,

a.model,

a.created,

a.aid,

a.enable,

a.sort,

a.rid,

a.last_data_time,

a.status,

a.meter_no,

a.cust_no,

a.comm_no,

a.dev_type,

b.name AS areaName,

b.code AS areaCode,

d.NAME AS modelName,

c.NAME AS appName,

c.fetchFreq AS fetchFreq,

c.offlineDelay AS offlineDelay,

c.uploadFreq uploadFreq,

c.offline_cycle offlineCycle,

'device' AS TYPE

FROM

hd_device a

JOIN hd_device_area b

ON b.id = a.category

JOIN hd_area_relation r

ON r.des = b.id

LEFT JOIN hd_area_relation r1

ON r.anc = r1.des

JOIN hd_iot_app c

ON c.id = a.aid

JOIN hd_iot_model d

ON c.mid = d.id

LEFT JOIN hd_treenode_cate t

ON t.tid = a.id

LEFT JOIN hd_treenode n

ON n.id = t.nid

LEFT JOIN hd_tag g

ON g.tid = a.id

WHERE 1 = 1

GROUP BY a.id LIMIT 10000000000

Additionally, if you have configured prepstmt in the connection string of your program, all SQL statements will follow the same execution plan. You need to consider whether it is still appropriate to follow this execution plan when you choose the most general conditions.