Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Importing files into TiDB with Lightning takes too long. Is it a configuration problem? Are there any better suggestions?

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version] v7.1.0

[Reproduction Path] What operations were performed to encounter the problem

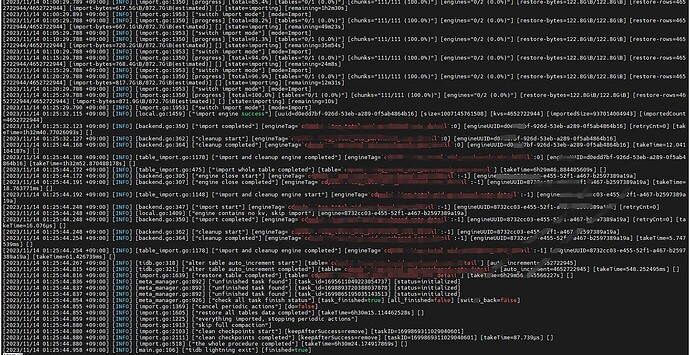

[Encountered Problem: Problem Phenomenon and Impact] Importing a file of about 128 GB from HDFS into TiDB took more than 6 hours. I would appreciate advice on where the bottleneck might be.
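To put the numbers above in perspective, here is a rough sketch of the average import rate they imply. It assumes that "128GB" means 128 GiB and that the run took exactly 6 hours; since the import actually took longer, the real rate is somewhat lower. The function name is only for illustration.

```python
def import_throughput_mib_s(size_gib: float, hours: float) -> float:
    """Average throughput in MiB/s for importing size_gib GiB in the given hours."""
    return size_gib * 1024 / (hours * 3600)


# Assumed inputs taken from the post: about 128 GiB in (at least) 6 hours.
rate = import_throughput_mib_s(128, 6)
print(f"{rate:.1f} MiB/s")  # prints "6.1 MiB/s"
```

An average of roughly 6 MiB/s is the figure to compare against the disk and network throughput shown in the monitoring screenshots.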

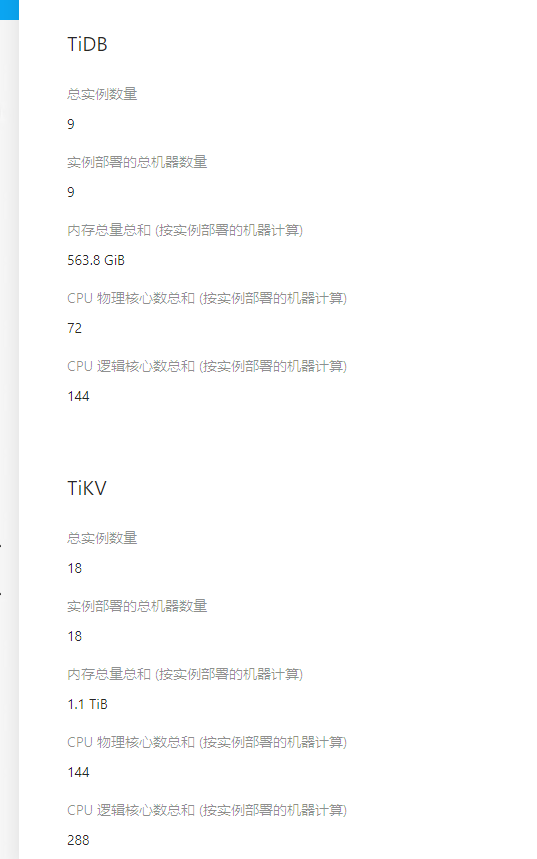

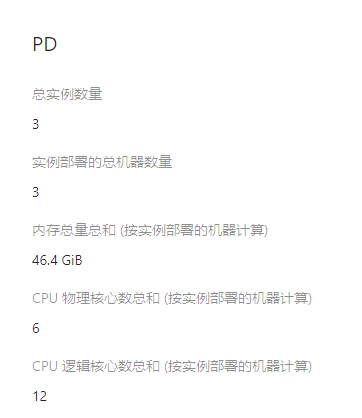

[Resource Configuration]

TiKV: 8 (16 vCore) 64 GB

PD: 2 (4 vCore) 16 GB

TiDB: 8 (16 vCore) 64 GB

[Attachments: Screenshots/Logs/Monitoring]

Configuration Parameters:

[lightning]

check-requirements = true

#index-concurrency = 4

#table-concurrency = 8

#region-concurrency = 32

level = "info"

file = "/home/hive/data/cdp_lightning_logs"

max-size = 256 # MB Log file size

max-days = 28

#io-concurrency = 5

max-error = 0

meta-schema-name = "lightning_metadata"

[tikv-importer]

backend = "local"

incremental-import = true

sorted-kv-dir = "/home/hive/data/cdp_lightning_kv"

#range-concurrency = 16

#send-kv-pairs = 98304 #32768

on-duplicate = "replace"

duplicate-resolution = "remove"

compress-kv-pairs = "gz"

[mydumper]

#read-block-size = "256MiB" # Default value

no-schema = true

# Value range is (0 <= batch-import-ratio < 1).

batch-import-ratio = 0.75

data-source-dir = "/home/hive/data/cdp_lightning_data"

character-set = "auto"

data-character-set = "binary"

data-invalid-char-replace = "\uFFFD"

strict-format = true

max-region-size = "256MiB" # Default value

[checkpoint]

enable = true

[post-restore]

checksum = "false"

analyze = "false"

[cron]

# TiDB Lightning Automatic

switch-mode = "5m"

# Print import progress in logs

log-progress = "5m"