Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: Is the etcd data on PD nodes stored in files, with strong consistency across nodes guaranteed by Raft?


【Attachments】


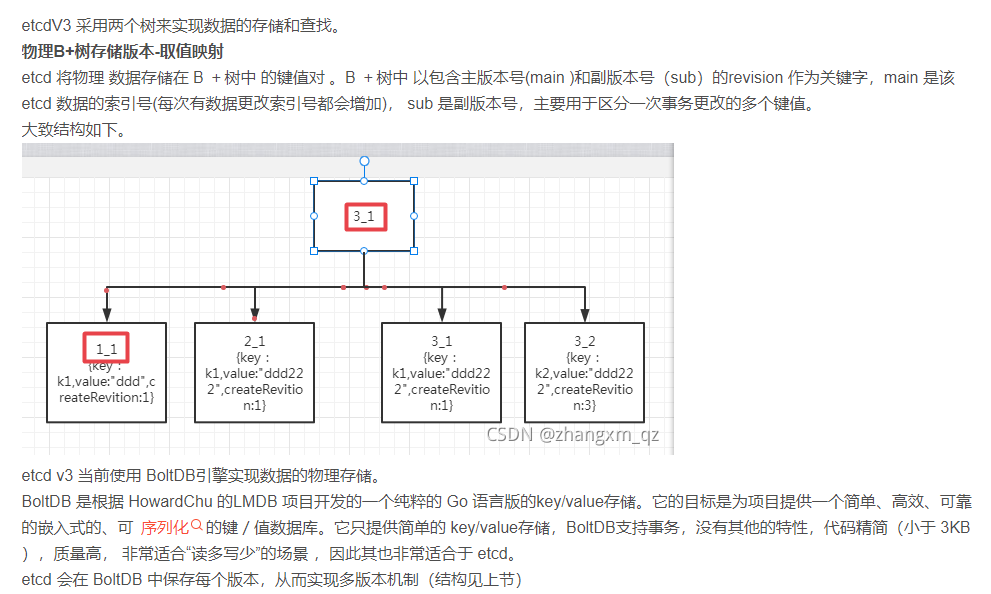

etcd storage mechanism

- etcd is a distributed key-value store originally developed by CoreOS on top of the Raft consensus algorithm. It is used for service discovery, shared configuration, and coordination tasks that need consistency guarantees, such as leader election and distributed locks.

- etcd is a distributed, highly available, strongly consistent key-value store written in Go.

- etcd uses the Raft protocol and guarantees strong consistency through log replication: the leader replicates each write to the other members, and the write is committed only after a majority of members have persisted it in their logs.

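- etcd persists every update to disk. Each change is first appended to the WAL (write-ahead log), which records the full history of changes; an entry must be written to the WAL before it is committed. Periodically, etcd also writes a snapshot that captures the entire data set at a point in time. The interval is set by `--snapshot-count` (10,000 entries in older releases; the default became 100,000 in etcd v3.2). Once a snapshot exists, WAL files older than it are no longer needed for recovery and can be purged. In etcd v3, the key-value data itself is stored in a bbolt database file (`member/snap/db` under the data directory), alongside the WAL files in `member/wal`.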

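- etcd tolerates failures as long as a majority of members survives. A cluster of n members needs a quorum of ⌊n/2⌋ + 1 members to commit writes, so it can lose ⌊(n-1)/2⌋ members and keep working; for an odd n, up to (n-1)/2 members can fail while the remaining (n+1)/2 still reach consensus. Losing a minority of members to machine failures, or having a minority cut off by a network partition, therefore does not lose committed data, and the majority side keeps serving requests.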
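The majority arithmetic above can be made concrete with a small Python sketch. It is not etcd or PD code: the function names are hypothetical, and `commit_index` is a simplified version of the Raft leader's rule for advancing its commit index (an entry counts as committed once a majority of members store it, and only entries from the leader's current term are counted directly).

```python
def quorum(n: int) -> int:
    """Smallest majority of an n-member cluster."""
    if n < 1:
        raise ValueError("cluster needs at least one member")
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """Members that can fail while a majority still survives."""
    return n - quorum(n)


def commit_index(match_index: list[int], log_terms: list[int],
                 current_term: int, commit: int) -> int:
    """Simplified Raft leader commit rule (hypothetical helper).

    match_index[i]: highest log index stored on member i (leader included).
    log_terms[k]: term of log entry k (index 0 is an unused placeholder).
    """
    n = len(match_index)
    # The quorum-th highest match index is stored on a majority of members.
    candidate = sorted(match_index, reverse=True)[quorum(n) - 1]
    # Only entries from the current term are committed by counting replicas
    # (Raft paper, section 5.4.2); older entries are committed indirectly.
    for idx in range(candidate, commit, -1):
        if log_terms[idx] == current_term:
            return idx
    return commit


for n in (3, 4, 5):
    print(n, quorum(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
```

The output also shows why clusters usually have an odd number of members: going from 3 to 4 members raises the quorum without tolerating any extra failure.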
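The WAL-then-snapshot flow described above can be sketched as a toy store in Python. This is a simplified illustration with assumed file names (`wal.log`, `snapshot.json`) and a JSON record format; etcd's real WAL and snapshot formats are different, and etcd v3 keeps the key-value data in bbolt. The hypothetical `snapshot_count` parameter only mimics the role of `--snapshot-count`.

```python
import json
import os


class ToyStore:
    """Toy KV store: write to the WAL first, then apply; snapshot every N entries."""

    def __init__(self, data_dir: str, snapshot_count: int = 10_000):
        self.wal_path = os.path.join(data_dir, "wal.log")
        self.snap_path = os.path.join(data_dir, "snapshot.json")
        self.snapshot_count = snapshot_count  # hypothetical stand-in for --snapshot-count
        self.data: dict[str, str] = {}
        self.entries_since_snap = 0
        self._recover()

    def _recover(self) -> None:
        # Start from the latest snapshot, then replay the WAL written after it.
        if os.path.exists(self.snap_path):
            with open(self.snap_path) as f:
                self.data = json.load(f)
        if os.path.exists(self.wal_path):
            with open(self.wal_path) as f:
                for line in f:
                    rec = json.loads(line)
                    self.data[rec["key"]] = rec["value"]
                    self.entries_since_snap += 1

    def put(self, key: str, value: str) -> None:
        # 1. Append the change to the WAL and fsync it before acknowledging.
        with open(self.wal_path, "a") as f:
            f.write(json.dumps({"key": key, "value": value}) + "\n")
            f.flush()
            os.fsync(f.fileno())
        # 2. Only then apply it to the in-memory state.
        self.data[key] = value
        self.entries_since_snap += 1
        if self.entries_since_snap >= self.snapshot_count:
            self._snapshot()

    def _snapshot(self) -> None:
        # Write the full state to a temp file and atomically swap it in.
        tmp = self.snap_path + ".tmp"
        with open(tmp, "w") as f:
            json.dump(self.data, f)
            f.flush()
            os.fsync(f.fileno())
        os.replace(tmp, self.snap_path)
        # The snapshot now covers every WAL entry, so the WAL can be truncated.
        open(self.wal_path, "w").close()
        self.entries_since_snap = 0
```

Because the snapshot is swapped in before the WAL is truncated, a crash between the two steps only leaves entries that get replayed twice, which is harmless here since each record simply overwrites a key.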

In distributed systems, managing the state shared between nodes has always been a challenge. etcd is designed with service discovery and registration in cluster environments in mind. It provides key expiration via TTL, watches on data changes, multi-value, directory watching, and atomic operations for building distributed locks, which makes it easy to track and manage the state of cluster nodes.
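The lock idea (TTL expiry plus an atomic compare operation) can be illustrated with a toy in-memory store. Everything here, including `LeaseKV`, `put_if_absent` and `delete_if_value`, is hypothetical and not etcd's API; in etcd v3, TTLs are provided by leases, atomic compare-and-set by transactions, and the Go client's `concurrency` package builds a mutex on top of them. Time is passed in explicitly so expiry is easy to follow.

```python
import threading


class LeaseKV:
    """Toy KV with TTL expiry and atomic put-if-absent (not etcd's API)."""

    def __init__(self) -> None:
        self._mu = threading.Lock()  # makes each operation atomic
        self._items: dict[str, tuple[str, float]] = {}  # key -> (value, expires_at)

    def _expire(self, now: float) -> None:
        # Drop every key whose TTL has run out.
        dead = [k for k, (_, exp) in self._items.items() if exp <= now]
        for k in dead:
            del self._items[k]

    def put_if_absent(self, key: str, value: str, ttl: float, now: float) -> bool:
        # Acquire: succeeds only if nobody currently holds the key.
        with self._mu:
            self._expire(now)
            if key in self._items:
                return False
            self._items[key] = (value, now + ttl)
            return True

    def delete_if_value(self, key: str, value: str, now: float) -> bool:
        # Release via compare-and-delete: only the current holder can unlock.
        with self._mu:
            self._expire(now)
            if self._items.get(key, (None, 0.0))[0] != value:
                return False
            del self._items[key]
            return True


kv = LeaseKV()
print(kv.put_if_absent("/lock", "node-a", ttl=10, now=0))   # True
print(kv.put_if_absent("/lock", "node-b", ttl=10, now=5))   # False: still held
print(kv.put_if_absent("/lock", "node-b", ttl=10, now=10))  # True: node-a's TTL expired
```

If the holder crashes and never releases the lock, its key expires after the TTL and another node can acquire it, which is why the TTL matters for liveness.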