Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: 因优化器问题导致TPCH的Q13语句参数调优后结果集错误问题

[TiDB Usage Environment] Test

[TiDB Version] 5.7.25-TiDB-v6.5.2

When testing the TPCH Q13 statement, it was found to be slow. The main reason is that under the default parameter configuration, hashAgg is not pushed down to join. Refer to: 系统变量 | PingCAP 文档中心

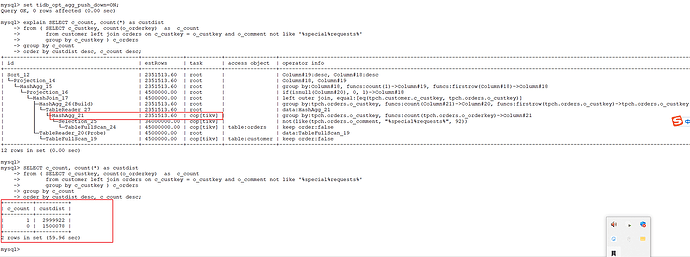

After setting tidb_opt_agg_push_down=ON, it was found that the result set had issues. The specific test process is as follows.

TPCH sf=30, the Q13 statement and execution plan are as follows:

SELECT c_count, count(*) as custdist

from ( SELECT c_custkey, count(o_orderkey) as c_count

from customer left join orders on c_custkey = o_custkey and o_comment not like '%special%requests%'

group by c_custkey ) c_orders

group by c_count

order by custdist desc, c_count desc;

+-------------------------------------+-------------+-----------+----------------+-------------------------------------------------------------------------------------+

| id | estRows | task | access object | operator info |

+-------------------------------------+-------------+-----------+----------------+-------------------------------------------------------------------------------------+

| Sort_10 | 4459520.00 | root | | Column#19:desc, Column#18:desc |

| └─Projection_12 | 4459520.00 | root | | Column#18, Column#19 |

| └─HashAgg_13 | 4459520.00 | root | | group by:Column#18, funcs:count(1)->Column#19, funcs:firstrow(Column#18)->Column#18 |

| └─HashAgg_14 | 4459520.00 | root | | group by:tpch.customer.c_custkey, funcs:count(tpch.orders.o_orderkey)->Column#18 |

| └─HashJoin_17 | 36326779.56 | root | | left outer join, equal:[eq(tpch.customer.c_custkey, tpch.orders.o_custkey)] |

| ├─TableReader_19(Build) | 4500000.00 | root | | data:TableFullScan_18 |

| │ └─TableFullScan_18 | 4500000.00 | cop[tikv] | table:customer | keep order:false |

| └─TableReader_22(Probe) | 36000000.00 | root | | data:Selection_21 |

| └─Selection_21 | 36000000.00 | cop[tikv] | | not(like(tpch.orders.o_comment, "%special%requests%", 92)) |

| └─TableFullScan_20 | 45000000.00 | cop[tikv] | table:orders | keep order:false |

+-------------------------------------+-------------+-----------+----------------+-------------------------------------------------------------------------------------+

10 rows in set (0.00 sec)

--SQL execution result is as follows:

+---------+----------+

| c_count | custdist |

+---------+----------+

| 0 | 1500078 |

| 10 | 199159 |

| 9 | 196056 |

| 11 | 187248 |

| 8 | 175435 |

| 12 | 168100 |

| 13 | 149095 |

| 7 | 140482 |

| 19 | 140168 |

| 18 | 139500 |

| 20 | 136896 |

| 14 | 135608 |

| 17 | 135290 |

| 16 | 130640 |

| 15 | 130288 |

| 21 | 127811 |

| 22 | 114514 |

| 6 | 98056 |

| 23 | 97466 |

| 24 | 80386 |

| 25 | 63250 |

| 5 | 58644 |

| 26 | 47643 |

| 27 | 35048 |

| 4 | 29380 |

| 28 | 24198 |

| 29 | 16366 |

| 3 | 11487 |

| 30 | 10775 |

| 31 | 6947 |

| 32 | 4152 |

| 2 | 3398 |

| 33 | 2529 |

| 34 | 1474 |

| 35 | 798 |

| 1 | 711 |

| 36 | 482 |

| 37 | 215 |

| 38 | 123 |

| 39 | 56 |

| 40 | 28 |

| 41 | 11 |

| 42 | 5 |

| 44 | 2 |

| 43 | 2 |

+---------+----------+

45 rows in set, 1 warning (1 min 24.36 sec)

It can be seen that the two tables use hash_join. Since c_custkey is the primary key, the aggregation operation count(o_orderkey)…group by o_custkey before the join can be pushed down to the storage layer for execution optimization. However, the premise is to set tidb_opt_agg_push_down=ON. After adjustment, it is as follows:

mysql> set tidb_opt_agg_push_down=ON;

Query OK, 0 rows affected (0.00 sec)

--Execution plan is as follows:

+------------------------------------------+-------------+-----------+----------------+---------------------------------------------------------------------------------------------------------------------------------+

| id | estRows | task | access object | operator info |

+------------------------------------------+-------------+-----------+----------------+---------------------------------------------------------------------------------------------------------------------------------+

| Sort_12 | 2351513.60 | root | | Column#19:desc, Column#18:desc |

| └─Projection_14 | 2351513.60 | root | | Column#18, Column#19 |

| └─HashAgg_15 | 2351513.60 | root | | group by:Column#18, funcs:count(1)->Column#19, funcs:firstrow(Column#18)->Column#18 |

| └─Projection_16 | 4500000.00 | root | | if(isnull(Column#20), 0, 1)->Column#18 |

| └─HashJoin_17 | 4500000.00 | root | | left outer join, equal:[eq(tpch.customer.c_custkey, tpch.orders.o_custkey)] |

| ├─HashAgg_26(Build) | 2351513.60 | root | | group by:tpch.orders.o_custkey, funcs:count(Column#21)->Column#20, funcs:firstrow(tpch.orders.o_custkey)->tpch.orders.o_custkey |

| │ └─TableReader_27 | 2351513.60 | root | | data:HashAgg_21 |

| │ └─HashAgg_21 | 2351513.60 | cop[tikv] | | group by:tpch.orders.o_custkey, funcs:count(tpch.orders.o_orderkey)->Column#21 |

| │ └─Selection_25 | 36000000.00 | cop[tikv] | | not(like(tpch.orders.o_comment, "%special%requests%", 92)) |

| │ └─TableFullScan_24 | 45000000.00 | cop[tikv] | table:orders | keep order:false |

| └─TableReader_20(Probe) | 4500000.00 | root | | data:TableFullScan_19 |

| └─TableFullScan_19 | 4500000.00 | cop[tikv] | table:customer | keep order:false |

+------------------------------------------+-------------+-----------+----------------+---------------------------------------------------------------------------------------------------------------------------------+

12 rows in set (0.00 sec)

It can be seen that the grouping and aggregation operation of the orders table has been pushed down to tikv for execution. However, checking the execution result reveals that there are issues with the result set:

May I ask where the defect causing the issue with the result set after enabling tidb_opt_agg_push_down=ON lies? Additionally, why is this parameter not automatically adjusted by the database, allowing the optimizer to choose whether to push down based on cost?