Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Placement Rules 配置不生效问题

【TiDB Environment】Production

【TiDB Version】v6.1.0

【Issue Encountered】Placement rules configuration not taking effect

【Reproduction Path】Operations performed that led to the issue

【Issue Phenomenon and Impact】

- When the cluster was first deployed with tiup, the region option for the tikv nodes was not configured. It was added later.

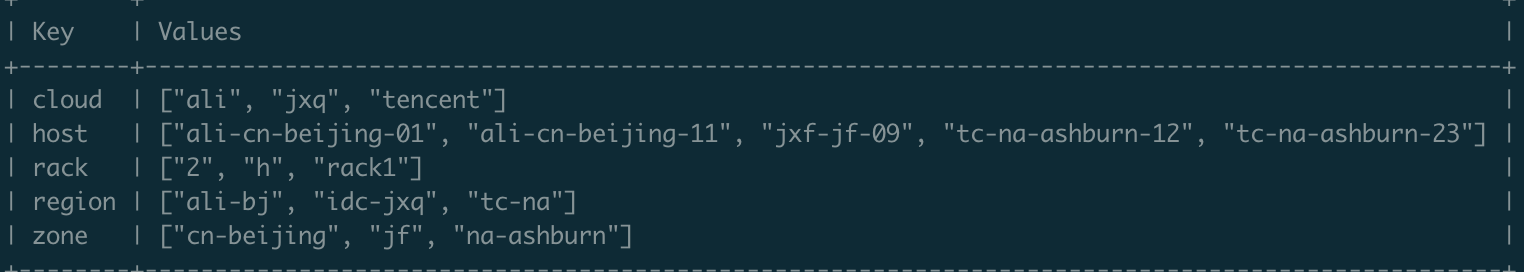

Current configuration:

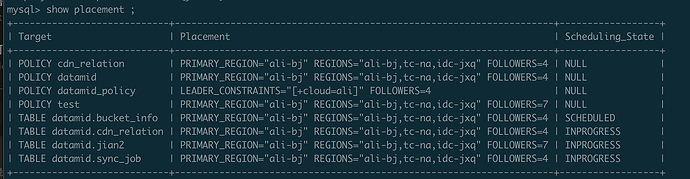

The datamid rule is applied to the bucket_info and cdn_relation tables:

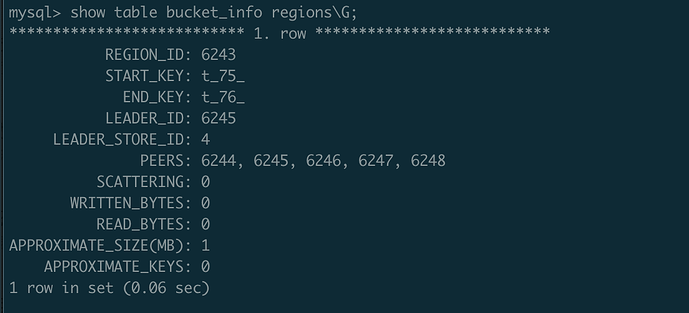

For the bucket_info table, the leader (LEADER_STORE_ID) has moved, although I am not sure whether it was scheduled by the rule or whether it is a side effect of my frequent configuration changes and tikv restarts. The number of PEERS is still 5.

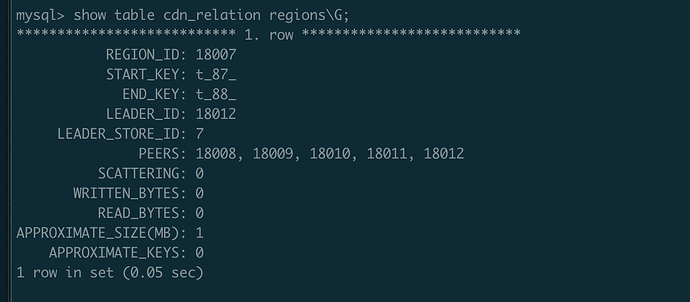

For the cdn_relation table, the LEADER_STORE_ID is still on tikv nodes in other regions.
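To make the two symptoms above easier to check across many regions, here is a minimal Python sketch. It assumes the region rows have already been fetched (for example from the table's region listing) into dictionaries with REGION_ID, LEADER_STORE_ID, and PEERS fields, where PEERS is a comma-separated string. The sample rows, the expected peer count, and the set of allowed leader stores are all hypothetical, because the actual datamid definition is only visible in the screenshot.

```python
def check_regions(rows, expected_peers, allowed_leader_stores):
    """Return (region_id, problem) pairs for regions that do not match expectations."""
    problems = []
    for row in rows:
        # PEERS is assumed to be a comma-separated string of peer IDs.
        peer_count = len([p for p in row["PEERS"].split(",") if p.strip()])
        if peer_count != expected_peers:
            problems.append(
                (row["REGION_ID"], f"{peer_count} peers, expected {expected_peers}")
            )
        if row["LEADER_STORE_ID"] not in allowed_leader_stores:
            problems.append(
                (row["REGION_ID"], f"leader on store {row['LEADER_STORE_ID']}")
            )
    return problems


# Hypothetical rows; real values would come from the cluster.
rows = [
    {"REGION_ID": 101, "LEADER_STORE_ID": 1, "PEERS": "11, 12, 13, 14, 15"},
    {"REGION_ID": 102, "LEADER_STORE_ID": 7, "PEERS": "21, 22, 23"},
]

# expected_peers and allowed_leader_stores are assumptions for illustration only.
problems = check_regions(rows, expected_peers=3, allowed_leader_stores={1, 2, 3})
for region_id, problem in problems:
    print(region_id, problem)
```

With these made-up rows, the first region is flagged for its peer count (the bucket_info symptom) and the second for its leader location (the cdn_relation symptom).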

This is the output of pd-ctl config show:

    {
      "replication": {
        "enable-placement-rules": "true",
        "enable-placement-rules-cache": "false",
        "isolation-level": "",
        "location-labels": "cloud,zone,rack,host",
        "max-replicas": 5,
        "strictly-match-label": "false"
      },
      "schedule": {
        "enable-cross-table-merge": "true",
        "enable-joint-consensus": "true",
        "high-space-ratio": 0.7,
        "hot-region-cache-hits-threshold": 3,
        "hot-region-schedule-limit": 4,
        "hot-regions-reserved-days": 7,
        "hot-regions-write-interval": "10m0s",
        "leader-schedule-limit": 4,
        "leader-schedule-policy": "count",
        "low-space-ratio": 0.8,
        "max-merge-region-keys": 200000,
        "max-merge-region-size": 20,
        "max-pending-peer-count": 64,
        "max-snapshot-count": 64,
        "max-store-down-time": "30m0s",
        "max-store-preparing-time": "48h0m0s",
        "merge-schedule-limit": 8,
        "patrol-region-interval": "10ms",
        "region-schedule-limit": 2048,
        "region-score-formula-version": "v2",
        "replica-schedule-limit": 64,
        "split-merge-interval": "1h0m0s",
        "tolerant-size-ratio": 0
      }
    }
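For anyone comparing this output against their own cluster, the following Python sketch pulls out the replication settings that relate to placement rules. It is only an illustration: the function name `summarize_replication` and the shortened sample string are mine, and the sample contains just three of the keys from the output above. The quote normalization is there because forum software tends to turn straight quotes into curly ones, which a JSON parser rejects.

```python
import json


def summarize_replication(config_text):
    """Extract placement-related replication settings from config show output."""
    # Replace typographic quotes that forum software may have substituted.
    normalized = config_text.replace("\u201c", '"').replace("\u201d", '"')
    replication = json.loads(normalized)["replication"]
    return {
        # The output above reports booleans as the strings "true"/"false".
        "placement_rules_enabled":
            str(replication["enable-placement-rules"]).lower() == "true",
        "max_replicas": int(replication["max-replicas"]),
        "location_labels": [
            label for label in str(replication["location-labels"]).split(",") if label
        ],
    }


# Shortened sample using values copied from the output above.
sample = """
{
  "replication": {
    "enable-placement-rules": "true",
    "location-labels": "cloud,zone,rack,host",
    "max-replicas": 5
  }
}
"""

summary = summarize_replication(sample)
print(summary)
```

One thing the summary makes visible is that max-replicas is 5, the same number as the PEERS count reported for bucket_info. Whether that means the table is still governed by the default replica setting rather than by datamid is an inference that this output alone does not confirm.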