Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: resource_control优先级的问题 (Question about resource_control priority)

[TiDB Usage Environment] PoC

[TiDB Version] 6.1.2

[Problem Encountered] The resource_control configured under global overrides the resource_control configured for each component under server_configs. Shouldn’t the component-level setting take precedence over the global one?

[Resource Configuration]

global:

user: hadoop

group: hadoop

ssh_port: 22

ssh_type: builtin

deploy_dir: /home/hadoop/apps/em-deploy

data_dir: /home/hadoop/apps/tidb-data

resource_control:

memory_limit: 112G

os: linux

arch: amd64

monitored:

node_exporter_port: 9100

blackbox_exporter_port: 9115

deploy_dir: /home/hadoop/apps/em-deploy/monitor-9100

data_dir: /home/hadoop/apps/tidb-data/monitor-9100

log_dir: /home/hadoop/apps/em-deploy/monitor-9100/log

server_configs:

tidb:

log.level: info

oom-use-tmp-storage: true

performance:

memory-usage-alarm-ratio: 0.8

txn-total-size-limit: 10737418240

resource_control:

memory_limit: 112G

tmp-storage-path: /home/hadoop/apps/tidb-data/tidb-temp

tmp-storage-quota: 26843545600

pd:

log.level: info

replication.enable-placement-rules: true

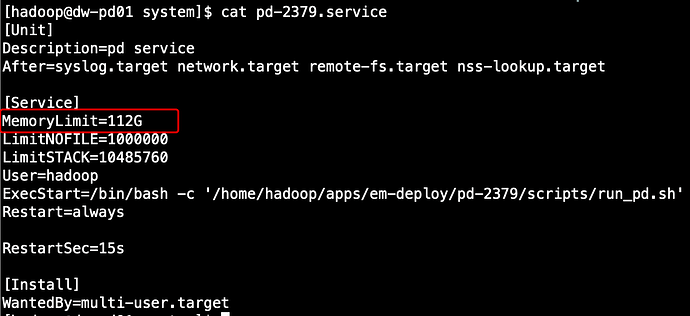

resource_control:

memory_limit: 12G

schedule.leader-schedule-limit: 4

schedule.region-schedule-limit: 2048

schedule.replica-schedule-limit: 64
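
For comparison, here is a minimal sketch of an instance-level override. It assumes the TiUP topology reference applies to this deployment: that reference lists resource_control as a field of global and of each instance entry (for example under pd_servers), where the instance value is merged over the global one and written to the systemd unit. Entries under server_configs, by contrast, are rendered into the component's own configuration file. The host address below is hypothetical and does not come from this cluster.

```yaml
global:
  resource_control:
    memory_limit: 112G       # default applied to every service's systemd unit

pd_servers:
  - host: 10.0.1.11          # hypothetical host, for illustration only
    resource_control:
      memory_limit: 12G      # instance-level value, expected to take precedence over global for this PD
```

If this assumption holds, the 12G limit would need to move from server_configs.pd to the pd_servers instance entries before it shows up in PD's systemd configuration.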

[PD’s systemd configuration]

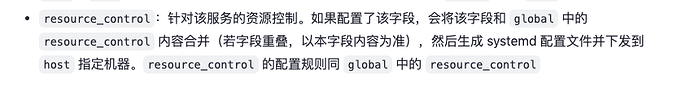

[TiDB documentation screenshot]