Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: PVC cannot be bound when deploying TiDB on k8s

[Version]

OS: 4.19.90-17.ky10.aarch64, k8s: v1.24.9, operator: 1.4.0, TiDB: 6.1.2

dyrnq/local-volume-provisioner:v2.5.0

3 nodes, one of which is master

[Configuration Information]

https://raw.githubusercontent.com/pingcap/tidb-operator/v1.4.0/examples/local-pv/local-volume-provisioner.yaml

Storage configured according to the above configuration file, see the attachment tidb_pv.yaml for details:

tidb_pv.yaml (5.1 KB)

[Problem]

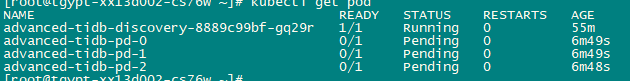

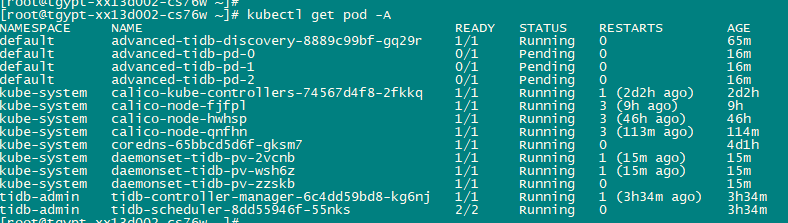

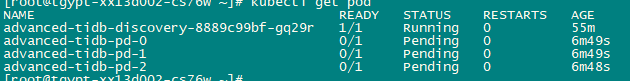

After applying the TiDB cluster configuration file, PD stays in the Pending state. The describe output is as follows (I also tried changing volumeBindingMode to Immediate along the way):

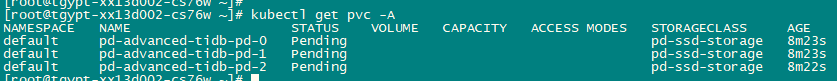

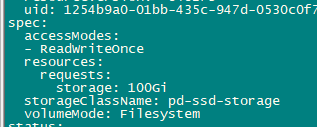

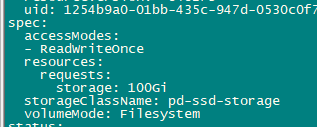

Checking the PVC shows that it is also in the Pending state.

The PVC describe output is as follows:

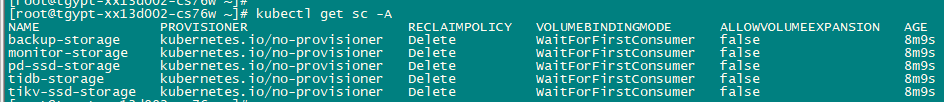

Checking the StorageClass shows that it exists.


How to handle the above issue?


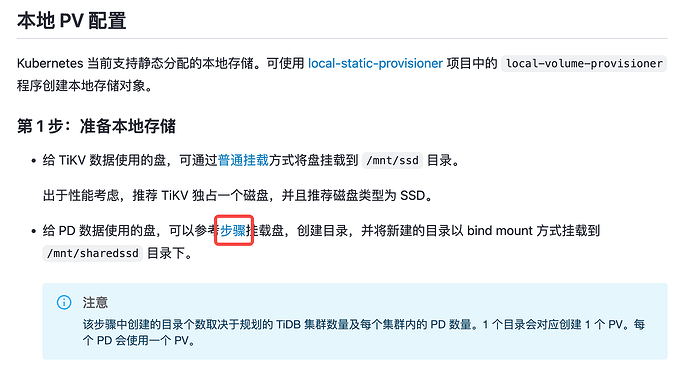

The official documentation describes it as follows: Persistent Storage Class Configuration on Kubernetes | PingCAP Docs

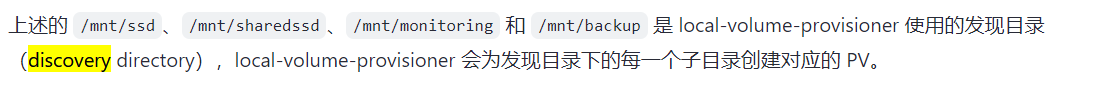

The directory configured in the example is used as the discovery directory, and a PV is created for each subdirectory, but there are no subdirectories here. How are the PVs supposed to be created?

Here it says to create a pv for each directory

This step involves k8s operations, so it is omitted in the documentation. You can refer to the k8s documentation here: Configure a Pod to Use a PersistentVolume for Storage | Kubernetes

Check the StorageClass-related logs to confirm the cause of the issue. If you are using local disks through local-static-provisioner, follow the steps in the linked documentation and mount the directories in advance.

@Lucien-卢西恩 Applying the TiDB cluster configuration will automatically create a PVC and PV, but currently, the PVC reports that it cannot find the storage class.

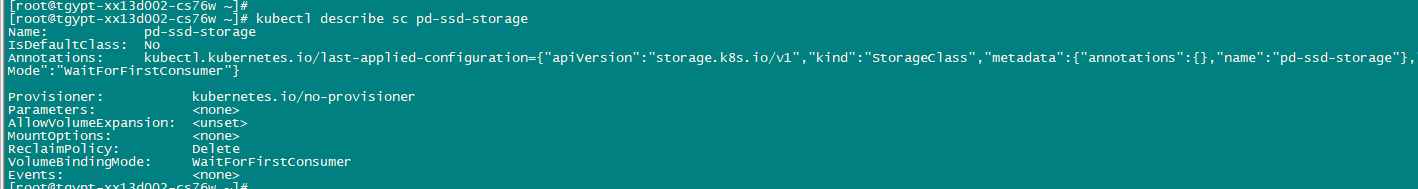

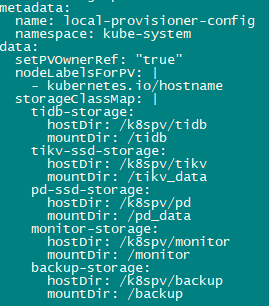

@yiduoyunQ When configuring, I referred to the official documentation and customized the directory name, PV name, etc. The SC information is as follows:

The daemonset log of localvolume can be found in the attachment:

sc.txt (9.9 KB)

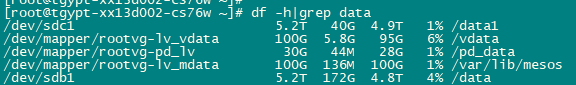

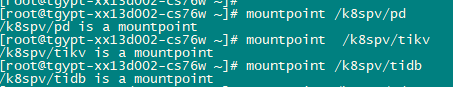

Run mount -l | grep pd_data to check if there are any mount points under the directory.

Where can I check this? The pd is in a pending state, so I can’t enter the pod to check the mount point, and the pv hasn’t succeeded yet.

Confirm the mount point on the node, refer to the official documentation.
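The check on the node can be sketched as a small shell function. This is only an illustration, not part of the official steps: list_mounts_under is a hypothetical helper name, and it assumes a Linux node where /proc/self/mounts lists the mount point in the second field. It prints every mount point located below the given directory, which is what the provisioner's discovery looks for under hostDir.

```shell
# list_mounts_under DIR [MOUNTS_FILE]
# Print every mount point strictly below DIR, one per line.
# MOUNTS_FILE defaults to /proc/self/mounts (hypothetical helper, Linux only).
list_mounts_under() {
  local dir="${1%/}"                        # drop a trailing slash, if any
  local mounts_file="${2:-/proc/self/mounts}"
  # Field 2 of each line is the mount point; keep those that start with "DIR/".
  awk -v d="$dir" 'index($2, d "/") == 1 { print $2 }' "$mounts_file"
}

# Example, run on the node itself (not inside the pending pod):
#   list_mounts_under /k8spv/pd
```

If the function prints nothing for the directory used as hostDir, there are no mount points for discovery to turn into PVs, which would match the symptom of PVCs staying Pending.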

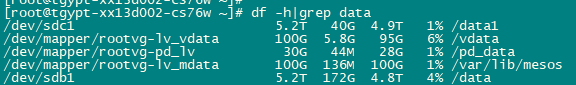

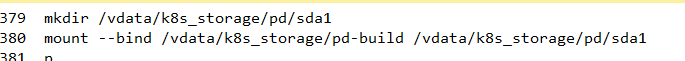

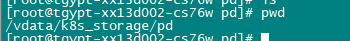

This step is to mount a specific independent disk for PD use. I am using the subdirectory k8s_storage/pd under the already mounted directory /vdata.

Does it have to be mounted with the --bind option?

Could you provide a screenshot of the output of kubectl get pv -A?

There is no PV yet, and the PVC is in the Pending state. For the run logs of the local volume DaemonSet pod, see the logs posted earlier.

The PV was not created, so you should find out why discovery did not create it.

You can check if there are any errors.

As mentioned above, follow the local-static-provisioner steps linked from the official documentation. If you mount 3 directories under the xxxx directory and then set mount path = xxxx, 3 available PVs will be generated on that node. The mounting method itself is not the point (just avoid problems such as mounting the same disk repeatedly; you can refer to “Sharing a disk filesystem by multiple filesystem PVs” in the official documentation link). What matters is that the mounts are located under the mount path.

It feels like there is an issue with the configuration file. The StorageClass exists but cannot be detected. If you have checked the configuration file and found no problems, you might want to try downgrading the version.

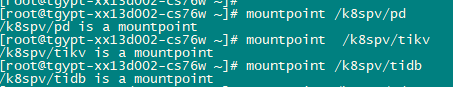

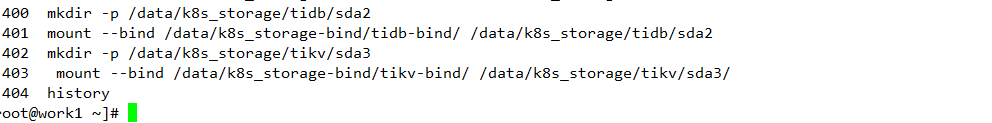

I still don’t quite understand. The sample in the official documentation mounts separate disks rather than per-component directories, and its hostPath and mountPath have the same value. I followed the shared-filesystem method and did several bind mounts, mounting the original directory /vdata/k8s_storage/pd to /k8spv/pd (mount --bind /vdata/k8s_storage/pd /k8spv/pd), but the PVC is still in the Pending state.

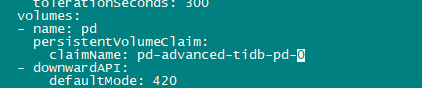

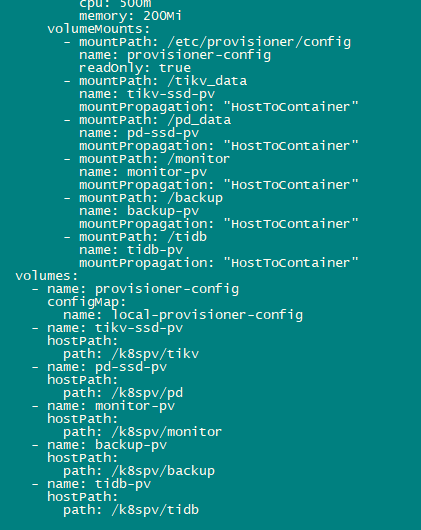

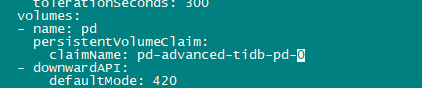

daemonset pod’s volume and mounts

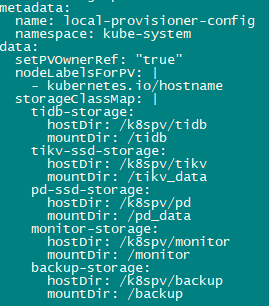

storageclass configmap

The above directory configurations should be correct: the path and hostdir of volumes are the mount points on the node, and the mountpath and mountDir are the mount points inside the pd pod.

- Discovery: The discovery routine periodically reads the configured discovery directories and looks for new mount points that don’t have a PV, and creates a PV for it.

It says to create a PV for the mount points under the discovery directory. Which layer is this discovery directory?

    pd-ssd-storage:
      hostDir: /k8spv/pd
      mountDir: /pd_data

Then create mount points in the /k8spv/pd/ directory, such as /k8spv/pd/vol1, /k8spv/pd/vol2, /k8spv/pd/vol3, so that a total of 3 available PVs are generated on this node.

Refer to the local-static-provisioner documentation for details: sig-storage-local-static-provisioner/docs/operations.md at master · kubernetes-sigs/sig-storage-local-static-provisioner · GitHub

You can confirm the currently available PVs with kubectl get pv. Normally, before deploying the TiDB cluster, you can see how many PVs are available after applying the local-static-provisioner.
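The layout described above can be sketched as a small shell helper. This is a minimal sketch under stated assumptions, not the official procedure: the source directory /vdata/k8s_storage/pd comes from earlier in this thread, the vol1 to vol3 names follow the example above, and run, make_bind_volumes, and the DRY_RUN variable are hypothetical names introduced only for illustration. Mounting for real requires root on the node.

```shell
# Run a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# make_bind_volumes SRC_ROOT HOST_DIR COUNT
# Create COUNT directories under SRC_ROOT and bind-mount each one onto
# a matching directory under HOST_DIR, so that HOST_DIR contains
# COUNT mount points for discovery to find.
make_bind_volumes() {
  local src_root="$1" host_dir="$2" count="$3" i
  for i in $(seq 1 "$count"); do
    run mkdir -p "$src_root/vol$i" "$host_dir/vol$i"
    run mount --bind "$src_root/vol$i" "$host_dir/vol$i"
  done
}

# Example: preview the commands first, then run them as root.
#   DRY_RUN=1 make_bind_volumes /vdata/k8s_storage/pd /k8spv/pd 3
#   make_bind_volumes /vdata/k8s_storage/pd /k8spv/pd 3
```

With DRY_RUN=1 the helper only prints the mkdir and mount commands so they can be reviewed before anything is changed. Note that the mount points are created inside /k8spv/pd rather than on /k8spv/pd itself. Bind mounts made this way do not survive a reboot unless matching entries are also added to /etc/fstab.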

Looking at the configuration example, the UUID is specified on the shared filesystem. Does local-static-provisioner have such a mandatory requirement that it must be in this format?

The key point is not the form of mounting, but the need to mount under the hostDir directory. You can first refer to the method in the official documentation to mount, and then after applying local-static-provisioner, you will see available PVs. If you don’t see the expected PVs, it means there is a configuration issue.

This afternoon, I used the tidb_pv.yaml you provided to create a test, and it worked. The issue was that it wasn’t mounted correctly. I manually mounted a directory using mount --bind.

Could it be an issue with my K8s? What version of the local volume provisioner are you using, and where is your bind path directed to?