Hey TiDB folks,

I’ve noticed that restore when running on K8s can be incredibly slow - often taking ~4 hours to restore a 1 GB DB.

Any idea why?

I’m following these directions and using this config:

# See:
# https://docs.pingcap.com/tidb-in-kubernetes/stable/restore-from-s3
apiVersion: pingcap.com/v1alpha1
kind: Restore
metadata:
  name: restore
  namespace: tidb-cluster
spec:
  backupType: full
  to:
    host: basic-tidb.tidb-cluster.svc.cluster.local
    port: 4000
    user: root
    secretName: tidb-secret
  s3:
    provider: aws
    region: us-west-2
    secretName: s3-secret
    path: s3://bagel-tidb/backup-2023-04-07T17:01:10Z.tgz
  storageSize: 10Gi

Details:

- The cluster is 3 nodes distributed in different regions, but all with gigabit internet.

- The table has about 40M rows, most of them very small (4 bytes).

- I’m using TiDB v6.5.0 and TiDB Operator v1.4.4.

Thanks!

TiDB Dashboard Diagnosis Report.html (645.3 KB)

Hi, can you share the log of lightning?

Thanks for the response!

This is the log I see in K8s - not sure if there’s anything else:

restore-restore-7bpt2.log (1.4 KB)

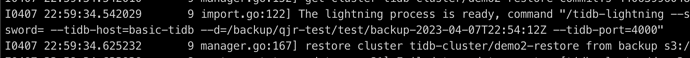

In the log, the last message is “The lightning process is ready”, and nothing is logged after that. Please also check the status of the Lightning pod: is it still running?

Yes, it has been running without errors for about 3 hours, and it looks like the DB is about 70% restored.

I’m not sure why there aren’t more logs.
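As a rough sanity check on the figures above (about 3 hours elapsed, about 70% restored), here is a minimal sketch that extrapolates the total run time. It assumes the restore progresses at a roughly constant rate, which may not hold in practice, and the helper name `estimate_total_hours` is purely illustrative.

```python
def estimate_total_hours(elapsed_hours: float, fraction_done: float) -> tuple[float, float]:
    """Linearly extrapolate (total_hours, remaining_hours) from progress so far.

    Assumes a constant restore rate, which is only an approximation.
    """
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    total = elapsed_hours / fraction_done
    return total, total - elapsed_hours


# Figures reported in this thread: ~3 hours elapsed, ~70% restored.
total_hours, remaining_hours = estimate_total_hours(3.0, 0.70)
print(f"estimated total: {total_hours:.2f} h, remaining: {remaining_hours:.2f} h")
```

Under that assumption the estimate comes out a little over 4 hours in total, which matches the ~4 hours reported at the top of the thread. That suggests the job is progressing slowly but steadily rather than hanging.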

I tested this in a local environment, and it seems to output only the Lightning start and end logs.

I think you can run Dumpling and Lightning directly as Kubernetes Jobs.

Dumpling example using AK/SK:

---
apiVersion: batch/v1
kind: Job
metadata:
  name: ${name}
  namespace: ${namespace}
  labels:
    app.kubernetes.io/component: dumpling
spec:
  template:
    spec:
      containers:
        - name: dumpling
          image: pingcap/dumpling:${version}
          command:
            - /bin/sh
            - -c
            - |
              /dumpling \
                  --host=basic-tidb \
                  --port=4000 \
                  --user=root \
                  --password='' \
                  --s3.region=us-west-2 \
                  --threads=16 \
                  --rows=20000 \
                  --filesize=256MiB \
                  --database=${db} \
                  --filetype=csv \
                  --output=s3://xxx
          env:
            - name: AWS_REGION
              value: ${AWS_REGION}
            - name: AWS_ACCESS_KEY_ID
              value: ${AWS_ACCESS_KEY_ID}
            - name: AWS_SECRET_ACCESS_KEY
              value: ${AWS_SECRET_ACCESS_KEY}
      restartPolicy: Never
  backoffLimit: 0

Lightning example using AK/SK:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}-sorted-kv
  namespace: ${namespace}
spec:
  storageClassName: ${storageClassName}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 250G

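---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ${name}
  namespace: ${namespace}
data:
  config-file: |
    [lightning]
    # Concurrency for data conversion. Defaults to the number of logical CPUs, so it normally does not need to be set.
    # In a mixed (co-located) deployment, you can set it to 75% of the logical CPUs.
    # region-concurrency =
    level = "info"

    [checkpoint]
    # Whether to enable checkpoints.
    # During import, TiDB Lightning records the import progress of the current table,
    # so even if TiDB Lightning or another component exits abnormally, data that has already been imported is not imported again after a restart.
    enable = true
    # Name of the database that stores the checkpoints.
    schema = "tidb_lightning_checkpoint"
    # How the checkpoints are stored.
    # - file: stored on the local file system.
    # - mysql: stored in a MySQL-compatible database server.
    driver = "mysql"

    [tidb]
    # Target cluster information. The listening address of tidb-server; one address is enough.
    host = "basic-tidb"
    port = 4000
    user = "root"
    password = ""
    # Table schema information is fetched from TiDB's "status port".
    status-port = 10080
    # Address of pd-server; one address is enough.
    pd-addr = "basic-pd:2379"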

---
apiVersion: batch/v1
kind: Job
metadata:
  name: ${name}
  namespace: ${namespace}
  labels:
    app.kubernetes.io/component: lightning
spec:
  template:
    spec:
      containers:
        - name: tidb-lightning
          image: pingcap/tidb-lightning:${version}
          command:
            - /bin/sh
            - -c
            - |
              /tidb-lightning \
                  --status-addr=0.0.0.0:8289 \
                  --backend=local \
                  --sorted-kv-dir=/var/lib/sorted-kv \
                  --d=${data_dir} \
                  --config=/etc/tidb-lightning/tidb-lightning.toml \
                  --log-file="-"
          env:
            - name: AWS_REGION
              value: ${AWS_REGION}
            - name: AWS_ACCESS_KEY_ID
              value: ${AWS_ACCESS_KEY_ID}
            - name: AWS_SECRET_ACCESS_KEY
              value: ${AWS_SECRET_ACCESS_KEY}
          volumeMounts:
            - name: config
              mountPath: /etc/tidb-lightning
            - name: sorted-kv
              mountPath: /var/lib/sorted-kv
      volumes:
        - name: config
          configMap:
            name: ${name}
            items:
              - key: config-file
                path: tidb-lightning.toml
        - name: sorted-kv
          persistentVolumeClaim:
            claimName: ${name}-sorted-kv
      restartPolicy: Never
  backoffLimit: 0

Thank you for those very detailed responses!

However, I got it to work by simply switching to BR.

It now runs in 3 minutes instead of 4 hours!

Maybe the docs should steer people to use BR and not dumpling / lightning?

Here’s my config:

# See:
# https://docs.pingcap.com/tidb-in-kubernetes/stable/backup-to-s3
apiVersion: pingcap.com/v1alpha1
kind: Restore
metadata:
  name: restore
  namespace: tidb-cluster
spec:
  cleanPolicy: Delete
  br:
    cluster: basic
    clusterNamespace: tidb-cluster
    sendCredToTikv: true
  s3:
    provider: aws
    region: us-west-2
    secretName: s3-secret
    bucket: bagel-tidb
    prefix: backup-full/basic-pd.tidb-cluster-2379-2023-04-08t01-10-00
    storageClass: INTELLIGENT_TIERING

You are right.

- We suggest using BR snapshot backup and restore.
- If a customer wants to back up and then perhaps restore only a specific table, or something like that, they can do this.

Some things you should know:

- Dumpling logical backup and Lightning import in the Operator automatically archive / unarchive the data by design, so they consume more:
  - archive / unarchive time and CPU
  - temporary disk space to persist the tgz file
- Lightning import in the Operator only supports logical import mode, which is much slower than physical import mode.
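To put the numbers from this thread in perspective, here is a minimal sketch of the throughput arithmetic. It assumes "1 GB" means 10^9 bytes and that both runs restored the same ~40M rows. The two backups were produced by different tools, so this is only an approximation, and the `throughput` helper is illustrative.

```python
def throughput(total_bytes: int, total_rows: int, seconds: float) -> tuple[float, float]:
    """Return (bytes_per_second, rows_per_second) for a restore run."""
    return total_bytes / seconds, total_rows / seconds


DB_BYTES = 1_000_000_000       # "1 GB" from the original post, assumed decimal
DB_ROWS = 40_000_000           # "about 40M rows"
LIGHTNING_SECONDS = 4 * 3600   # ~4 hours via the Operator's Lightning restore
BR_SECONDS = 3 * 60            # ~3 minutes via BR

lightning_bps, lightning_rps = throughput(DB_BYTES, DB_ROWS, LIGHTNING_SECONDS)
br_bps, br_rps = throughput(DB_BYTES, DB_ROWS, BR_SECONDS)
speedup = LIGHTNING_SECONDS / BR_SECONDS

print(f"Lightning: {lightning_bps / 1e3:.1f} KB/s, {lightning_rps:.0f} rows/s")
print(f"BR:        {br_bps / 1e6:.2f} MB/s, {br_rps:.0f} rows/s")
print(f"Speedup:   {speedup:.0f}x")
```

Under these assumptions the logical restore moved only about 69 KB/s (roughly 2,800 rows/s), against about 5.6 MB/s for BR, an 80x difference. That is far below what a gigabit link can carry, which fits the explanation above that the cost lies in the logical import path and the archive handling rather than in network bandwidth.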