Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tikv在scan最新插入的数据的时候性能低。

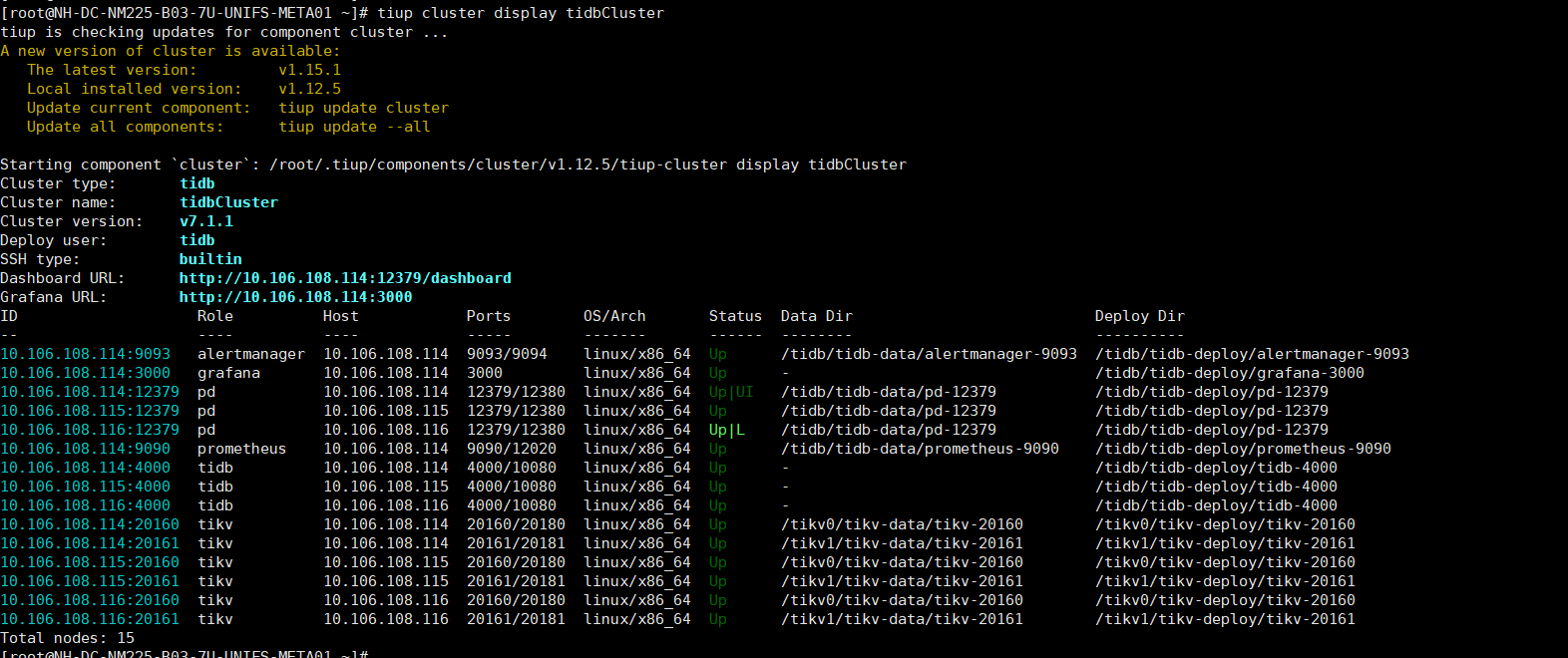

[TiDB Usage Environment] Production Environment

[TiDB Version]

Starting component cluster: /root/.tiup/components/cluster/v1.12.5/tiup-cluster display tidbCluster

Cluster type: tidb

Cluster name: tidbCluster

Cluster version: v7.1.1

[Reproduction Path] TiKV is used as the metadata database for JuiceFS. After integration and mounting, I created three folders: test20w, test20w2, and test20w3. Then, I created 200,000 files in each of the three folders and performed a txn scan operation on TiKV to scan the files in the three folders. I found that the folder where the last 200,000 files were created took the longest time. If another folder subsequently executes the command to create a large number of files, the scan command for the previous folder will run normally.

[Encountered Problem: Problem Phenomenon and Impact]

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots/Logs/Monitoring]

Starting component cluster: /root/.tiup/components/cluster/v1.12.5/tiup-cluster display tidbCluster

Cluster type: tidb

Cluster name: tidbCluster

Cluster version: v7.1.1

It’s going to be a bit difficult with a mixed cluster of just three machines…

Are the configurations of the three physical machines high? Is the disk IO sufficient?

I estimate that it may not necessarily be related to mixed deployment. We are not familiar with the internal implementation of TiKV, we just use it and found this performance issue. It’s quite strange.

The information provided is still too little.

First, check in Grafana → fast tune to see if you can quickly find some valuable information.

This is the manual.

- Deploying so many nodes on three machines will almost inevitably lead to resource contention issues. Please check the resource usage of the machines when problems occur.

- Confirm the cluster heatmap situation, which can be viewed on the Dashboard panel.

- For slow access statements, focus on analyzing slow query situations.

Investigate the cluster access logs and monitoring charts, and confirm each of the above directions one by one.

It should not be a resource issue, as this cluster is not busy. Can you reproduce the issue on your end?

This is the reference for JuiceFS. After successfully mounting, create files in several directories of JuiceFS.

#! /bin/bash

for i in $(seq 0 200000)

do

touch test$i

done

Then perform a search operation on each directory:

time ls -l /mnt/unifs-h001/dros/test/ | grep test11111

It was found that the directory where the files were created last is the slowest.

I am not a storage R&D personnel, but an upper-level business personnel, and I just discovered this issue. The company also does not have proper storage R&D.

I remember juicefs uses TiKV raw KV. Deploying a cluster like this may have issues.

There probably aren’t many people using juicefs in the TiDB community. Not many people might be able to help with your usage.

Cluster resource consumption is not high.