Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: raft store cpu 利用率低,tikv写入速度慢

[TiDB Usage Environment] Test Environment

[TiDB Version] v6.5.0

[Reproduction Path]

DataX executes a job to collect Oracle table data into TiDB. The table has 1 million rows, with an average insertion speed of 1024 rows per second.

[Encountered Problem: Problem Phenomenon and Impact]

During the job, the raft store CPU remains below 8% for a long time, indicating low utilization. Is this the reason for the slow TiKV write speed? Seeking help π_π

[Resource Configuration]

4 virtual machines, each with 7-core CPU and 16GB memory. 3 KV, 1 PD, 1 DB

[Attachments: Screenshots/Logs/Monitoring]

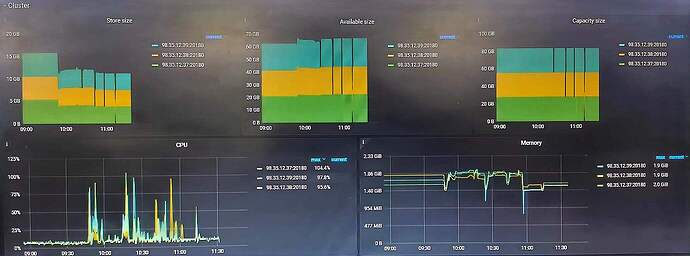

TiKV-Details Cluster:

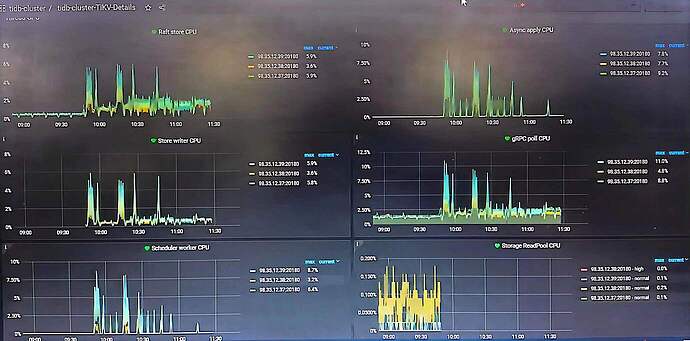

TiKV-Details Thread CPU:

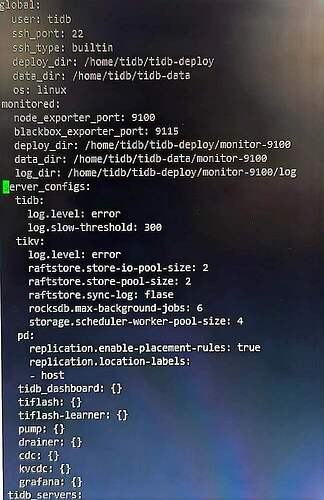

server_configs configuration:

DataX JDBC link, all necessary configurations are added:

jdbc:mysql://127.0.0.1:4000/tidb_test?allowMultiQueries=true&rewriteBatchedStatements=true&useConfigs=maxPerformance&useServerPrepStmts=true&useUnicode=true&characterEncoding=utf8&serverTimezone=GMT

No write hotspots, and the traffic visualization view does not show skewed yellow lines:

SQL statement added SHARD_ROW_ID_BITS = 4 PRE_SPLIT_REGIONS=3

Your TiDB cluster seems to be under very little pressure, right? What’s the tp99 for SQL? How many connections are there?

The number of connections has been 3 for a long time.

Try increasing the number of connections. You can’t expect TiDB’s latency to be as low as MySQL’s. If the latency is high and you have few connections, the QPS will be low.

I did not limit the number of connections to TiDB.

show global variables like ‘max_connections’

The value is 0, which means the number of connections is not limited by default.

What I meant was to increase the number of connections on your end, essentially increasing concurrency.

If you want to improve speed, there are two approaches:

- Increase the QPS (Queries Per Second) of a single connection. This requires optimizing TiDB to reduce its latency. However, considering your cluster doesn’t seem to be under much pressure, this approach might not yield significant benefits.

- Increase the number of connections. It’s like moving goods from a truck to a warehouse. If the route is long and a single trip takes a while, one worker might take a minute per trip, limiting the overall speed of moving goods. If you hire more workers, wouldn’t it be faster?

My Greenplum cluster and TiDB cluster are both deployed on those four virtual machines, and only one cluster is activated during testing. Using DataX to collect a table with millions of rows from Oracle to GP or TiDB, the average insertion speed for GP is 15,000 rows per second, while for TiDB it is 1,024 rows per second. I have followed the tuning methods from online sources or documentation to resolve write hotspots and added some configurations in server_configs (as shown in the picture in the post), but no matter how I optimize, the write speed of TiDB remains at 1,024 rows per second.

I have been monitoring with Grafana and noticed that my monitoring data is very different from the screenshots online. Their Raft store CPU peaks are mostly between 60%-80%, while mine has never exceeded 8%. I always feel that TiKV is not effectively utilizing resources, leading to disappointing insertion speeds.

3 connections, write speed of 1024 entries per second, each connection handles 341 entries per second.

Converted to latency, it’s 1000/341=2.9 milliseconds.

That’s TiDB for you  (Too many network interactions, so the latency is relatively high. It’s suitable for high concurrency business.)

(Too many network interactions, so the latency is relatively high. It’s suitable for high concurrency business.)

Increase the number of connections. Let 6 connections do the work and see if it reaches 2048.

I’ll give it a try, thank you.

Bro, does this mean that I increased the connections and improved the concurrency? At the same time, I collected data from multiple tables into TiDB, and overall the write speed improved, but the write speed for a single table with a single connection is still very slow.

That’s the idea.

Or, you can split a single table into segments and process them with multiple threads.

DataX can run concurrently. Try enabling some concurrency.

I followed the official DataX documentation to enable parallelism and also tried increasing optimization parameters, such as increasing the channel and batchSize. I also tried changing the version of the mysql-connect jar package for mysqlwriter, testing both version 5.0 and 8.0. However, the write speed to TiDB is still 1024-1228 rows per second, with no improvement at all. Sigh.

Check the DataX log. Is the size of each record very large?

Writing to TiDB: 334 KB/s 1075 rows/s

Take a look at the TiDB monitoring in Grafana.

Check the connection count, CPU, QPS, TP99, etc.

You should test the network between TiDB and Oracle, there might be some issues. Mine spans multiple network segments, yet the speed is almost the same as yours.

tidb → query summary → check the monitoring of connection idle time