Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: 6.1.0 内存悲观锁不丢失 (6.1.0: in-memory pessimistic lock is not lost)

[Version] 6.1.0 arm

[Steps]

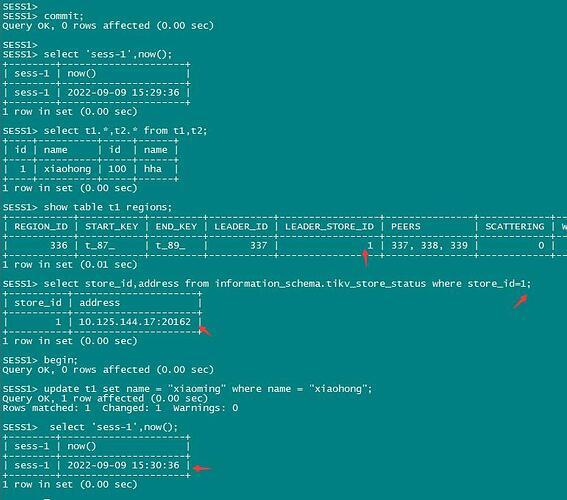

create table t1 (id int primary key, name varchar(20));

insert into t1 values(1, "xiaohong");

create table t2 (id int primary key, name varchar(20));

insert into t2 values(100, "hha");

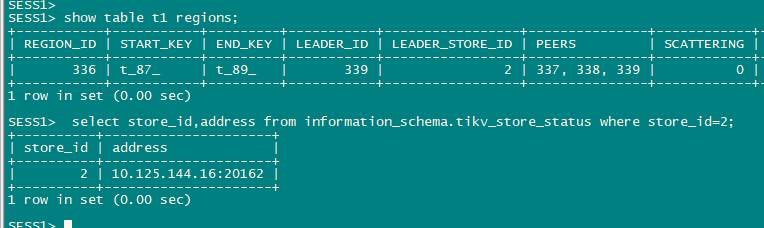

Find the store that hosts the Region leader for table t1's data; assume it is tikv1.
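A minimal sketch of one way to locate that leader from a SQL client, assuming the `SHOW TABLE ... REGIONS` statement and the `information_schema.tikv_store_status` table are available in this version. Mapping the store ID to the instance called tikv1 depends on the deployment:

```sql
-- List the Regions that hold t1's data. The LEADER_STORE_ID column
-- gives the store that currently hosts each Region leader.
show table t1 regions;

-- Map store IDs to TiKV addresses to decide which instance to kill.
select store_id, address from information_schema.tikv_store_status;
```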

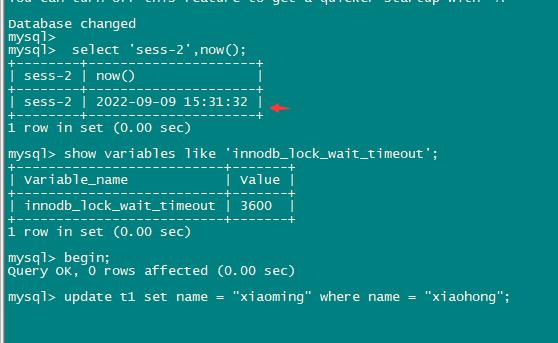

tx1: begin;

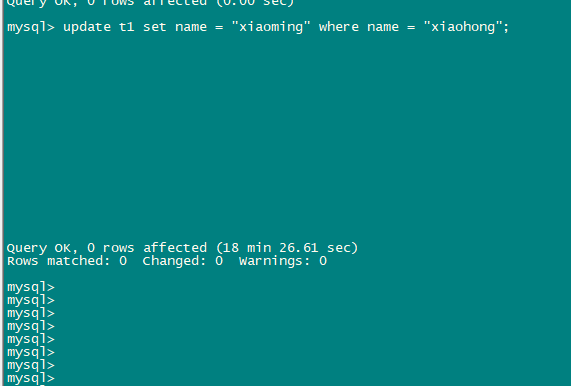

tx1: update t1 set name = "xiaoming" where name = "xiaohong"; tx1 now holds the in-memory pessimistic lock.

tx2: session 2 executes update t1 set name = "xiaohua" where name = "xiaohong"; it is blocked.

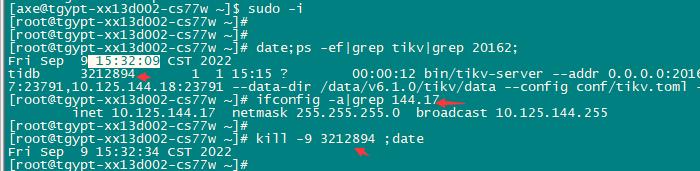

Kill tikv1 so that tx1's in-memory lock is lost.

After a while, session 2's update executes successfully.

tx3: session 3 executes update t2 set name = "xxxx" where name = "hha"; it commits successfully.

tx1: execute update t2 set name = "yyy"; this refreshes tx1's for_update_ts so that it is greater than tx2's commit_ts.

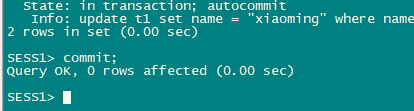

tx1 executes commit; check whether the commit succeeds.
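The transaction steps above can be read as the following per-session timeline. It only restates the statements from the steps, assuming every session runs in pessimistic transaction mode. The comments describe the expected behavior of the test, not observed output:

```sql
-- session 1 (tx1)
begin;
update t1 set name = "xiaoming" where name = "xiaohong";  -- locks the row with id = 1

-- session 2 (tx2): blocks on the row locked by tx1
update t1 set name = "xiaohua" where name = "xiaohong";

-- (kill tikv1 here; session 2 is expected to proceed after a while)

-- session 3 (tx3): autocommit statement on a different table
update t2 set name = "xxxx" where name = "hha";

-- session 1 (tx1): a further statement refreshes for_update_ts
update t2 set name = "yyy";
commit;  -- the outcome under test
```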

[Result]

- Session 1 updates t1 and finds the leader.

- Session 2 updates the same row and is blocked.

- Kill tikv on the leader.

After killing the leader tikv, the in-memory lock is not lost, and session 2 remains blocked.

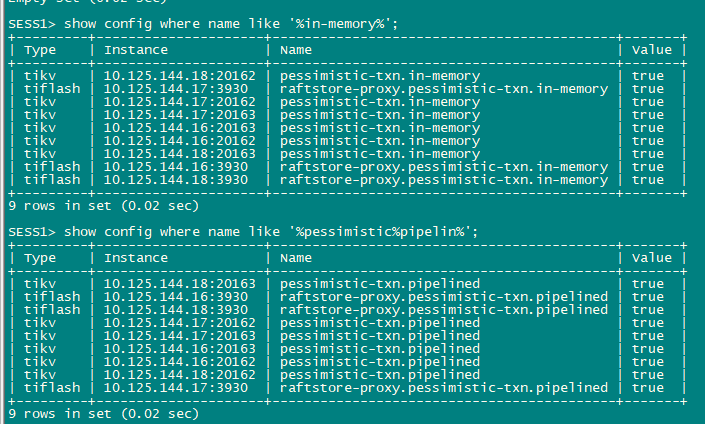

Parameter configuration:

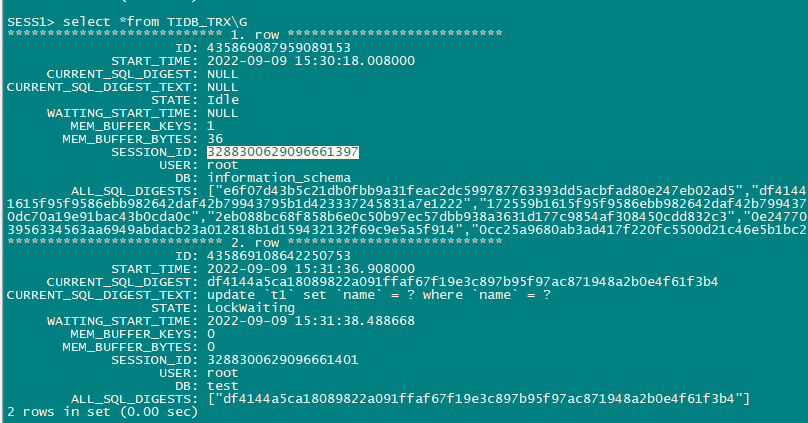

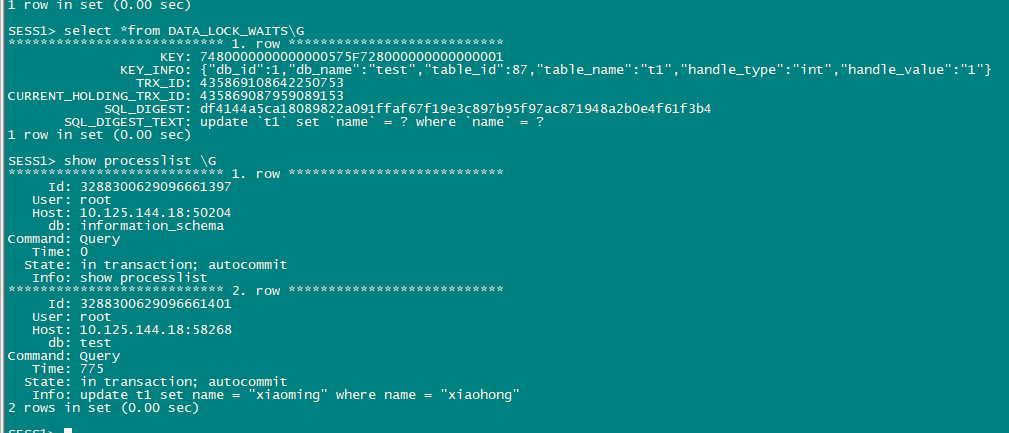
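For a text view of this kind of information, the TiKV pessimistic transaction settings can be inspected from SQL with `SHOW CONFIG`. This is a sketch only: which items the screenshots showed is an assumption, with `pessimistic-txn.in-memory` and `pessimistic-txn.pipelined` being the likely candidates.

```sql
-- Show the pessimistic transaction settings of every TiKV instance.
show config where type = 'tikv' and name like 'pessimistic-txn%';
```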

System view:

- After session 1 commits, session 2 executes successfully.

The leader is now store 2.

[Questions]

- The in-memory pessimistic lock is not released or lost after killing the leader tikv node (it seems to have been synchronized and switched to store 2), and the blocked session remains blocked, which does not meet expectations (I do not believe I killed the wrong leader).

- Is there a more intuitive way to see the current lock holder and related information?