Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

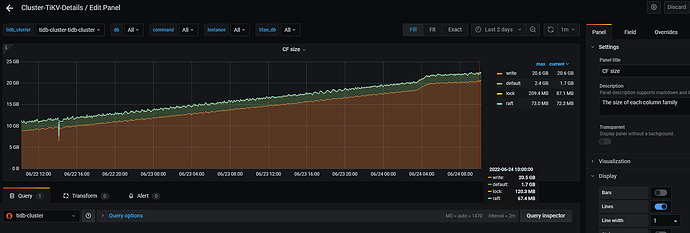

Original topic: CF size write keeps taking up more and more memory

[TiDB Usage Environment]

V5.4

[Overview] A computing program runs periodically and processes data continuously, and more and more of the system memory's buffer space is being used. Monitoring shows that the write CF in the CF size panel keeps growing.

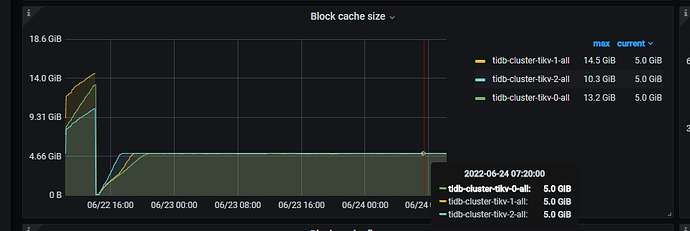

[Background] `storage.block-cache.capacity` is limited to 5 GB on each of the three TiKV nodes. The configuration file was modified and TiKV was restarted.

[Phenomenon] CF size continues to rise.

CF size measures disk usage, not memory usage. As you write more data into TiDB, both the default CF and the write CF grow accordingly.

Both the default CF and the write CF occupy disk space, not memory, right?

In memory, there are the block cache and the memtables of each CF. Refer to the following document:

CF stands for column family in RocksDB; on disk, a CF's data is stored in SST files.

So, without changing the memtable size or the maximum number of memtables, the memory usage of a single TiKV node should be roughly the block cache capacity plus 2.5 GB, which in my environment comes to about 7.5 GB per TiKV. However, I actually see a single TiKV using 8 GB or even more. Besides these two parts, are there other modules that consume a lot of memory?
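The estimate in the question above is simple arithmetic, sketched below. The 2.5 GB memtable figure is the poster's own estimate, not a value read from TiKV's configuration, and the function name is hypothetical. The point is only to make the unexplained gap explicit.

```python
# Rough per-node TiKV memory estimate, following the reasoning in the post.
# All figures are in GB. MEMTABLE_BUDGET_GB is the poster's estimate for
# memtables with default settings; it is an assumption, not a TiKV default.

BLOCK_CACHE_GB = 5.0       # block cache capacity configured in this deployment
MEMTABLE_BUDGET_GB = 2.5   # poster's estimate for memtable memory
OBSERVED_GB = 8.0          # lower bound of the memory usage actually observed


def expected_tikv_memory_gb(block_cache_gb: float, memtable_gb: float) -> float:
    """Hypothetical helper: block cache + memtables, ignoring everything else."""
    return block_cache_gb + memtable_gb


expected = expected_tikv_memory_gb(BLOCK_CACHE_GB, MEMTABLE_BUDGET_GB)
unexplained = OBSERVED_GB - expected
print(f"expected ~{expected} GB, observed >= {OBSERVED_GB} GB, "
      f"unexplained >= {unexplained} GB")
```

Anything in the "unexplained" bucket belongs to memory that this two-term estimate does not model.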

When TiKV returns data to the TiDB server, does it send everything at once after processing completes, or does it return results incrementally and asynchronously?

You are probably referring to a large result set, such as `select * from xxx` returning tens of thousands of rows. Such results are returned in batches.

Writing and sending happen at the same time. The coprocessor keeps writing results into memory while gRPC reads them from memory and sends them to the TiDB instance. If the coprocessor writes faster than gRPC can send, the data accumulates in memory.
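The accumulation described above is a classic producer/consumer imbalance. The toy model below is an illustration under stated assumptions, not TiKV's actual implementation: units are abstract bytes per tick, and `simulate_buffer` is a hypothetical name. The producer stands in for the coprocessor and the sender stands in for gRPC.

```python
def simulate_buffer(produce_per_tick: int, send_per_tick: int, ticks: int) -> list[int]:
    """Return the number of buffered bytes after each tick.

    Each tick, the producer (standing in for the coprocessor) appends
    produce_per_tick bytes, then the sender (standing in for gRPC) drains
    up to send_per_tick bytes. The buffer never goes negative.
    """
    buffered = 0
    history = []
    for _ in range(ticks):
        buffered += produce_per_tick
        buffered -= min(buffered, send_per_tick)
        history.append(buffered)
    return history


# Producer faster than sender: the buffer grows by 20 bytes every tick.
print(simulate_buffer(produce_per_tick=100, send_per_tick=80, ticks=5))
# Sender keeps up: nothing accumulates.
print(simulate_buffer(produce_per_tick=80, send_per_tick=100, ticks=5))
```

The first call prints `[20, 40, 60, 80, 100]` and the second prints `[0, 0, 0, 0, 0]`: memory grows linearly with the rate difference for as long as the imbalance lasts.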
