Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: minio集群删数据慢

Is everyone using MinIO to back up their TiDB cluster data? I have a question.

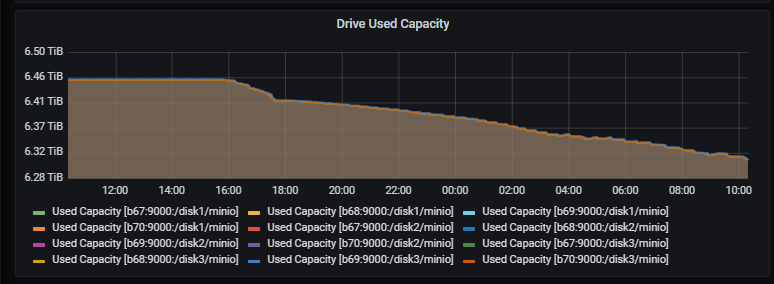

My MinIO cluster consists of 8TB * 3 disks * 4 nodes, and I use BR to back up the TiDB data. There were no issues during the backup, but deleting the data is extremely slow, and the disk IO utilization is at 100% during the deletion process. Is this a problem, or is it naturally this slow?

It took about 14 hours overnight to delete less than 1TB of data, which is too slow.

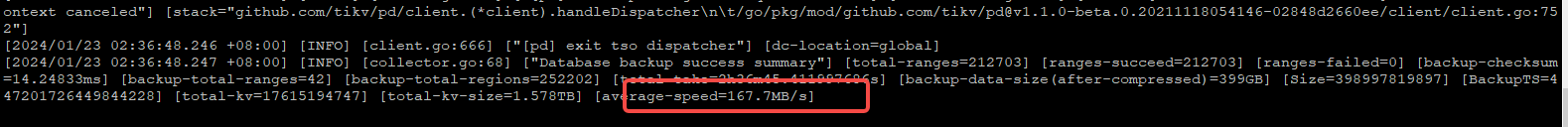

However, the backup speed seems quite fast, reaching up to 167MB/s according to the backup logs. Why is the deletion so slow?

I want to ask if this is normal? Is it a problem, or is it supposed to be like this?

My MinIO version is 2023-03-20T20-16-18Z (I initially thought it was a version issue, but I recently upgraded to address the information leakage vulnerability). The previous version was also this slow.

Try using rm on the directory and see how long it takes.

How did you delete it? Using rm? Is there disk I/O monitoring?

Your drive is a mechanical drive, which is normal.

Deleting with mc rm is slower than deleting through the console.

You can’t just delete data files directly. What if something goes wrong?

You need to quickly switch to an SSD. Minio is object storage. That’s how it is.

I still feel it’s a disk performance issue. I have a single SSD disk with 8.5T of df space, and it’s not this slow.

Yes, it’s a problem with the disk.

The main issue is not slow writing, but slow deletion.

That might still be a limitation of the file system, similar to how deleting files in a large directory on Linux can be very slow, and even running ls can be slow.

There are too many fragmented files.

Test your disk. Some disks are particularly slow with small data blocks. Check if the difference is significant.

time dd if=/dev/zero of=test bs=8k count=10000 oflag=direct

time dd if=/dev/zero of=test bs=8M count=1000 oflag=direct

Minio’s performance in handling fragmentation has never been good, and now the protocol has been changed to AGPL…

What does it mean? What does changing the protocol indicate?

Are these data files in use? Or should we directly go to the machine and delete the temporary files to check if it’s a disk issue?

There are services using it and data backed up, so I don’t dare to randomly delete data files.

The main issue is that writing data is quite fast, but deleting it is slow. Since writing is fast, it should rule out hard drive issues.

I still feel it’s a file system-level problem. Deleting operations under large directories consume a lot of IO, causing the slowness.

It’s not necessarily a disk issue; sometimes having too many processes can also overwhelm the I/O, leading to slow deletions.

Why not just delete the data directly? After using drop to delete the data, the space will be automatically released after exceeding the GC time.

How do you drop it? I don’t quite understand, I’m using MinIO.