Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: ON DUPLICATE KEY UPDATE 插入慢

To improve efficiency, please provide the following information. A clear problem description can help resolve the issue faster:

[TiDB Usage Environment] Production

[TiDB Version] 5.4

[Encountered Problem]

Our business has a scenario of batch insert and update, so we use the ON DUPLICATE KEY UPDATE feature. If the unique index does not exist, it inserts; if it exists, it updates.

The SQL is as follows:

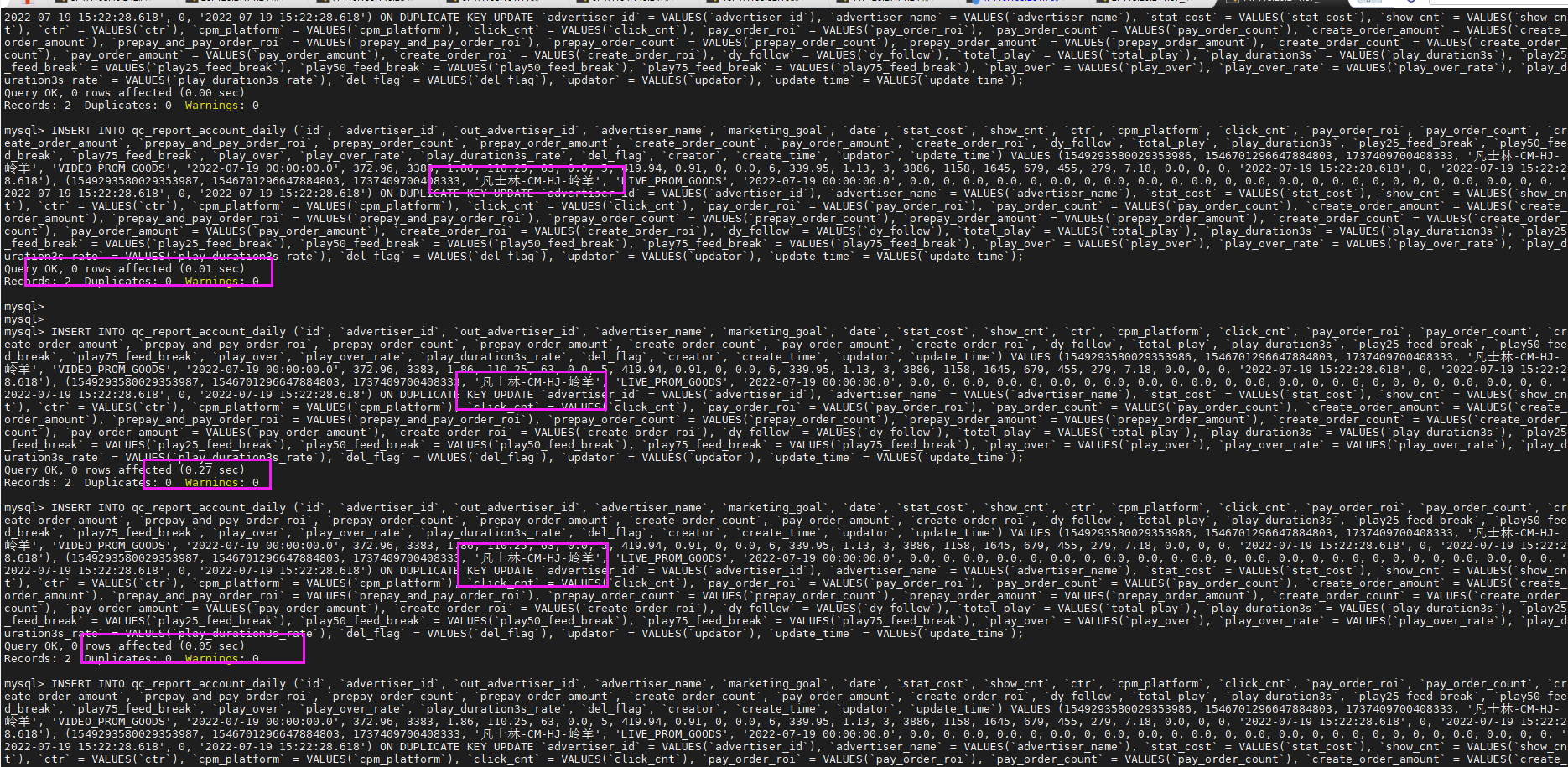

INSERT INTO qc_report_account_daily (`id`, `advertiser_id`, `out_advertiser_id`, `advertiser_name`, `marketing_goal`, `date`, `stat_cost`, `show_cnt`, `ctr`, `cpm_platform`, `click_cnt`, `pay_order_roi`, `pay_order_count`, `create_order_amount`, `prepay_and_pay_order_roi`, `prepay_order_count`, `prepay_order_amount`, `create_order_count`, `pay_order_amount`, `create_order_roi`, `dy_follow`, `total_play`, `play_duration3s`, `play25_feed_break`, `play50_feed_break`, `play75_feed_break`, `play_over`, `play_over_rate`, `play_duration3s_rate`, `del_flag`, `creator`, `create_time`, `updator`, `update_time`) VALUES (1548995354009075714, 1480189607163617287, 1719743539933197, 'Group 2 - City - Mingdi - Linfeng Small Shop - KN', 'VIDEO_PROM_GOODS', '2022-07-18 00:00:00.0', 0.0, 0, 0.0, 0.0, 0, 0.0, 0, 0.0, 0.0, 0, 0.0, 0, 0.0, 0.0, 0, 0, 0, 0, 0, 0, 0, 0.0, 0.0, 0, 0, '2022-07-18 19:37:25.995', 0, '2022-07-18 19:37:25.995'), (1548995354009075715, 1480189607163617287, 1719743539933197, 'Group 2 - City - Mingdi - Linfeng Small Shop - KN', 'LIVE_PROM_GOODS', '2022-07-18 00:00:00.0', 2.95, 124, 0.81, 23.79, 1, 0.0, 0, 0.0, 0.0, 0, 0.0, 0, 0.0, 0.0, 0, 108, 40, 28, 12, 6, 3, 2.78, 0.0, 0, 0, '2022-07-18 19:37:25.995', 0, '2022-07-18 19:37:25.995') ON DUPLICATE KEY UPDATE `advertiser_id` = VALUES(`advertiser_id`), `advertiser_name` = VALUES(`advertiser_name`), `stat_cost` = VALUES(`stat_cost`), `show_cnt` = VALUES(`show_cnt`), `ctr` = VALUES(`ctr`), `cpm_platform` = VALUES(`cpm_platform`), `click_cnt` = VALUES(`click_cnt`), `pay_order_roi` = VALUES(`pay_order_roi`), `pay_order_count` = VALUES(`pay_order_count`), `create_order_amount` = VALUES(`create_order_amount`), `prepay_and_pay_order_roi` = VALUES(`prepay_and_pay_order_roi`), `prepay_order_count` = VALUES(`prepay_order_count`), `prepay_order_amount` = VALUES(`prepay_order_amount`), `create_order_count` = VALUES(`create_order_count`), `pay_order_amount` = VALUES(`pay_order_amount`), `create_order_roi` = VALUES(`create_order_roi`), `dy_follow` = VALUES(`dy_follow`), `total_play` = VALUES(`total_play`), `play_duration3s` = VALUES(`play_duration3s`), `play25_feed_break` = VALUES(`play25_feed_break`), `play50_feed_break` = VALUES(`play50_feed_break`), `play75_feed_break` = VALUES(`play75_feed_break`), `play_over` = VALUES(`play_over`), `play_over_rate` = VALUES(`play_over_rate`), `play_duration3s_rate` = VALUES(`play_duration3s_rate`), `del_flag` = VALUES(`del_flag`), `updator` = VALUES(`updator`), `update_time` = VALUES(`update_time`);

out_advertiser_id + date form a unique index

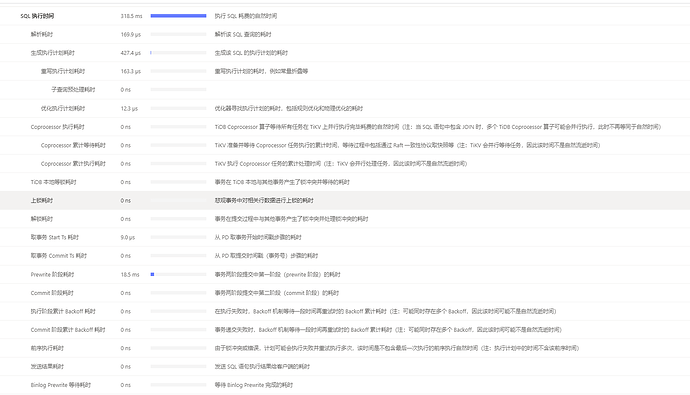

Executing this SQL takes about 350ms.

Experts, please take a look at what the problem might be. Thank you.

Machine configuration: 2 TiDB: 16C 32G, 3 TiKV: 16C 32G