Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: 求优化建议

【TiDB Usage Environment】

pd * 3 (4c8g)

tidb * 3(4c8g)

tikv * 3(4c8g256g)

【Overview】 Scenario + Problem Overview

**There are only 230,000 records.**

Table creation statement:

CREATE TABLE group_info (

group_id bigint(20) NOT NULL COMMENT ‘Group ID’,

parent_group_id bigint(20) NOT NULL COMMENT ‘Parent Group ID’,

parent_group_creator_id varchar(100) NOT NULL COMMENT ‘Parent Group Creator ID’,

group_title varchar(220) NOT NULL COMMENT ‘Group Name’,

group_type tinyint(4) NOT NULL COMMENT ‘Group Type’,

group_state tinyint(4) NOT NULL COMMENT ‘Group State’,

start_time datetime NOT NULL COMMENT ‘Start Time’,

end_time datetime NOT NULL COMMENT ‘End Time’,

group_creator_id varchar(100) NOT NULL COMMENT ‘Creator ID’,

referee_id varchar(100) NOT NULL DEFAULT ‘’ COMMENT ‘Referee ID’,

notice_type tinyint(4) NOT NULL DEFAULT ‘0’ COMMENT ‘Notice Type’,

is_delete tinyint(4) DEFAULT ‘0’ COMMENT ‘Is Deleted’,

create_time datetime NOT NULL COMMENT ‘Creation Time’,

update_time datetime NOT NULL COMMENT ‘Update Time’,

create_user varchar(50) NOT NULL COMMENT ‘Creator’,

update_user varchar(50) NOT NULL COMMENT ‘Updater’,

ts timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT ‘Timestamp’,

PRIMARY KEY (group_id) /*T![clustered_index] CLUSTERED */,

KEY idx_parent_group_id (parent_group_id) COMMENT ‘Parent Group Index’,

KEY idx_group_creator_id (group_creator_id) COMMENT ‘Group Creator ID Index’,

KEY idx_parent_group_id (parent_group_creator_id) COMMENT ‘Parent Group Creator ID Index’

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_bin COMMENT=‘Group Basic Information Table’

Query statement

Execution plan SQL: select group_id, group_creator_id from group_info where group_creator_id in ( ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ?) and group_state = 1 and is_delete = 0 order by group_id desc limit 10

Stress test SQL: select group_id, group_creator_id from group_info where group_creator_id in ( ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ? , ?) and group_state = 1 and start_time < now() and end_time > now() and is_delete = 0 order by group_id desc limit 11

【Background】 What operations have been performed

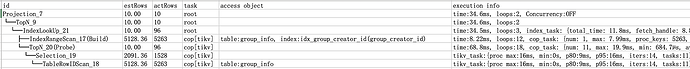

Execution plan:

【Phenomenon】 Business and database phenomenon

Stress test can only reach 85QPS, with only one TiKV CPU maintaining above 90%, while the other six machines are below 15%

【Problem】 Current issues encountered

Performance is much lower than expected, seeking optimization suggestions

One point of confusion: In the second phase of TiKV, condition filtering, it seems like it should stop after finding 10 records, why does it find all 1528 records that meet the conditions?

Understand that too much data retrieval can degrade performance, but even if it only hits a single TiKV, would it be this bad?

Because normal execution SQL takes about 30ms, it feels like the performance is good

(New user on trial, but have learned a lot of principles, should be able to understand)

【Business Impact】

【TiDB Version】

5.3

Company cluster, unable to execute scripts