Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

[TiDB Usage Environment] Test Environment

- PD 3 nodes: 16C32G

- TiDB 3 nodes: 16C32G

- TiFlash 3 nodes: 16C32G

- TiKV 20 nodes: 16C32G

[TiDB Version] v6.1.0

[Reproduction Path] Perform a stress test on the TiDB database using sysbench

- `sysbench --db-driver=mysql --time=300 --threads=100 --report-interval=1 --mysql-host= --mysql-port=4000 --mysql-user= --mysql-password= --mysql-db=test --tables=10 --table_size=1000000 oltp_read_write prepare`
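To see how far a prepare run like the one above actually got, the per-table row counts and the server's timeout settings can be collected with a small script. The following is a minimal Python sketch, not the poster's method. It assumes a PEP 249 (DB-API 2.0) cursor from a MySQL-protocol driver such as PyMySQL (the driver choice is an assumption). The helper names `sbtest_table_names`, `count_rows`, `completion` and `timeout_variables` are hypothetical. Table names follow sysbench's `sbtest1` .. `sbtestN` convention, as seen in the error message (`sbtest9`).

```python
from typing import Dict, List


def sbtest_table_names(tables: int, db: str = "test") -> List[str]:
    # sysbench's oltp_common.lua creates tables named sbtest1 .. sbtestN
    return [f"{db}.sbtest{i}" for i in range(1, tables + 1)]


def count_rows(cursor, tables: int, db: str = "test") -> Dict[str, int]:
    """Run SELECT COUNT(*) on every sbtest table and return {table: rows}.

    `cursor` is assumed to be any PEP 249 cursor (e.g. from PyMySQL).
    Table names are generated above, not taken from user input, so
    formatting them into the SQL string is safe here.
    """
    counts: Dict[str, int] = {}
    for name in sbtest_table_names(tables, db):
        cursor.execute(f"SELECT COUNT(*) FROM {name}")
        (rows,) = cursor.fetchone()
        counts[name] = int(rows)
    return counts


def completion(counts: Dict[str, int], table_size: int) -> Dict[str, float]:
    """Fraction of the requested --table_size that each table actually holds."""
    return {name: rows / table_size for name, rows in counts.items()}


def timeout_variables(cursor) -> Dict[str, str]:
    """Return the rows of SHOW VARIABLES LIKE '%timeout' as {name: value}."""
    cursor.execute("SHOW VARIABLES LIKE '%timeout'")
    return {name: value for name, value in cursor.fetchall()}
```

With PyMySQL, for example, the cursor would come from `pymysql.connect(host=..., port=4000, user=..., password=..., database="test").cursor()`. `completion` should be given the `--table_size` value that was actually passed to sysbench.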

[Encountered Issue: Phenomenon and Impact]

- Background: To evaluate cluster performance, a stress test was run on the TiDB cluster with sysbench, generating 10 tables with 100 million rows each.

- Phenomenon: The following error occurred during the prepare phase:

  ```
  FATAL: mysql_drv_query() returned error 2013 (Lost connection to MySQL server during query) for query 'INSERT INTO sbtest9(k, c, pad) VALUES(50300166, '16357275439-41970985209-34833281730-07150732211-32256237037-94842996031-08714086735-83899234046-58786808990-64628079874', '79244697413-69968263748-31322533223-94195053462-84736177096') (...)
  FATAL: 'sysbench.cmdline.call_command' function failed: /usr/share/sysbench/oltp_common.lua:230: db_bulk_insert_next() failed
  ```

  The prepare phase was then aborted.

- Investigation Results:

-

Using

select count(*) from test.sbtest<id>to query each test table at the time revealed that the number of inserted rows was around 4.5 million to 5 million, less than half of the expected number of rows. -

Using

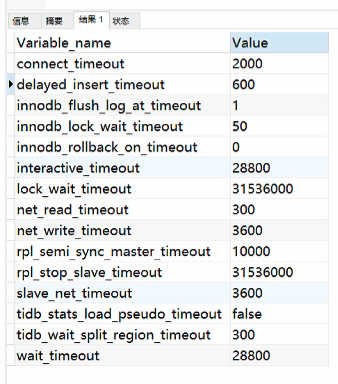

show variables like '%timeout'yielded the following results:

-