Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiKV disk size and Region count in practice (tikv磁盘大小、region数实践)

[TiDB Usage Environment] Production Environment / Testing / PoC

Production Environment

[TiDB Version]

TiDB v6.5.5 and v5.4.0

[Encountered Issues: Issue Phenomenon and Impact]

Questions:

- Are there any production practices using HDD disks to deploy clusters?

- With HDDs, what is the maximum practical data size for a single TiKV instance, in terms of disk usage and number of Regions (each TiKV is configured with 30GB of memory)?

- What is the performance difference between HDD and SSD?

- When using large HDDs, what is the recommended number of regions for a single TiKV, and the recommended region size?

Having worked with numerous TiDB clusters, here are my personal views:

- In production, I haven’t encountered any deployments of TiKV using HDDs. TiDB has lower requirements, but since PD and TiDB are often co-located, HDDs are rarely used. I once encountered a customer deploying on virtual machines with NAS disks, and PD and TiKV frequently had inexplicable issues, all pointing to insufficient disk performance. Eventually, all such clusters had their disks replaced.

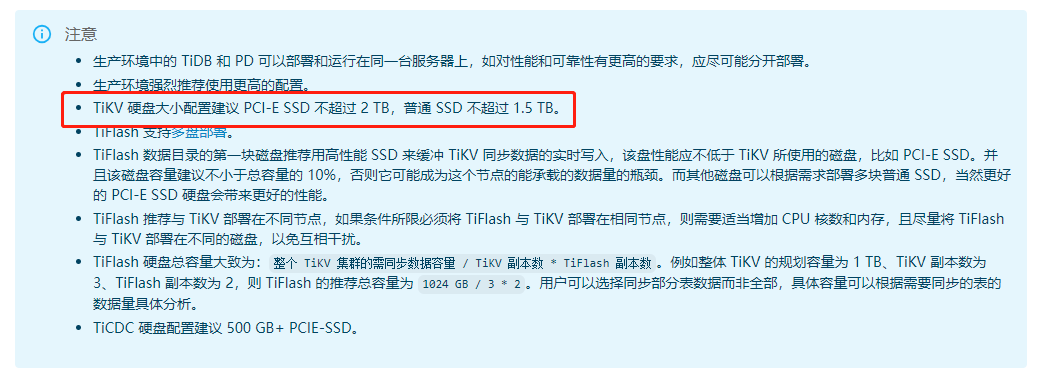

- For your TiKV node with 30GB of memory, I estimate the CPU allocation to be around 8-12 cores at most. With SSDs, even 1TB per node might be more than enough; with HDDs, the capacity would need to be smaller, because the slowest component (here the disk) limits how much data the node can serve.

- The performance difference is significant. SSDs are generally categorized into three levels: SATA SSD (around 500MB/s), SAS SSD (around 800MB/s), and NVMe SSD (2000MB/s and above). A regular HDD reaching 300MB/s is already impressive.

- Generally, larger disks require more CPU and memory, unless it’s a cold archive scenario with more writes and fewer reads. Based on your needs, HDDs are generally not recommended.
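To put the bandwidth figures from the list above in perspective, here is a rough sketch of how long it would take to read or write a given amount of data at each speed. The 1 TB data volume is a hypothetical example, and the bandwidth numbers are the approximate sequential figures quoted above, not measurements. Random IO on an HDD is far slower than its sequential peak, so real gaps are likely larger.

```python
# Rough time to move a volume of data at a sustained bandwidth.
# Bandwidth values are the approximate figures quoted in this thread.
DISK_BANDWIDTH_MB_S = {
    "HDD (optimistic)": 300,
    "SATA SSD": 500,
    "SAS SSD": 800,
    "NVMe SSD": 2000,
}


def transfer_hours(data_gb: float, bandwidth_mb_s: float) -> float:
    """Hours needed to move data_gb gigabytes at bandwidth_mb_s (1 GB = 1000 MB)."""
    seconds = data_gb * 1000 / bandwidth_mb_s
    return seconds / 3600


if __name__ == "__main__":
    data_gb = 1000  # hypothetical: 1 TB of data on one TiKV node
    for disk, bandwidth in DISK_BANDWIDTH_MB_S.items():
        print(f"{disk:18s} {transfer_hours(data_gb, bandwidth):5.2f} h")
```

This kind of estimate matters for operations such as rebalancing or recovering a node, where a whole node's data has to be moved.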

In summary, it’s not that TiDB can’t run on HDDs, but the risk is extremely high, leading to various odd issues. After extensive troubleshooting, you’ll likely end up replacing the disks anyway, so there’s no need to waste time on unnecessary experiments.

He can accept lowering the operating frequency and reducing the data processing size to adapt to the reduced configuration as well.

In terms of single-IO latency: a single IO on an SSD generally completes in 0.1-1ms, while a single IO on an HDD takes 4-10ms.
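As a back-of-the-envelope illustration of what those latencies mean, the sketch below converts per-IO latency into the number of IOs a single thread can complete per second when it issues them one after another (queue depth 1). This is simple arithmetic under that assumption; real devices, especially SSDs, reach much higher IOPS with parallel requests.

```python
# Latency ranges (milliseconds per IO) as quoted in this thread.
LATENCY_MS = {
    "SSD": (0.1, 1.0),
    "HDD": (4.0, 10.0),
}


def iops_at_queue_depth_one(latency_ms: float) -> float:
    """IOs per second for one thread issuing IOs back to back."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for disk, (best_ms, worst_ms) in LATENCY_MS.items():
        low = iops_at_queue_depth_one(worst_ms)
        high = iops_at_queue_depth_one(best_ms)
        print(f"{disk}: roughly {low:.0f} to {high:.0f} IOPS per thread")
```

With these numbers a single thread gets on the order of 100-250 IOPS from an HDD, versus 1,000-10,000 from an SSD.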

The performance gap between SSD and HDD is like night and day. You can perform an IO test yourself.

IOPS test reference command

fio -group_reporting -thread -name=iops_test -rw=randwrite -direct=1 -size=8G -numjobs=8 -ioengine=psync -bs=4k -ramp_time=10 -randseed=0 -runtime=60 -time_based

Read/write bandwidth test reference commands

fio -group_reporting -thread -name=iops_test -rw=randwrite -direct=1 -size=8G -numjobs=8 -ioengine=psync -bs=1m -ramp_time=10 -randseed=0 -runtime=60 -time_based

fio -group_reporting -thread -name=iops_test -rw=randread -direct=1 -size=8G -numjobs=8 -ioengine=psync -bs=1m -ramp_time=10 -randseed=0 -runtime=60 -time_based
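To compare results across machines, it can help to have fio write JSON and pull out only the headline numbers. The sketch below assumes the commands above are run with `--output-format=json --output=result.json` appended, and that the report follows the layout of recent fio 3.x releases (a `jobs` list whose `read` and `write` sections contain `iops`, `bw` in KiB/s, and `lat_ns.mean`). Those field names are my assumption about your fio version, so check them against your own output. The function name `summarize_fio` is made up for this example.

```python
import json
import sys


def summarize_fio(report):
    """Extract IOPS, bandwidth (MiB/s) and mean latency (ms) per job and direction."""
    rows = []
    for job in report.get("jobs", []):
        for direction in ("read", "write"):
            stats = job.get(direction, {})
            if not stats.get("iops"):
                continue  # this direction was not exercised by the job
            rows.append({
                "job": job.get("jobname"),
                "direction": direction,
                "iops": round(stats["iops"]),
                # assumed: "bw" is reported in KiB/s
                "bandwidth_mib_s": stats.get("bw", 0) / 1024,
                # assumed: "lat_ns" holds total latency in nanoseconds
                "mean_latency_ms": stats.get("lat_ns", {}).get("mean", 0) / 1e6,
            })
    return rows


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as fh:
        for row in summarize_fio(json.load(fh)):
            print(row)
```

Saved under a name of your choice and run with the path to `result.json` as its only argument, it prints one line per job and direction.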

NVMe drives typically have microsecond-level latency, so latency in the millisecond range on such a drive usually indicates a problem.

There is definitely a big IO difference between HDD and SSD. I have also tested the IO of a single SSD and a single HDD. In theory, the read and write IO of a single HDD meets our requirements: if there are no hotspot issues and there are many TiKV instances, the read and write traffic per disk seems to be only a few dozen MB/s. So I want to know whether anyone has deployed TiKV on HDDs in production.

Those who deployed with HDD all regretted it.

Users of TiDB generally have large amounts of data, and HDDs can’t keep up in terms of performance. Slow queries are mostly inserts, so they all eventually switched to SSDs.

Is there an approximate write volume and total storage level?

NVMe is faster than regular SSDs. Previously, the price was relatively high, but now it seems to be about the same. However, the price of SSDs and regular mechanical drives is now almost the same, so if you are making a new purchase, SSDs are still better.

I haven’t encountered a database using HDDs in the past 5 years.

The clusters I’ve seen generally have fewer than 20k Regions per TiKV instance, and I haven’t used HDDs. For storing cold data, HDDs can also be considered.

The performance gap between HDD and SSD is 10-20 times. Disk size depends on the amount of data and is usually 2-3 times the data volume. Region settings should be adjusted according to the business situation and workload type. If the business leans towards large tables and large transactions, the Region size can be increased appropriately.
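For a rough feel of how data volume, disk size, and Region count relate, here is a small sizing sketch. It assumes the default Region split size of 96 MiB used by the TiDB versions in this thread (v5.4 and v6.5) and treats every Region as full, so the real Region count will usually be somewhat higher. The 2-3x disk factor comes from the reply above; the function names and sample numbers are only illustrative.

```python
import math

# Assumption: default Region split size for TiDB v5.x / v6.x.
DEFAULT_REGION_SIZE_MIB = 96


def estimated_regions(store_data_gib: float,
                      region_size_mib: int = DEFAULT_REGION_SIZE_MIB) -> int:
    """Approximate Region count on one TiKV instance holding store_data_gib of data."""
    return math.ceil(store_data_gib * 1024 / region_size_mib)


def recommended_disk_gib(store_data_gib: float, headroom_factor: float = 2.5) -> float:
    """Disk size using the '2-3 times the data volume' rule of thumb."""
    return store_data_gib * headroom_factor


if __name__ == "__main__":
    data_gib = 1024  # hypothetical: 1 TiB of data on one TiKV instance
    print("Regions at 96 MiB:", estimated_regions(data_gib))
    print("Regions at 256 MiB:", estimated_regions(data_gib, 256))
    print("Suggested disk (GiB):", recommended_disk_gib(data_gib))
```

Under these assumptions, the "fewer than 20k Regions per TiKV" figure mentioned earlier corresponds to a bit under 2 TiB of data per instance at the default Region size.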

The size of the region is determined by the amount of data.

Most people use SSDs now, right?

What are the approximate IOPS and read/write bandwidth of the SSDs in your production environment? Could you provide some test results so I can compare them with our current environment?

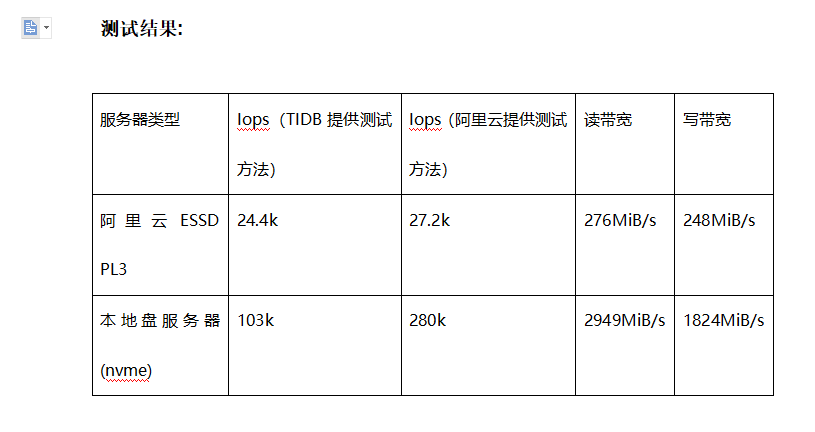

I am using Alibaba Cloud’s local SSD, and I have previously compared it with Alibaba Cloud’s ESSD cloud disk. There are two testing methods, one provided by Alibaba Cloud and the other by TiDB. The method provided by Alibaba Cloud is somewhat idealized.

280k IOPS already exceeds the speed of a typical single NVMe SSD, right?