Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: PD leader switch procedure and precautions (pd切换leader流程及注意事项)

[TiDB Usage Environment] Production Environment / Testing / Poc

[TiDB Version] v5.4.0

[Reproduction Path] We plan to scale in and remove the old servers, and only the PD nodes are left. How do we switch the PD leader, and what should we watch out for during the process?


It switches automatically, right? Just maintain 3 instances and switch them one by one.

Is it okay to first scale down the two old PDs that are not leader nodes, and then switch the last PD after adding three new PDs?

During off-peak hours, you can scale in the 3 old PDs directly, and the leader will switch automatically.

Scaling in directly may cause short-term errors for the business. It is recommended to first scale in the two old PD nodes that are not the leader, then transfer the leader away from the last old PD. After the transfer is complete, take the last old PD offline. This way, the business should not be affected.

You can switch directly with pd-ctl. First run

tiup ctl:v{version} pd member

to check the name of each member. Then run

tiup ctl:v{version} pd member leader transfer {target pd name}

Once the leader is on a new PD, scale in the old ones.
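As a rough illustration of the steps above, here is a dry-run sketch that only prints the pd-ctl commands rather than running them. The version comes from the original post; the PD address and the member name `pd-new-1` are placeholders I made up, so substitute your own. It uses the `member leader transfer` subcommand form and adds `member leader show` to confirm the result.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints pd-ctl commands instead of executing them.
PD_VERSION="v5.4.0"               # version from the original post
PD_ADDR="http://127.0.0.1:2379"   # hypothetical PD endpoint, replace with yours
TARGET_PD="pd-new-1"              # hypothetical name of the new PD member

# Build one pd-ctl invocation; remove the leading "echo" to run it for real.
pdctl() { echo "tiup ctl:${PD_VERSION} pd -u ${PD_ADDR} $*"; }

pdctl member                                # list members and their names
pdctl member leader show                    # see who is leader now
pdctl member leader transfer "${TARGET_PD}" # hand leadership to the new PD
pdctl member leader show                    # confirm the leader changed
```

Review the printed commands first, then run them one at a time and check the leader after the transfer before scaling anything in.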

If you want to be more meticulous, you can set a higher leader priority for the new PDs. Once the priority is set, any future election will likely pick one of the new PDs, unless all the new PDs fail.

tiup ctl:v{version} pd member leader_priority {pd name} {priority number}

Members without an explicit priority should default to 0. If you set all new PDs to 1, future elections should only produce a PD leader from the new PDs. With the priority set, the leader should move to a new PD automatically, so you should not need to transfer it manually.
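A minimal dry-run sketch of the priority approach, assuming three new PDs with the made-up names `pd-new-1` to `pd-new-3` and a placeholder PD address. It only prints the `leader_priority` commands so you can check them before running anything.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints pd-ctl commands instead of executing them.
PD_VERSION="v5.4.0"                    # version from the original post
PD_ADDR="http://127.0.0.1:2379"        # hypothetical PD endpoint
NEW_PDS="pd-new-1 pd-new-2 pd-new-3"   # hypothetical names of the new PD members
PRIORITY=1                             # higher than the assumed default of 0

# Build one pd-ctl invocation; remove the leading "echo" to run it for real.
pdctl() { echo "tiup ctl:${PD_VERSION} pd -u ${PD_ADDR} $*"; }

# Print one leader_priority command per new PD.
set_priorities() {
  for name in ${NEW_PDS}; do
    pdctl member leader_priority "${name}" "${PRIORITY}"
  done
}

set_priorities
pdctl member leader show   # afterwards, check where the leader ended up
```

If the leader has not moved after a while, a manual `member leader transfer` is still available as a fallback.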

Keep the old PD leader node, scale in the other old nodes, then scale out the new PD nodes, transfer the leader to a new node, and finally remove the last old node.
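The order described in this reply could be laid out roughly as follows. This is a dry-run sketch that only prints a plan; the cluster name, node addresses, member name, and topology file name are all placeholders I invented for illustration, and the topology file for the new PDs has to be written separately.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the plan; nothing is executed against a cluster.
CLUSTER="tidb-prod"                               # hypothetical cluster name
PD_VERSION="v5.4.0"                               # version from the original post
PD_ADDR="http://10.0.1.1:2379"                    # hypothetical: old PD holding the leader
OLD_LEADER_NODE="10.0.1.1:2379"                   # hypothetical old leader node
OLD_FOLLOWER_NODES="10.0.1.2:2379,10.0.1.3:2379"  # hypothetical old non-leader nodes
NEW_LEADER_NAME="pd-new-1"                        # hypothetical new PD member name
SCALE_OUT_TOPOLOGY="scale-out-pd.yaml"            # hypothetical topology file

plan() {
  # 1. Scale in the old PDs that are not the leader.
  echo "tiup cluster scale-in ${CLUSTER} --node ${OLD_FOLLOWER_NODES}"
  # 2. Scale out the new PD nodes.
  echo "tiup cluster scale-out ${CLUSTER} ${SCALE_OUT_TOPOLOGY}"
  # 3. Transfer the leader to a new PD and confirm it.
  echo "tiup ctl:${PD_VERSION} pd -u ${PD_ADDR} member leader transfer ${NEW_LEADER_NAME}"
  echo "tiup ctl:${PD_VERSION} pd -u ${PD_ADDR} member leader show"
  # 4. Remove the last old PD and check the cluster state.
  echo "tiup cluster scale-in ${CLUSTER} --node ${OLD_LEADER_NODE}"
  echo "tiup cluster display ${CLUSTER}"
}

plan
```

Note that after step 1 the cluster briefly runs on a single PD, so it is worth doing steps 1 and 2 back to back during a quiet period.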

You can directly scale up or down, there shouldn’t be any issues.

It’s already version 5+, just go ahead and do it.

Or you can forcefully remove the leader from the current member:

tiup ctl:v{version} pd member leader resign
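For the resign route, a small dry-run sketch, again assuming a placeholder PD address. It prints a check of the leader before and after the resign, since with `resign` you do not choose which member takes over.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints pd-ctl commands instead of executing them.
PD_VERSION="v5.4.0"               # version from the original post
PD_ADDR="http://127.0.0.1:2379"   # hypothetical PD endpoint

# Build one pd-ctl invocation; remove the leading "echo" to run it for real.
pdctl() { echo "tiup ctl:${PD_VERSION} pd -u ${PD_ADDR} $*"; }

pdctl member leader show     # note the current leader
pdctl member leader resign   # current leader steps down, a new election follows
pdctl member leader show     # verify which member was elected
```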

On my side, everything is handed over to the cluster for automatic switching, without manual switching. It will automatically switch during scaling down.


It switches automatically.

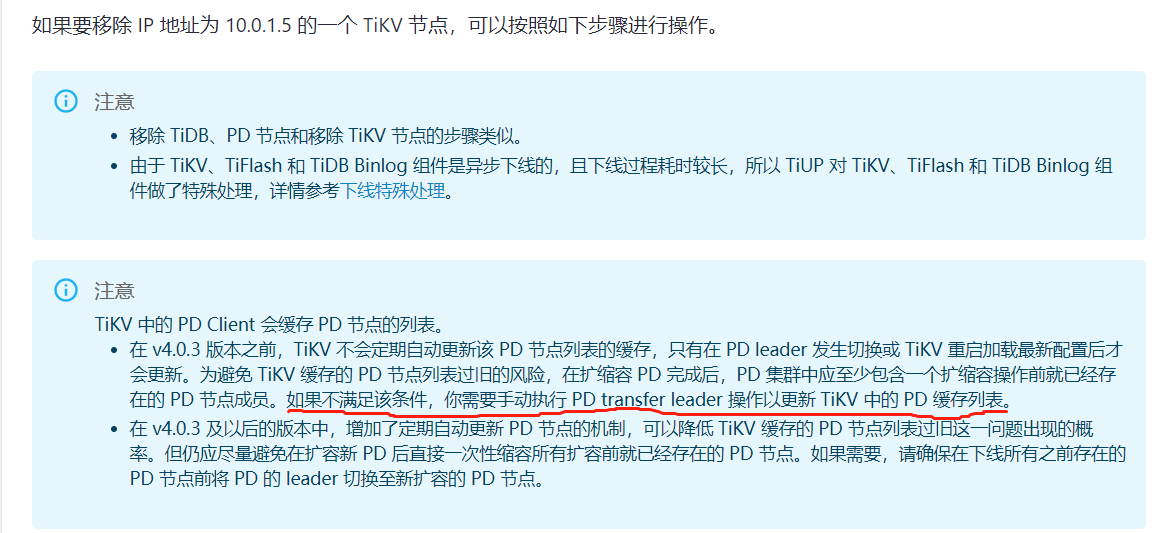

Whether to switch manually depends on the version. As I mentioned earlier, v5.x can switch automatically. Here’s a picture for reference:

Upgrade the version, and it will be fine.

Sure. I previously tested replacing PDs by scaling out first and then scaling in, with no issues.

Keep one old node, start a new node, transfer the leader to the new node, stop the old node, scale it in, and then restart the entire cluster.

I haven’t paid attention to this. During off-peak periods, we scale in directly, and the leader switches on its own. There’s no requirement for which node must be the leader; the cluster can decide on its own.