Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Questions about TiKV instance memory usage

[TiDB Usage Environment] Production Environment

[TiDB Version] 5.3.0

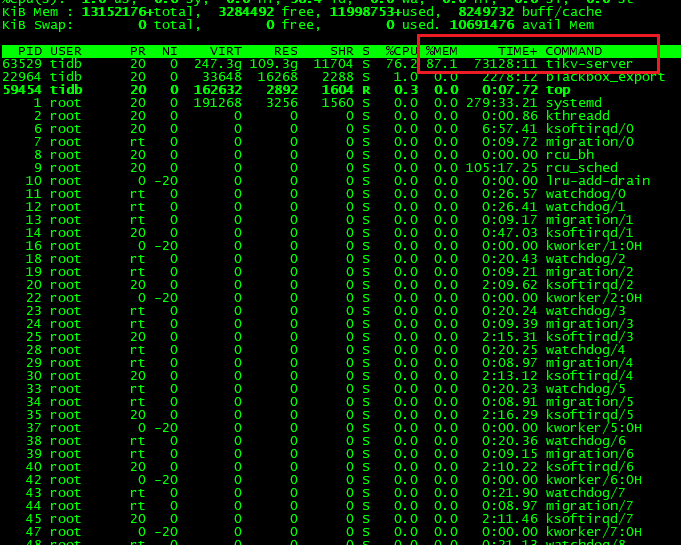

[Encountered Problem] The `storage.block-cache.capacity` parameter is set to 90GiB to cap the block cache size. However, the Grafana Block Cache Size panel shows only 75G, and the system's `top` command shows the tikv-server process using 111G of memory.

[Problem Phenomenon and Impact]

- Below is the TiKV configuration shown by `tiup cluster edit-config <cluster_name>`:

  ```yaml
  tikv:
    raftdb.defaultcf.block-cache-size: 4GiB
    readpool.unified.max-thread-count: 38
    rocksdb.defaultcf.block-cache-size: 50GiB
    rocksdb.lockcf.block-cache-size: 4GiB
    rocksdb.writecf.block-cache-size: 25GiB
    server.grpc-concurrency: 14
    server.grpc-raft-conn-num: 5
    split.qps-threshold: 2000
    storage.block-cache.capacity: 90GiB
  ```

-

Below is the

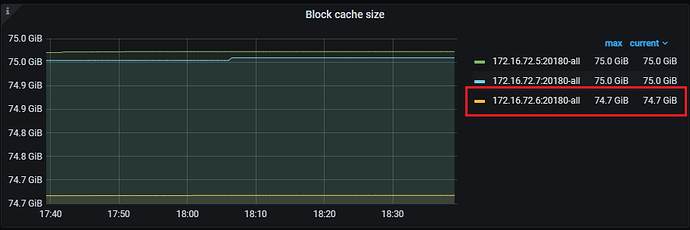

Grafana->TiKV-Detail->RocksDB-KV->Block Cache Sizepanel,

-

Below is the

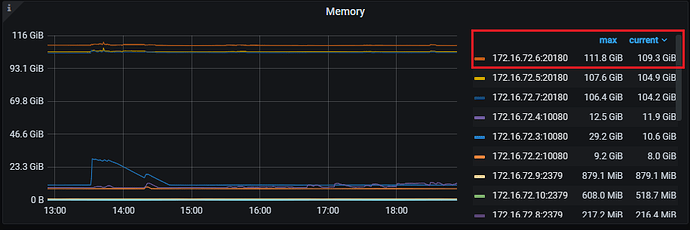

Grafana->TiKV-Detail->Cluster->Memorypanel, which is consistent with the system’s top display.
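To make the sizes in the configuration listed above easier to compare, here is a minimal Python sketch (not part of the original post) that sums the per-column-family `block-cache-size` entries and reads `storage.block-cache.capacity`. It only does arithmetic on the posted values. Which of these settings TiKV actually applies depends on whether the shared block cache (`storage.block-cache.shared`, which does not appear in the posted config) is enabled, and the sketch does not check that. The helper name `parse_sizes` is hypothetical.

```python
# Values copied from the posted `tiup cluster edit-config` output.
# Only the size-related keys are kept; nothing else about the cluster is assumed.
POSTED_TIKV_CONFIG = """
raftdb.defaultcf.block-cache-size: 4GiB
rocksdb.defaultcf.block-cache-size: 50GiB
rocksdb.lockcf.block-cache-size: 4GiB
rocksdb.writecf.block-cache-size: 25GiB
storage.block-cache.capacity: 90GiB
"""


def parse_sizes(text: str) -> dict:
    """Hypothetical helper: parse 'key: <n>GiB' lines into {key: n} (GiB only)."""
    sizes = {}
    for line in text.strip().splitlines():
        key, value = (part.strip() for part in line.split(":", 1))
        if not value.endswith("GiB"):
            raise ValueError(f"unsupported unit in {line!r}")
        sizes[key] = int(value[: -len("GiB")])
    return sizes


sizes = parse_sizes(POSTED_TIKV_CONFIG)
# Sum of every per-CF block-cache-size entry (raftdb + rocksdb column families).
per_cf_total = sum(v for k, v in sizes.items() if k.endswith(".block-cache-size"))
shared_capacity = sizes["storage.block-cache.capacity"]
print(f"per-CF block-cache-size total: {per_cf_total} GiB")   # 83 GiB
print(f"storage.block-cache.capacity: {shared_capacity} GiB")  # 90 GiB
```

The per-CF entries add up to 83GiB, which differs from both the 90GiB capacity and the 75G shown in Grafana.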

Questions

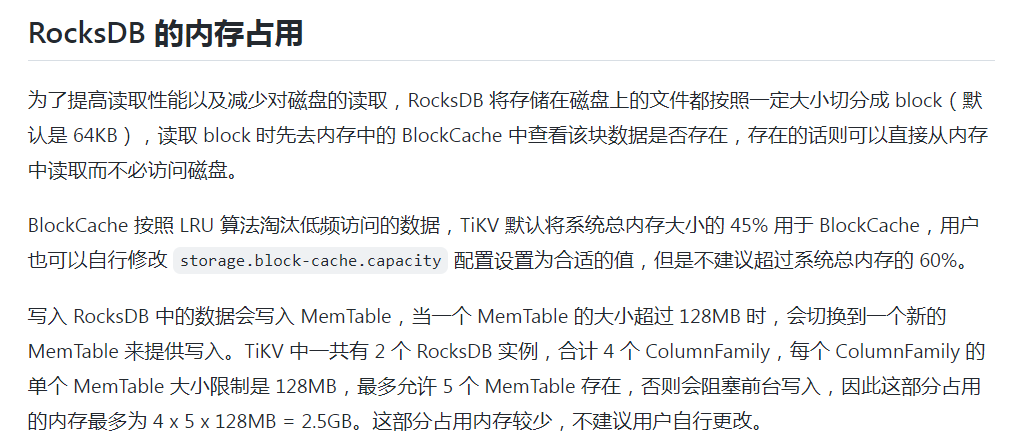

- Shouldn't the value in the Grafana -> TiKV-Detail -> RocksDB-KV -> Block Cache Size panel be 90G? Why is it 75G?
- The Grafana -> TiKV-Detail -> Cluster -> Memory panel shows TiKV using 111G of memory in total. What is using the remaining 36G (111G - 75G)? Which panel shows this?
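The two questions come down to two gaps between the posted figures. A minimal sketch follows, treating the G and GiB values in the post as the same unit (an assumption, since the post mixes both notations). The function name `memory_gap` is hypothetical, and the code only restates the numbers; it does not attribute the 36G to any component.

```python
def memory_gap(process_gib: float, block_cache_gib: float, capacity_gib: float) -> dict:
    """Hypothetical helper: restate the two gaps asked about in the question."""
    return {
        # How far the observed block cache is below the configured capacity.
        "capacity_not_yet_filled": capacity_gib - block_cache_gib,
        # Process memory not accounted for by the block cache panel.
        "memory_outside_block_cache": process_gib - block_cache_gib,
    }


# Figures as posted: 111G (top / Memory panel), 75G (Block Cache Size panel),
# 90GiB (storage.block-cache.capacity).
gap = memory_gap(process_gib=111, block_cache_gib=75, capacity_gib=90)
print(gap)  # {'capacity_not_yet_filled': 15, 'memory_outside_block_cache': 36}
```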