Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: 关于 TiKV OOM 现象的疑问 (Questions about the TiKV OOM phenomenon)

[Usage Environment] Production (3 TiDB/3 PD/3 TiKV)

[TiDB Version] 5.3.0

[Encountered Problem] Some memory limit parameters of TiKV are not well understood

- TiKV instances are deployed independently, with the following memory configuration (host physical memory is 128G; `free -g` shows 125G):

tikv:

raftdb.defaultcf.block-cache-size: 4GiB

readpool.unified.max-thread-count: 38

rocksdb.defaultcf.block-cache-size: 50GiB

rocksdb.lockcf.block-cache-size: 4GiB

rocksdb.writecf.block-cache-size: 25GiB

server.grpc-concurrency: 14

server.grpc-raft-conn-num: 5

split.qps-threshold: 2000

storage.block-cache.capacity: 90GiB

mysql> show config where name like 'storage.block-cache.capacity';

+------+---------------------+------------------------------+-------+

| Type | Instance | Name | Value |

+------+---------------------+------------------------------+-------+

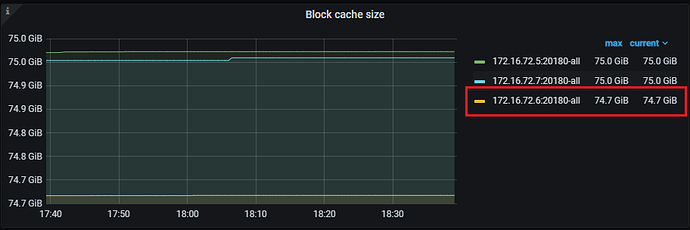

| tikv | 192.168.3.225:20160 | storage.block-cache.capacity | 75GiB |

| tikv | 192.168.3.224:20160 | storage.block-cache.capacity | 75GiB |

| tikv | 192.168.3.226:20160 | storage.block-cache.capacity | 75GiB |

+------+---------------------+------------------------------+-------+

3 rows in set (0.01 sec)

-

Grafana->TiKV-Detail->RocksDB-KV->Block Cache Size panel

- THP (Transparent HugePage) is not disabled:

[tidb@idc1-offline-tikv2 scripts]$ cat /proc/meminfo|grep Huge

AnonHugePages: 4096 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

[tidb@idc1-offline-tikv2 scripts]$ cat /sys/kernel/mm/transparent_hugepage/defrag

[always] madvise never
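For readers unfamiliar with the sysfs output above: the kernel marks the active setting with square brackets. Below is a minimal Python sketch that extracts it; the helper name `active_thp_mode` is made up for illustration. Note that the file shown above is `defrag`, while whether THP itself is on is controlled by the sibling file `/sys/kernel/mm/transparent_hugepage/enabled`, which uses the same bracket format.

```python
import re


def active_thp_mode(sysfs_line: str) -> str:
    """Return the bracketed (active) value from a THP sysfs line.

    Hypothetical helper for illustration only.
    """
    match = re.search(r"\[([^\]]+)\]", sysfs_line)
    if match is None:
        raise ValueError(f"no active value found in: {sysfs_line!r}")
    return match.group(1)


# Line copied from the output above.
print(active_thp_mode("[always] madvise never"))
```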

- Before OOM, the TiKV log is as follows:

[2022/09/15 21:02:37.837 +08:00] [WARN] [config.rs:2650] ["memory_usage_limit:ReadableSize(134217728000) > recommanded:ReadableSize(103432923648), maybe page cache isn't enough"]

[2022/09/15 21:02:37.880 +08:00] [WARN] [config.rs:2650] ["memory_usage_limit:ReadableSize(137910564864) > recommanded:ReadableSize(103432923648), maybe page cache isn't enough"]

[2022/09/15 21:02:37.882 +08:00] [INFO] [server.rs:1408] ["beginning system configuration check"]

[2022/09/15 21:02:37.882 +08:00] [INFO] [config.rs:919] ["data dir"] [mount_fs="FsInfo { tp: \"ext4\", opts: \"rw,noatime,nodelalloc,stripe=64,data=ordered\", mnt_dir: \"/tidb-data\", fsname: \"/dev/sdb1\" }"] [data_path=/tidb-data/tikv-20160]

[2022/09/15 21:02:37.883 +08:00] [INFO] [config.rs:919] ["data dir"] [mount_fs="FsInfo { tp: \"ext4\", opts: \"rw,noatime,nodelalloc,stripe=64,data=ordered\", mnt_dir: \"/tidb-data\", fsname: \"/dev/sdb1\" }"] [data_path=/tidb-data/tikv-20160/raft]

[2022/09/15 21:02:37.898 +08:00] [INFO] [server.rs:316] ["using config"] [config="{\"log-level\":\"info\",\"log-file\":\"/tidb-deploy/tikv-20160/log/tikv.log\",\"log-format\":\"text\",\"slow-log-file\":\"\",\"slow-log-threshold\":\"1s\",\"log-rotation-timespan\":\"1d\",\"log-rotation-size\":\"300MiB\",\"panic-when-unexpected-key-or-data\":false,\"enable-io-snoop\":true,\"abort-on-panic\":false,\"memory-usage-limit\":\"125GiB\",\"memory-usage-high-water\":0.9,....
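The `ReadableSize(...)` values in the WARN lines are raw byte counts, which are hard to compare with the GiB figures in the configuration. The Python sketch below only converts the numbers copied from the log; the helper name `to_gib` is made up for illustration.

```python
GIB = 1024 ** 3  # bytes per GiB


def to_gib(num_bytes: int) -> float:
    """Convert a raw byte count to GiB."""
    return num_bytes / GIB


# Values copied from the two WARN lines above.
limits = [134217728000, 137910564864]
recommended = 103432923648

for limit in limits:
    print(f"memory_usage_limit = {to_gib(limit):.2f} GiB")
print(f"recommended        = {to_gib(recommended):.2f} GiB")

# Ratio of the recommended value to the second limit.
print(f"recommended / limit = {recommended / limits[1]:.2f}")
```

The first limit is exactly 125 GiB, which matches the `"memory-usage-limit":"125GiB"` entry in the config dump. The recommended value is exactly 75% of the second limit. That is consistent with a rule of "75% of detected memory", but it is an inference from the numbers, not something the log states.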

A colleague increased the Block Cache Size to 90G to increase the amount of data cached in memory. I personally believe that the parameter is set too high, which is the main cause of the OOM.

According to the description in https://asktug.com/t/topic/933132/2, when Block Cache Size is set to 90G:

- `memory-usage-limit` = Block Cache * 10/6 = 150G. Since physical memory is 128G, `memory-usage-limit` = 128G.

- `memory-usage-high-water` is 0.9, so `memory-usage-limit` * 0.9 = 128G * 0.9 = 115G.

- `Page-Cache` = physical memory * 0.25 = 128G * 0.25 = 32G.
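The arithmetic above can be reproduced with a short Python sketch, which makes it easy to compare the 90G and 75G settings. The formulas are the ones quoted in this post from the linked thread and have not been checked against the TiKV source. The function name `estimate_limits` and its parameters are made up for illustration.

```python
def estimate_limits(block_cache_gib, physical_gib,
                    high_water=0.9, page_cache_ratio=0.25):
    """Apply the rules of thumb quoted in the post (all values in GiB).

    Assumption: memory-usage-limit = block cache * 10/6, capped at
    physical memory; page cache = 25% of physical memory.
    """
    raw_limit = block_cache_gib * 10 / 6
    limit = min(raw_limit, physical_gib)
    return {
        "raw_limit": raw_limit,
        "memory_usage_limit": limit,
        "high_water_mark": limit * high_water,
        "page_cache": physical_gib * page_cache_ratio,
    }


for cache in (90, 75):
    est = estimate_limits(block_cache_gib=cache, physical_gib=128)
    print(cache, {name: round(value, 1) for name, value in est.items()})
```

Under these assumptions, a 90G block cache plus a 32G page cache already adds up to 122G on a 128G host, before counting any other TiKV memory.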

Questions

- What is the role of `memory-usage-high-water` here? It seems to have no effect?

- Can the size of `Page-Cache` be adjusted? Is it by default 1/4 of the physical memory?

- In the future, can we set a total memory limit parameter for TiKV, like Oracle’s SGA, such as `tikv_memory_target`? TiKV could then automatically adjust the memory limits of its internal components based on a ratio or on running status. At the same time, could the memory limits of TiKV’s internal components still be specified explicitly?

- After one TiKV instance restarts due to OOM, its memory usage drops. The memory usage of the other two also drops. What is the reason for this?

- I set storage.block-cache.capacity=75G through the set command, while the TiKV configuration file sets it to 90G. Grafana monitoring shows that storage.block-cache.capacity is 75G. Is this because a value set through the set command takes priority over the setting in the TiKV cluster configuration file? Is the value set by the set command not persisted to the cluster configuration file?