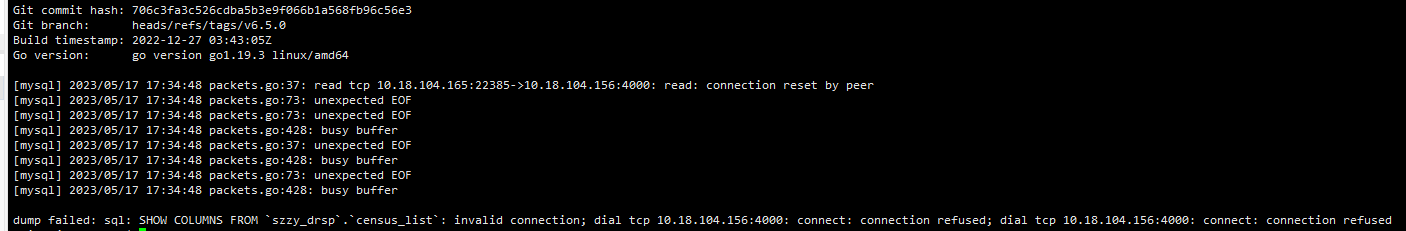

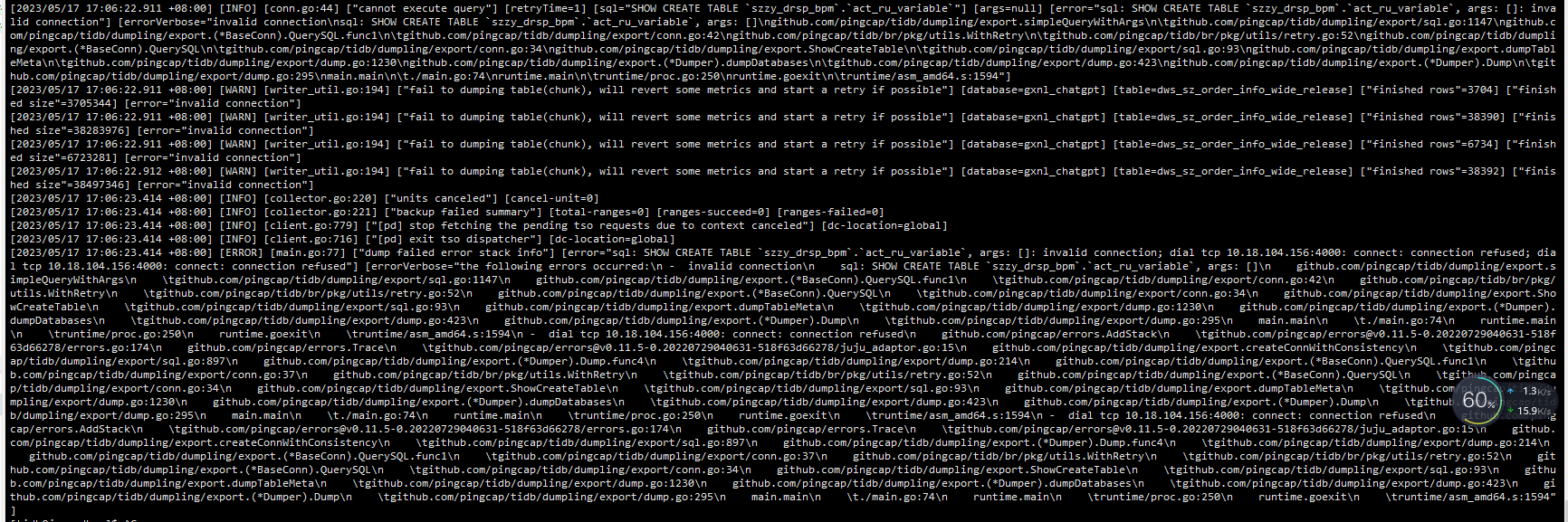

These are the only errors that now appear in the log. The service has crashed and cannot be restarted.

```
[2023/05/18 09:21:47.987 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]
[2023/05/18 09:21:52.691 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:21:57.693 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:21:57.988 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]
[2023/05/18 09:22:02.694 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:07.695 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:07.989 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]
[2023/05/18 09:22:12.696 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:17.697 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:17.991 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]
[2023/05/18 09:22:22.698 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:27.700 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
[2023/05/18 09:22:27.991 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]
[2023/05/18 09:22:32.701 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [verbose_err="Etcd(GRpcStatus(Status { code: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", source: None }))"] [err="Etcd meet error grpc request error: status: Unknown, message: \"Service was not ready: buffered service failed: load balancer discovery error: transport error: transport error\", details: , metadata: MetadataMap { headers: {} }"] [context="failed to get backup stream task"]
```
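The `backup stream meet error` warnings appear to recur about every five seconds, which would suggest a steady retry loop against etcd rather than a single failure. One quick way to check this across a longer log is to pull out the timestamps of a given message and compute the gaps between them. The following is a minimal sketch. It assumes every entry starts with the bracketed `[timestamp] [LEVEL] [file:line] ["message"]` layout shown above. The regex and the helper names `parse_entries` and `retry_intervals` are hypothetical illustrations, not part of TiKV's tooling.

```python
import re
from datetime import datetime

# Hypothetical pattern for the leading fields of each log line, assuming the
# layout shown above: [timestamp] [LEVEL] [file.rs:line] ["message"] ...
HEADER = re.compile(
    r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] '
    r'\[(?P<src>[^\]]+)\] \["(?P<msg>[^"]*)"\]'
)


def parse_entries(lines):
    """Yield (timestamp, level, source, message) for every line matching HEADER."""
    for line in lines:
        m = HEADER.match(line.strip())
        if m is None:
            continue  # skip blank lines and anything not in the expected layout
        # e.g. "2023/05/18 09:21:52.691 +08:00"; %z accepts "+08:00" on Python 3.7+
        ts = datetime.strptime(m["ts"], "%Y/%m/%d %H:%M:%S.%f %z")
        yield ts, m["level"], m["src"], m["msg"]


def retry_intervals(lines, message):
    """Return the gaps, in seconds, between consecutive entries with `message`."""
    stamps = [ts for ts, _, _, msg in parse_entries(lines) if msg == message]
    return [round((b - a).total_seconds(), 3) for a, b in zip(stamps, stamps[1:])]


if __name__ == "__main__":
    sample = [
        '[2023/05/18 09:21:52.691 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [context="failed to get backup stream task"]',
        '[2023/05/18 09:21:57.988 +08:00] [INFO] [sst_importer.rs:442] ["shrink cache by tick"] ["retain size"=0] ["shrink size"=0]',
        '[2023/05/18 09:21:57.693 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [context="failed to get backup stream task"]',
        '[2023/05/18 09:22:02.694 +08:00] [WARN] [errors.rs:155] ["backup stream meet error"] [context="failed to get backup stream task"]',
    ]
    print(retry_intervals(sample, "backup stream meet error"))  # [5.002, 5.001]
```

A roughly constant gap points to the component repeatedly retrying the same etcd request. Because the sketch uses only the standard library, it can be run directly on the node against a copy of the TiKV log file.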