Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tiup组件恢复使用 (Restoring the TiUP component)

[TiDB Usage Environment] Production Environment

[TiDB Version] v5.2.4

[Encountered Problem: Problem Phenomenon and Impact]

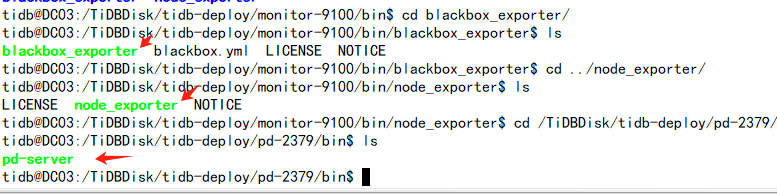

TiUP and TiKV were deployed on the same server, and that server crashed in the early morning. The TiKV instance on it can be taken offline through the other PD nodes. How can I recover the TiUP component on another node?

Can you still get into the operating system? If you can, copy the .tiup directory to another node.

Can’t access the operating system.

If you can’t even get into the operating system, you can only follow the solution in the first post. Additionally, I recommend backing up the tiup data regularly. We currently run two scheduled backups: one for the database and one for tiup.
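A scheduled tiup backup like the one described above could be sketched as follows. This is only a minimal sketch, assuming tiup keeps its state in the default ~/.tiup directory (or wherever TIUP_HOME points); BACKUP_DIR and the function names backup_tiup and restore_tiup are hypothetical and not part of tiup itself.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Archive the TiUP home directory into a timestamped tarball and print its path.
# Assumptions: TIUP_HOME defaults to ~/.tiup; BACKUP_DIR is a hypothetical
# destination and should ideally live on a different machine or shared storage.
backup_tiup() {
  local tiup_home="${TIUP_HOME:-$HOME/.tiup}"
  local backup_dir="${BACKUP_DIR:-$HOME/tiup-backups}"
  local archive
  archive="$backup_dir/tiup-$(date +%Y%m%d-%H%M%S).tar.gz"
  mkdir -p "$backup_dir"
  # -C stores paths relative to the parent directory, so the archive
  # unpacks as the directory's own name (".tiup" by default).
  tar -czf "$archive" -C "$(dirname "$tiup_home")" "$(basename "$tiup_home")"
  echo "$archive"
}

# Unpack an archive made by backup_tiup under the given parent directory
# (defaults to the current user's home) on the replacement control machine.
restore_tiup() {
  local archive="$1"
  local parent="${2:-$HOME}"
  mkdir -p "$parent"
  tar -xzf "$archive" -C "$parent"
}
```

Calling backup_tiup from a cron job and shipping the archive to another host is one way to do it. The directory also holds the SSH keys tiup uses to reach the cluster nodes, so the archive should be stored with restricted permissions.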

I remember you can use the same YAML deployment file to deploy on other machines. You can test it out.

Can’t you just install tiup on the new machine directly?

I found the initial topology.yaml configuration file. How do I redeploy it?

Just redeploy it the same way as before with tiup, and remember to rename the previously deployed instance.

Download an offline package and deploy it on the new node, right?

Hello, after reading this post, I have three questions for you to check:

- In the second step, do we need to mv the startup files on all nodes?

- The original Grafana, monitoring, and alertmanager were also on the crashed server. Can the latest topology.yaml file specify deployment on other servers?

- For the TiKV node configuration on the crashed server, do we no longer need to add it to the configuration file?

So, all the startup services of the original instance have been renamed, right? If the TiKV node on the server is down, does it mean it doesn’t need to be written anymore?

You can directly deploy the new node.

No need to rename the instance

Redeploy and then restore the cluster data from the backup.

My understanding is that the failed TiKV node still needs to appear in the configuration file. After configuring it, run the tiup cluster scale-in --force command to forcibly remove the node, so that no stale information about it is left behind.
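The forced scale-in mentioned above might look like the sketch below. The cluster name and the host:port passed in are placeholders, and remove_failed_tikv is a hypothetical wrapper rather than a tiup command. It only prints the command by default, because --force removes the node without waiting for its data to be migrated.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Build the forced scale-in command for one failed node.
# $1: cluster name, $2: node ID in host:port form as shown by `tiup cluster display`.
# With DRY_RUN=1 (the default) the command is only printed for review;
# set DRY_RUN=0 to actually execute it.
remove_failed_tikv() {
  local cluster="$1" node="$2"
  local cmd=(tiup cluster scale-in "$cluster" --node "$node" --force)
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `remove_failed_tikv tidb-prod 10.0.1.5:20160` prints the command for a hypothetical cluster and address. Before running it for real, it is worth confirming that the remaining TiKV nodes still hold enough replicas of every Region.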

Make a backup every time a change occurs.