Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: 缩容Pump (Scaling in Pump)

【TiDB Usage Environment】Production Environment

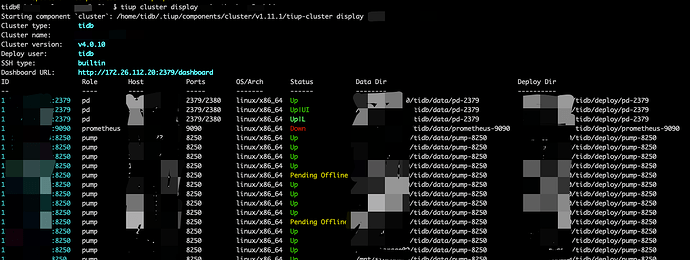

【TiDB Version】v4.0.10

【Reproduction Path】tiup cluster scale-in xxx -N xxx.xx.xx.xx

【Problem Encountered: Symptoms and Impact】

Problem Description:

I used tiup cluster scale-in to scale in two Pump nodes, and the command reported success. However, tiup cluster display shows both scaled-in Pump nodes as Pending Offline. According to binlogctl, the same nodes are still online, and their MaxCommitTS and UpdateTime have not changed since the scale-in. The Pump log contains WARN entries like this: [WARN] [server.go:868] ["Waiting for drainer to consume binlog"] ["Minimum Drainer MaxCommitTS"=437208609048232751] ["Need to reach maxCommitTS"=441964881535041564].

Here is a summary of the operation timeline and results:

Scale in 2 Pump nodes of the xxx cluster, hereafter referred to as pump1 and pump2

Management machine, 2023-06-05 18:50: tiup cluster scale-in xxx -N xxx.xx.xx.xx:8250,xxx.xx.xx.xx:8250

Command Result: Scaled cluster xxx in successfully

Check the xxx cluster topology

Management machine, 2023-06-05 18:51: tiup cluster display xxx

Command Result: the status of pump1 and pump2 is Pending Offline

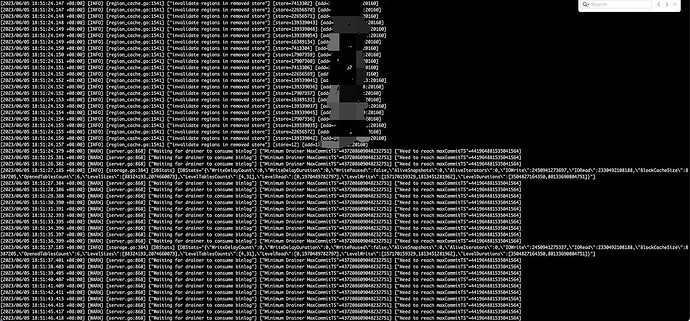

Check the pump1 log

Pump1 server, 2023-06-05 18:51: tail -100 /tidb/deploy/pump-8250/log/pump.log

From then on, pump1 logs the following WARN:

[2023/06/05 18:51:57.440 +08:00] [WARN] [server.go:868] ["Waiting for drainer to consume binlog"] ["Minimum Drainer MaxCommitTS"=437208609048232751] ["Need to reach maxCommitTS"=441964881535041564]

The timestamp (TSO) 441964881535041564 corresponds to 2023-06-05 18:50:25.094 +0800 CST.
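The conversion above can be reproduced with a minimal Python sketch. It assumes the standard PD TSO layout, where the upper bits hold physical time in milliseconds since the Unix epoch and the low 18 bits hold a logical counter. The helper name decode_tso and the fixed +0800 offset are illustrative only and are not part of any TiDB tool.

```python
from datetime import datetime, timedelta, timezone

# Assumed PD TSO layout: (physical_ms << 18) | logical
LOGICAL_BITS = 18
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
CST = timezone(timedelta(hours=8))  # +0800, the offset shown in the logs above


def decode_tso(tso: int):
    """Hypothetical helper: split a TSO into (wall-clock time at +0800, logical counter)."""
    physical_ms = tso >> LOGICAL_BITS          # milliseconds since the Unix epoch
    logical = tso & ((1 << LOGICAL_BITS) - 1)  # low 18 bits
    # Integer millisecond arithmetic avoids float rounding in fromtimestamp().
    wall = (EPOCH + timedelta(milliseconds=physical_ms)).astimezone(CST)
    return wall, logical


if __name__ == "__main__":
    for ts in (441964881535041564, 441964878140277275):
        wall, logical = decode_tso(ts)
        print(ts, wall.isoformat(timespec="milliseconds"), "logical:", logical)
```

For the two TSOs in this post, this prints 2023-06-05T18:50:25.094+08:00 (logical 28) and 2023-06-05T18:50:12.144+08:00 (logical 539), which match the times given here.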

Check the status of the scaled-in Pump nodes with binlogctl

Management machine, 2023-06-05 18:51: /home/tidb/.tiup/components/ctl/v4.0.10/binlogctl -pd-urls=http://xxx.xx.xx.xx:2379 -cmd pumps | grep -E 'xxx.xx.xx.xx|xxx.xx.xx.xx'

Command Result:

[2023/06/05 18:51:00.155 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] [node="{NodeID: xxx.xx.xx.xx:8250, Addr: xxx.xx.xx.xx:8250, State: online, MaxCommitTS: 441964878140277275, UpdateTime: 2023-06-05 18:50:12 +0800 CST}"]

[2023/06/05 18:51:00.155 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] [node="{NodeID: xxx.xx.xx.xx:8250, Addr: xxx.xx.xx.xx:8250, State: online, MaxCommitTS: 441964878140277272, UpdateTime: 2023-06-05 18:50:11 +0800 CST}"]

binlogctl shows pump1's MaxCommitTS as 441964878140277275.

The MaxCommitTS of the pump1 instance, 441964878140277275, corresponds to 2023-06-05 18:50:12.144 +0800 CST.
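To re-check repeatedly whether the scaled-in nodes are stalled, the binlogctl output can be parsed instead of read by eye. The sketch below assumes the "query node" line format shown above stays the same. NODE_RE and parse_pump_nodes are hypothetical names, and the regex is written against that sample output only.

```python
import re

# Written against the "query node" lines printed by binlogctl -cmd pumps above.
NODE_RE = re.compile(
    r"NodeID: (?P<node_id>[^,]+), Addr: (?P<addr>[^,]+), "
    r"State: (?P<state>\w+), MaxCommitTS: (?P<max_commit_ts>\d+), "
    r"UpdateTime: (?P<update_time>[^}]+)\}"
)


def parse_pump_nodes(output: str) -> list:
    """Return one dict per node description found in the binlogctl output."""
    nodes = []
    for match in NODE_RE.finditer(output):
        node = match.groupdict()
        node["max_commit_ts"] = int(node["max_commit_ts"])  # TSO as an integer
        nodes.append(node)
    return nodes


SAMPLE = (
    '[2023/06/05 18:51:00.155 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] '
    '[node="{NodeID: xxx.xx.xx.xx:8250, Addr: xxx.xx.xx.xx:8250, State: online, '
    'MaxCommitTS: 441964878140277275, UpdateTime: 2023-06-05 18:50:12 +0800 CST}"]'
)

if __name__ == "__main__":
    for node in parse_pump_nodes(SAMPLE):
        print(node["node_id"], node["state"], node["max_commit_ts"], node["update_time"])
```

Running this against successive binlogctl outputs and comparing max_commit_ts between runs would surface the same symptom described here: for a scaled-in node, the value stays fixed while State remains online.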

Description of the Symptoms:

Using tiup, I scaled in the 2 Pumps (around 2023-06-05 18:50:00). The scale-in command returned success, but the cluster topology shows both Pump nodes as Pending Offline, which means they are still in the process of going offline.

From then on, the log of the scaled-in pump1 node shows ["Need to reach maxCommitTS"=441964881535041564]. This indicates that the binlogs on pump1 must be consumed up to 441964881535041564 (2023-06-05 18:50:25.094) before the node can go offline.

According to binlogctl, pump1's MaxCommitTS is 441964878140277275 (2023-06-05 18:50:12.144). This suggests that pump1 has already been removed from the Pump cluster, so no more binlogs are written to it and its MaxCommitTS no longer changes. However, because this MaxCommitTS is smaller than the consumption point the log requires, 441964881535041564 (2023-06-05 18:50:25.094), the node stays stuck going offline. After 2 hours of waiting, the pump1 log still shows "Waiting for drainer to consume binlog", and binlogctl still reports the same MaxCommitTS and UpdateTime for the scaled-in Pump instance.
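The WARN line compares two TSOs: the minimum MaxCommitTS across drainers and the maxCommitTS the Pump says it needs to reach. Below is a simplified model of that wait, sketched in Python. It is inferred only from the field names in the log message, not from Pump's source code. The function names are hypothetical, and the 18-bit logical field follows the standard PD TSO layout.

```python
LOGICAL_BITS = 18  # PD TSO layout: (physical_ms << 18) | logical


def physical_ms(tso: int) -> int:
    """Physical part of a TSO, in milliseconds since the Unix epoch."""
    return tso >> LOGICAL_BITS


def drainers_caught_up(min_drainer_max_commit_ts: int, need_to_reach: int) -> bool:
    """Simplified model: the Pump keeps waiting while the slowest drainer's
    MaxCommitTS is still below the TSO it needs to reach."""
    return min_drainer_max_commit_ts >= need_to_reach


# Values copied from pump1's WARN line.
MIN_DRAINER_MAX_COMMIT_TS = 437208609048232751
NEED_TO_REACH = 441964881535041564

if __name__ == "__main__":
    lag_ms = physical_ms(NEED_TO_REACH) - physical_ms(MIN_DRAINER_MAX_COMMIT_TS)
    print("caught up:", drainers_caught_up(MIN_DRAINER_MAX_COMMIT_TS, NEED_TO_REACH))
    print("lag (days):", round(lag_ms / 86_400_000, 1))
```

With the values from the log, this prints "caught up: False" and a lag of 210.0 days. In other words, the "Minimum Drainer MaxCommitTS" in the WARN line is about 210 days older than the target. Under this model, it may be worth checking which drainer reports that checkpoint (for example, with binlogctl -cmd drainers).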

My Understanding:

- Each binlog is stored on only one Pump, so when a Pump is scaled in, all binlogs on that node must be consumed before the service can be stopped and taken offline; otherwise, binlogs would be lost.

- During scale-in, the Pump node being taken offline is first removed from the Pump cluster so that no new binlogs are written to it. Then the binlogs on it are consumed, and finally the Pump is taken offline.

Questions about Scaling in Pump:

- Is it not possible to scale in a Pump node directly with tiup?

- Is the correct way to scale in a Pump node to run binlogctl offline-pump first and then tiup cluster scale-in?