Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tikv的扩容 (Scaling out TiKV)

【TiDB Usage Environment】Production Environment / Testing / PoC

【TiDB Version】

【Reproduction Path】Previously, I was running one PD, one TiDB, and one TiKV for testing. The TiKV disk is now full, so I expanded with 2 more disks. The expansion succeeded, but usage on the full disk did not decrease.

【Encountered Problem: Problem Phenomenon and Impact】

How do I get TiKV to rebalance its data? Does the system balance automatically, or do I need to run a command to trigger the rebalancing?

【Resource Configuration】

【Attachments: Screenshots/Logs/Monitoring】

You need to scale out TiKV nodes. Expanding the disk does not help.

I did scale out nodes; maybe I didn't make that clear.

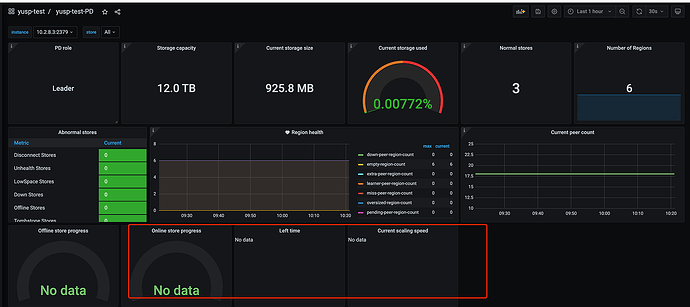

Check whether the lines in these two panels (Overview -> TiKV) are level with one another. If they are not, migration is still in progress; if they are, migration is complete. Normally, the leader and region counts end up balanced across the stores.
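If you would rather check this from the command line than from the dashboard, the same comparison can be sketched in a few lines of Python. This assumes you feed it the JSON printed by the `store` command of pd-ctl, and that each entry carries `store.address`, `store.state_name`, `status.leader_count`, and `status.region_count`. The `balance_report` helper, the sample addresses, and the 10% tolerance are all made up for illustration. PD balances by score rather than raw count, so stores with different disk sizes may never reach equal counts.

```python
import json

def balance_report(store_json, tolerance=0.1):
    """Compare leader/region counts across Up stores.

    `store_json` is assumed to be the JSON text printed by pd-ctl `store`.
    `tolerance` is an arbitrary illustrative threshold, not a PD setting.
    """
    data = json.loads(store_json)
    report = {}
    for metric in ("leader_count", "region_count"):
        counts = {
            s["store"]["address"]: s["status"].get(metric, 0)
            for s in data.get("stores", [])
            if s["store"].get("state_name") == "Up"
        }
        if not counts:
            raise ValueError("no Up stores found in the input")
        hi, lo = max(counts.values()), min(counts.values())
        # "Level" here means the gap is within `tolerance` of the largest count.
        level = hi == 0 or (hi - lo) / hi <= tolerance
        report[metric] = {"counts": counts, "level": level}
    return report

# Hypothetical sample: one old, full store and two freshly added ones.
SAMPLE = json.dumps({
    "count": 3,
    "stores": [
        {"store": {"id": 1, "address": "10.0.0.1:20160", "state_name": "Up"},
         "status": {"leader_count": 90, "region_count": 90}},
        {"store": {"id": 4, "address": "10.0.0.2:20160", "state_name": "Up"},
         "status": {"leader_count": 6, "region_count": 6}},
        {"store": {"id": 5, "address": "10.0.0.3:20160", "state_name": "Up"},
         "status": {"leader_count": 4, "region_count": 4}},
    ],
})

if __name__ == "__main__":
    print(json.dumps(balance_report(SAMPLE), indent=2))
```

Run it repeatedly while migration is in progress; the per-store counts should move toward each other over time.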

Follow the same troubleshooting approach as with hotspots and perform the balance operation.

One of the TiKV disks is a bit small. Add another TiKV node, then scale in the TiKV that sits on the PD node.

Did you originally set the replica count to 1? Otherwise, a cluster with a single TiKV node would not have been able to run. If so, you can scale in the old node right away. With several TiKV nodes on the same machine, it is very hard to get data balanced evenly across all three.
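The reasoning behind "you can scale in the old node" can be written down as a small check. This is only a sketch under one assumption: PD can drain a store only if the stores that remain can still hold `max-replicas` copies of every Region (the value reported by `config show replication` in pd-ctl). The `can_scale_in` helper is hypothetical, and it ignores disk capacity and placement labels, which also matter in practice.

```python
def can_scale_in(up_stores, max_replicas, removing=1):
    """Return True if enough Up stores remain to hold every replica.

    up_stores:    number of TiKV stores currently in the Up state
    max_replicas: replica count configured in PD
    removing:     how many stores you plan to scale in
    """
    return up_stores - removing >= max_replicas

if __name__ == "__main__":
    # Three stores with 1 replica: removing the old store leaves two, which is enough.
    print(can_scale_in(up_stores=3, max_replicas=1))
    # Three stores with 3 replicas: removing one leaves too few stores.
    print(can_scale_in(up_stores=3, max_replicas=3))
```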

For a single node, different directories will suffice.

You can first check the number of replicas (for example, `SHOW TABLE test.t REGIONS`).

You can also look at the scheduling activity on the PD monitoring page to see its current state.