Thank you for your attention~

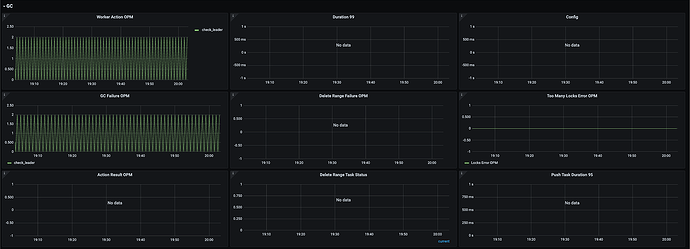

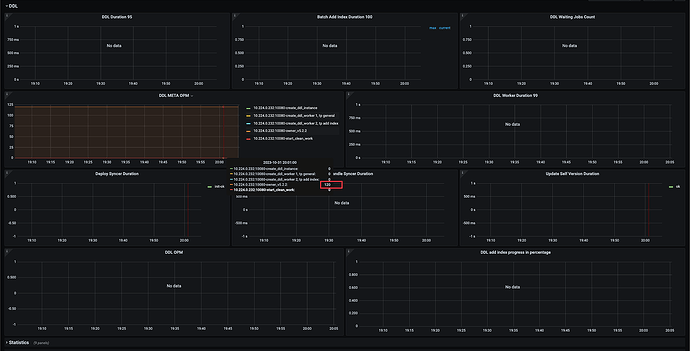

This is what the Grafana dashboard currently shows.

I don’t quite understand these metrics. It looks as if most of the job latency distribution falls into the high-latency buckets.
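A note on reading the raw dump that follows: in the Prometheus text format, each `_bucket` line of a histogram is cumulative, so the value at `le="x"` counts every observation that took at most `x` seconds, and `_sum` divided by `_count` gives the mean duration. The sketch below is only a reading aid, not anything from TiDB itself. It subtracts neighbouring buckets to recover per-bucket counts, using a few `get_ddl_job` lines copied from the dump; the names `SAMPLE`, `BUCKET_RE` and `per_bucket_counts` are made up for illustration.

```python
import re

# A few lines copied from the metrics dump in this post
# (get_ddl_job, result="err"); the intermediate buckets are omitted.
SAMPLE = '''
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.0005"} 7026
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.001"} 13168
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.002"} 13221
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.004"} 13237
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.008"} 13240
tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="+Inf"} 13240
'''

# Captures the upper bound (le) and the cumulative count of a bucket line.
BUCKET_RE = re.compile(r'_bucket\{.*le="([^"]+)"\}\s+(\d+)')


def per_bucket_counts(text):
    """Turn cumulative bucket values into per-bucket counts.

    Assumes all bucket lines in `text` belong to one series
    (same metric name and same labels apart from `le`).
    """
    cumulative = []
    for line in text.splitlines():
        match = BUCKET_RE.search(line)
        if match:
            bound = match.group(1)
            le = float("inf") if bound == "+Inf" else float(bound)
            cumulative.append((le, int(match.group(2))))
    cumulative.sort()

    counts = []
    previous = 0
    for le, count in cumulative:
        # Observations that fell between the previous bound and this one.
        counts.append((le, count - previous))
        previous = count
    return counts


for le, n in per_bucket_counts(SAMPLE):
    print(f"<= {le} s: {n}")

# Mean duration from the _sum and _count lines of the same series.
mean_seconds = 6.707527507000024 / 13240
print(f"mean: {mean_seconds * 1000:.2f} ms")
```

For this one series, 7026 calls fall at or below 0.5 ms, 6142 between 0.5 ms and 1 ms, and only 72 above that, with a mean of roughly 0.51 ms. The identical value in every larger bucket does not mean calls took that long; it is just the running total being repeated.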

# HELP tidb_ddl_deploy_syncer_duration_seconds Bucketed histogram of processing time (s) of deploy syncer

# TYPE tidb_ddl_deploy_syncer_duration_seconds histogram

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.001"} 0

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.002"} 0

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.004"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.008"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.016"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.032"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.064"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.128"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.256"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="0.512"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="1.024"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="2.048"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="4.096"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="8.192"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="16.384"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="32.768"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="65.536"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="131.072"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="262.144"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="524.288"} 1

tidb_ddl_deploy_syncer_duration_seconds_bucket{result="ok",type="init",le="+Inf"} 1

tidb_ddl_deploy_syncer_duration_seconds_sum{result="ok",type="init"} 0.002846399

tidb_ddl_deploy_syncer_duration_seconds_count{result="ok",type="init"} 1

# HELP tidb_ddl_update_self_ver_duration_seconds Bucketed histogram of processing time (s) of update self version

# TYPE tidb_ddl_update_self_ver_duration_seconds histogram

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.001"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.002"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.004"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.008"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.016"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.032"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.064"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.128"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.256"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="0.512"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="1.024"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="2.048"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="4.096"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="8.192"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="16.384"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="32.768"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="65.536"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="131.072"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="262.144"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="524.288"} 1

tidb_ddl_update_self_ver_duration_seconds_bucket{result="ok",le="+Inf"} 1

tidb_ddl_update_self_ver_duration_seconds_sum{result="ok"} 0.000376232

tidb_ddl_update_self_ver_duration_seconds_count{result="ok"} 1

# HELP tidb_ddl_worker_operation_total Counter of creating ddl/worker and isowner.

# TYPE tidb_ddl_worker_operation_total counter

tidb_ddl_worker_operation_total{type="create_ddl_instance"} 1

tidb_ddl_worker_operation_total{type="create_ddl_worker 1, tp general"} 1

tidb_ddl_worker_operation_total{type="create_ddl_worker 2, tp add index"} 1

tidb_ddl_worker_operation_total{type="owner_v5.2.2"} 13258

tidb_ddl_worker_operation_total{type="start_clean_work"} 1

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.0005"} 7026

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.001"} 13168

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.002"} 13221

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.004"} 13237

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.008"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.016"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.032"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.064"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.128"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.256"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="0.512"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="1.024"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="2.048"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="4.096"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="8.192"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="16.384"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="32.768"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="65.536"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="131.072"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="262.144"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="524.288"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="1048.576"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="2097.152"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="4194.304"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="8388.608"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="16777.216"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="33554.432"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="67108.864"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="134217.728"} 13240

tidb_meta_operation_duration_seconds_bucket{result="err",type="get_ddl_job",le="+Inf"} 13240

tidb_meta_operation_duration_seconds_sum{result="err",type="get_ddl_job"} 6.707527507000024

tidb_meta_operation_duration_seconds_count{result="err",type="get_ddl_job"} 13240

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.0005"} 16

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.001"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.002"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.004"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.008"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.016"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.032"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.064"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.128"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.256"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="0.512"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="1.024"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="2.048"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="4.096"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="8.192"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="16.384"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="32.768"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="65.536"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="131.072"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="262.144"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="524.288"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="1048.576"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="2097.152"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="4194.304"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="8388.608"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="16777.216"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="33554.432"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="67108.864"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="134217.728"} 18

tidb_meta_operation_duration_seconds_bucket{result="ok",type="get_ddl_job",le="+Inf"} 18

tidb_meta_operation_duration_seconds_sum{result="ok",type="get_ddl_job"} 0.007464113

tidb_meta_operation_duration_seconds_count{result="ok",type="get_ddl_job"} 18

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.0005"} 0

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.001"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.002"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.004"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.008"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.016"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.032"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.064"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.128"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.256"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="0.512"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9ed6-b7630eebcd39",le="1.024"} 1

tidb_owner_new_session_duration_seconds_bucket{result="ok",type="[ddl-syncer] /tidb/ddl/all_schema_versions/72a55cc4-fa94-4dd2-9