Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

[TiDB Usage Environment] Production Environment

[TiDB Version] 5.2.2

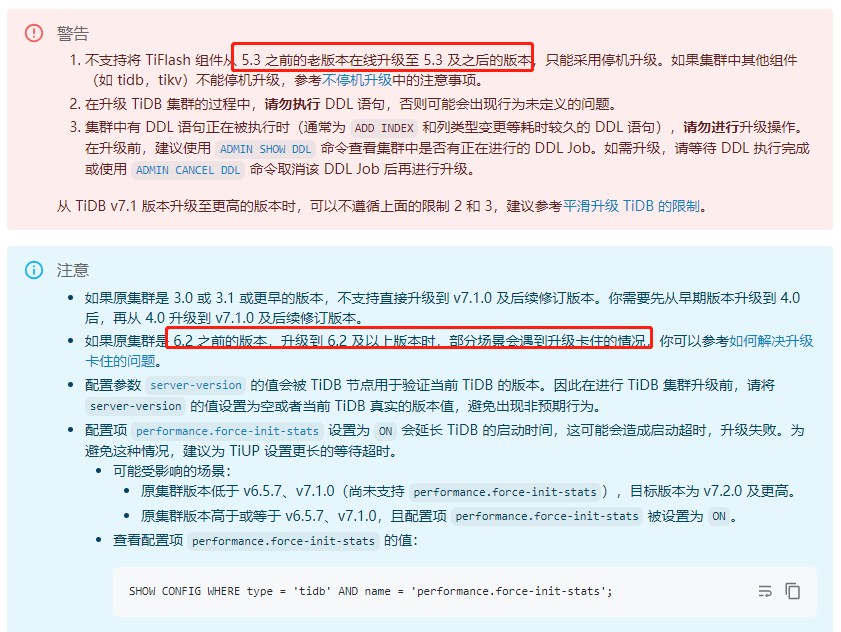

The production cluster holds a large amount of data and serves core business, and there are currently no spare resources to set up a mirror cluster for the upgrade. I therefore want to upgrade the cluster online, directly from v5.2.2 to v7.1.0. What should I pay attention to?

Current TiDB cluster information is as follows:

Cluster type: tidb

Cluster name: social-tidb

Cluster version: v5.2.2

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.3.59:2379/dashboard

Grafana URL: http://192.168.3.15:3000

| ID | Role | Host | Ports | OS/Arch | Status | Data Dir | Deploy Dir |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 192.168.3.21:9093 | alertmanager | 192.168.3.21 | 9093/9094 | linux/x86_64 | Up | /data/tidb/data.alertmanager | /data/tidb |
| 192.168.3.131:8300 | cdc | 192.168.3.131 | 8300 | linux/x86_64 | Up | /data/tidb-data/cdc-8300 | /opt/app/tidb-deploy/cdc-8300 |
| 192.168.3.132:8300 | cdc | 192.168.3.132 | 8300 | linux/x86_64 | Up | /home/tidb/deploy/cdc-8300/data | /home/tidb/deploy/cdc-8300 |
| 192.168.3.128:8249 | drainer | 192.168.3.128 | 8249 | linux/x86_64 | Up | /data/tidb-data/drainer-8249 | /opt/app/tidb-deploy/drainer-8249 |
| 192.168.3.15:3000 | grafana | 192.168.3.15 | 3000 | linux/x86_64 | Up | - | /opt/app/tidb-deploy/grafana-3000 |
| 192.168.3.57:2379 | pd | 192.168.3.57 | 2379/2380 | linux/x86_64 | Up | /data/tidb/data.pd | /data/tidb |
| 192.168.3.58:2379 | pd | 192.168.3.58 | 2379/2380 | linux/x86_64 | Up\|L | /data/tidb/data.pd | /data/tidb |
| 192.168.3.59:2379 | pd | 192.168.3.59 | 2379/2380 | linux/x86_64 | Up\|UI | /data/tidb/data.pd | /data/tidb |
| 192.168.3.25:9090 | prometheus | 192.168.3.25 | 9090 | linux/x86_64 | Up | /data/tidb/prometheus2.0.0.data.metrics | /data/tidb |
| 192.168.3.134:8250 | pump | 192.168.3.134 | 8250 | linux/x86_64 | Up | /data/tidb-data/pump-8250 | /opt/app/tidb-deploy/pump-8250 |
| 192.168.3.135:8250 | pump | 192.168.3.135 | 8250 | linux/x86_64 | Up | /data/tidb-data/pump-8250 | /opt/app/tidb-deploy/pump-8250 |
| 192.168.1.32:4000 | tidb | 192.168.1.32 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb |
| 192.168.2.176:4000 | tidb | 192.168.2.176 | 4000/10080 | linux/x86_64 | Up | - | /data/tidb |
| 192.168.1.105:20160 | tikv | 192.168.1.105 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.178:20160 | tikv | 192.168.1.178 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.179:20160 | tikv | 192.168.1.179 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.74:20160 | tikv | 192.168.1.74 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.93:20160 | tikv | 192.168.1.93 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.94:20160 | tikv | 192.168.1.94 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.98:20160 | tikv | 192.168.1.98 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
| 192.168.1.99:20160 | tikv | 192.168.1.99 | 20160/20180 | linux/x86_64 | Up | /data1/tidb-data | /opt/app/tidb-deploy/tikv-20160 |
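For anyone reviewing this topology before suggesting an upgrade path, it may help to summarize the listing by role and confirm that nothing is down. The following is only a minimal sketch, assuming the rows are pasted in as whitespace-separated text in the same eight-column layout as above. `parse_rows`, `count_roles`, and `not_up` are hypothetical helper names for illustration and are not part of TiUP; only a few sample rows from the listing are included.

```python
from collections import Counter

# A few rows copied from the topology listing above (assumption: plain text,
# eight whitespace-separated columns per instance).
SAMPLE_ROWS = """\
192.168.3.131:8300 cdc 192.168.3.131 8300 linux/x86_64 Up /data/tidb-data/cdc-8300 /opt/app/tidb-deploy/cdc-8300
192.168.3.128:8249 drainer 192.168.3.128 8249 linux/x86_64 Up /data/tidb-data/drainer-8249 /opt/app/tidb-deploy/drainer-8249
192.168.3.58:2379 pd 192.168.3.58 2379/2380 linux/x86_64 Up|L /data/tidb/data.pd /data/tidb
192.168.3.134:8250 pump 192.168.3.134 8250 linux/x86_64 Up /data/tidb-data/pump-8250 /opt/app/tidb-deploy/pump-8250
192.168.1.32:4000 tidb 192.168.1.32 4000/10080 linux/x86_64 Up - /data/tidb
192.168.1.105:20160 tikv 192.168.1.105 20160/20180 linux/x86_64 Up /data1/tidb-data /opt/app/tidb-deploy/tikv-20160
"""

FIELDS = ("id", "role", "host", "ports", "os_arch", "status", "data_dir", "deploy_dir")


def parse_rows(text):
    """Turn each eight-column line into a dict; skip anything else."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != len(FIELDS):
            # Blank lines and the header ("Data Dir" and "Deploy Dir" contain
            # spaces, so the header splits into ten tokens) are ignored.
            continue
        rows.append(dict(zip(FIELDS, parts)))
    return rows


def count_roles(rows):
    """Count instances per role (tikv, pd, pump, ...)."""
    return Counter(row["role"] for row in rows)


def not_up(rows):
    """Return the IDs of instances whose status does not start with 'Up'."""
    return [row["id"] for row in rows if not row["status"].startswith("Up")]


if __name__ == "__main__":
    rows = parse_rows(SAMPLE_ROWS)
    for role, n in sorted(count_roles(rows).items()):
        print(f"{role}: {n}")
    print("instances not Up:", not_up(rows))
```

Statuses such as `Up|L` and `Up|UI` are treated as up because they start with `Up`; the suffix is kept in the `status` field rather than interpreted.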