Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: MySQL主从日志落盘时间测试

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version]

[Reproduction Path] What operations were performed when the issue occurred

[Encountered Issue: Issue Phenomenon and Impact]

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots / Logs / Monitoring]

Does anyone have experience with testing the time difference when MySQL primary and secondary databases write to disk? I want to see how much time this part takes, especially for remote disaster recovery RPO time.

It definitely depends on your actual environment, it’s not fixed.

Parse binlog and look at these two timestamps: the difference between original_commit_timestamp and immediate_commit_timestamp can be found here:

There are too many variables, such as host performance, network bandwidth…

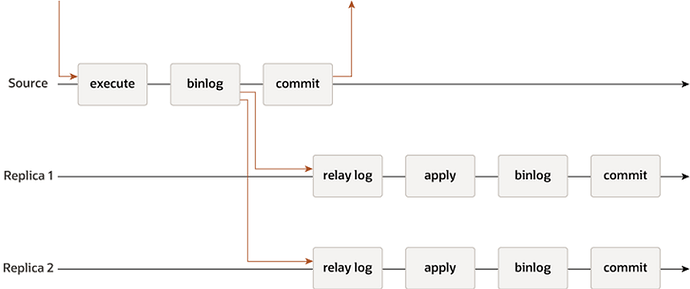

Analysis of binlog implementation

Your solution actually tests the time it takes for the data to be written to the slave database.

We now want to test the time it takes for the Relay log to be written to disk. Generally, as long as the data reaches the relay log, the local MySQL thread will definitely continue to write to its own database, regardless of any anomalies in the master database.

There is a testing plan, and specific scenarios will be handled accordingly. A standard time is not required.

To test the relay log time, you can write to the binlog to verify that the data has already been transferred to the remote location.

Parsing relay-log is the time when the relay is written to disk.

Parsing slave binlog is the time when the log is written to disk after being applied.

The time test varies depending on the architecture: for example, delayed master-slave, asynchronous master-slave, and real-time master-slave. They are all different. Test according to your requirements.

Mark it, and study it later.

I just don’t know how to test it. It’s not about standard data, but is there any testing methodology for the relay log’s disk write time (excluding playback)?

Good idea, integrating it into the self-built dashboard. Leaders tend to like these kinds of metrics.

In fact, for remote disaster recovery, the network bandwidth has a greater impact on the RPO. If you only consider disk writes, then it depends on disk performance. Looking at it in isolation is meaningless.

This RPO is also related to your replication type:

Asynchronous Replication

Semi-Synchronous Replication

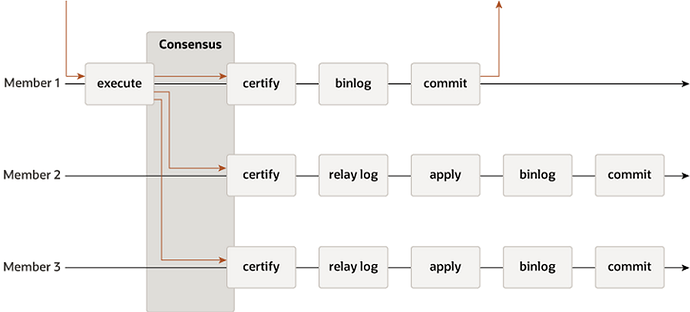

MGR

You also need to consider the impact on the primary database. For performance comparisons of various modes, you can refer to:

https://dev.mysql.com/blog-archive/semi-synchronous-replication-performance-in-mysql-5-7/

https://dev.mysql.com/blog-archive/an-overview-of-the-group-replication-performance/

Parsing binlog, you can look at the difference between these two timestamps: original_commit_timestamp and immediate_commit_timestamp.