Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: br增量备份数据量爆增300倍,请教下原理,还是触发了BUG?

【TiDB Usage Environment】Production

【TiDB Version】

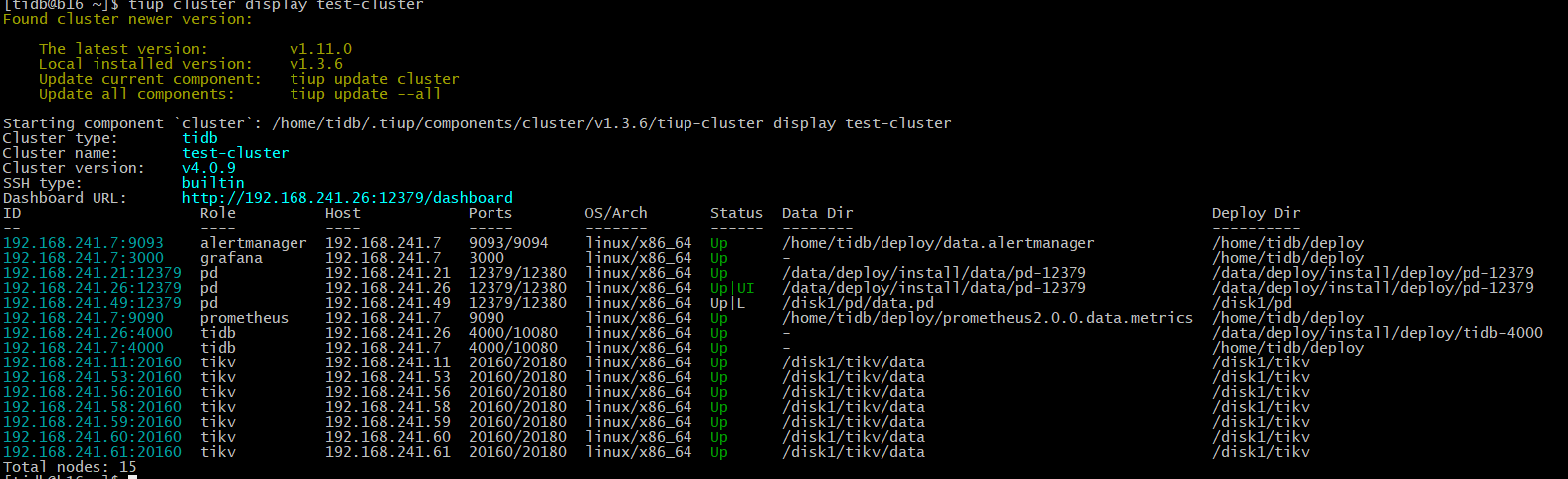

Cluster version: v4.0.9

【Problem Phenomenon and Impact】

The environment is: full backup on the 1st of every month, and incremental backup based on the full backup of the 1st every day thereafter.

Normally, the daily incremental data is about 5G.

Suddenly one day, the data volume increased significantly. Normally, it should take about 1 hour to complete the backup. But today, the backup has not been completed after a whole day and night. The backup data volume reached 3.5T (backup log progress is only 72%), and the process has now been terminated due to the long duration.

What happened? Or under what circumstances would this happen? Because our data modification volume is not that large, I can’t figure it out.

Also, the BR backup logs look normal. Is there really such a large amount of data? Why is the speed much faster when I redo the incremental backup the next day?

【Attachment】

The backup log file is too large, so I have extracted the last part of the log as follows:

[2022/10/31 10:54:15.956 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e2b841983] [EndKey=7480000000000008b65f728000000e2b8872a8]

[2022/10/31 10:54:15.982 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e3c8421ba] [EndKey=7480000000000008b65f728000000e3c867304]

[2022/10/31 10:54:16.010 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddc683a87] [EndKey=7480000000000008b65f728000000ddc6ab9c9]

[2022/10/31 10:54:16.010 +08:00] [INFO] [rtree.go:136] [“delete overlapping range”] [StartKey=7480000000000008b65f728000000ddc683a87] [EndKey=7480000000000008b65f728000000ddc6ab9c9]

[2022/10/31 10:54:16.131 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddd10baef] [EndKey=7480000000000008b65f728000000ddd13400f]

[2022/10/31 10:54:16.171 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e04465be1] [EndKey=7480000000000008b65f728000000e0449f706]

[2022/10/31 10:54:16.179 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e305724aa] [EndKey=7480000000000008b65f728000000e305ee961]

[2022/10/31 10:54:16.203 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddb94a591] [EndKey=7480000000000008b65f728000000ddb9a81e3]

[2022/10/31 10:54:16.205 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e3d99fbae] [EndKey=7480000000000008b65f728000000e3d9bc210]

[2022/10/31 10:54:16.279 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e06f74a6a] [EndKey=7480000000000008b65f728000000e06fd5e73]

[2022/10/31 10:54:16.302 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e4339ac84] [EndKey=7480000000000008b65f728000000e433e87df]

[2022/10/31 10:54:16.303 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e0ca19883] [EndKey=7480000000000008b65f728000000e0ca4d1e9]

[2022/10/31 10:54:16.305 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000dfa79fdf7] [EndKey=7480000000000008b65f728000000dfa855e78]

[2022/10/31 10:54:16.309 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e2c5ab32d] [EndKey=7480000000000008b65f728000000e2c5d70b8]

[2022/10/31 10:54:16.334 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e07a61d42] [EndKey=7480000000000008b65f728000000e07aa05e6]

[2022/10/31 10:54:16.337 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e404f346a] [EndKey=7480000000000008b65f728000000e4057f4a7]

[2022/10/31 10:54:16.354 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e3e82ae44] [EndKey=7480000000000008b65f728000000e3e855d8c]

[2022/10/31 10:54:16.368 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e2e1592db] [EndKey=7480000000000008b65f728000000e2e198fab]

[2022/10/31 10:54:16.381 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e4691b5f6] [EndKey=7480000000000008b65f728000000e469417b5]

[2022/10/31 10:54:16.386 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e0366a4a6] [EndKey=7480000000000008b65f728000000e03694a76]

[2022/10/31 10:54:16.393 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddfd61e13] [EndKey=7480000000000008b65f728000000ddfde9c7f]

[2022/10/31 10:54:16.393 +08:00] [INFO] [rtree.go:136] [“delete overlapping range”] [StartKey=7480000000000008b65f728000000ddfd61e13] [EndKey=7480000000000008b65f728000000ddfde9c7f]

[2022/10/31 10:54:16.411 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e05ed2151] [EndKey=7480000000000008b65f728000000e05ee8a07]

[2022/10/31 10:54:16.425 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddb48ae17] [EndKey=7480000000000008b65f728000000ddb4bf69d]

[2022/10/31 10:54:16.450 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e45d771f9] [EndKey=7480000000000008b65f728000000e45d9f35a]

[2022/10/31 10:54:16.460 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000de06f9be6] [EndKey=7480000000000008b65f728000000de0794584]

[2022/10/31 10:54:16.476 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e06af1e41] [EndKey=7480000000000008b65f728000000e06b41f67]

[2022/10/31 10:54:16.485 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e2ef529a9] [EndKey=7480000000000008b65f728000000e2f01c67f]

[2022/10/31 10:54:16.494 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e06c011d9] [EndKey=7480000000000008b65f728000000e06c23727]

[2022/10/31 10:54:16.506 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e2f01c67f] [EndKey=7480000000000008b65f728000000e2f097137]

[2022/10/31 10:54:16.510 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000de084c908] [EndKey=7480000000000008b65f728000000de086d3b7]

[2022/10/31 10:54:16.542 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e41c49a6f] [EndKey=7480000000000008b65f728000000e41c7e842]

[2022/10/31 10:54:16.545 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e07762a06] [EndKey=7480000000000008b65f728000000e077902b7]

[2022/10/31 10:54:16.569 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000f4dfd3950] [EndKey=7480000000000008b65f728000000f4dfe9061]

[2022/10/31 10:54:16.584 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e11afe229] [EndKey=7480000000000008b65f728000000e11b4ffda]

[2022/10/31 10:54:16.594 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000f510b054b] [EndKey=7480000000000008b65f728000000f510d9903]

[2022/10/31 10:54:16.600 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e3eabe032] [EndKey=7480000000000008b65f728000000e3eae5c76]

[2022/10/31 10:54:16.637 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000dd9368f6a] [EndKey=7480000000000008b65f728000000dd9377bd3]

[2022/10/31 10:54:16.645 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000dfcb8619d] [EndKey=7480000000000008b65f728000000dfccaf49c]

[2022/10/31 10:54:16.658 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000f4d95fb13] [EndKey=7480000000000008b65f728000000f4d9734df]

[2022/10/31 10:54:16.688 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e0ee25d53] [EndKey=7480000000000008b65f728000000e0ee6f81e]

[2022/10/31 10:54:16.700 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e01ce4963] [EndKey=7480000000000008b65f728000000e01d24be9]

[2022/10/31 10:54:16.702 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000dd95aee39] [EndKey=7480000000000008b65f728000000dd95ce4b2]

[2022/10/31 10:54:16.704 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddecf9ed3] [EndKey=7480000000000008b65f728000000dded3420f]

[2022/10/31 10:54:16.707 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e0eed88e3] [EndKey=7480000000000008b65f728000000e0ef06969]

[2022/10/31 10:54:16.717 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000f4eb7e704] [EndKey=7480000000000008b65f728000000f4eb9cd6d]

[2022/10/31 10:54:16.755 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000ddf6c522c] [EndKey=7480000000000008b65f728000000ddf6dbdcb]

[2022/10/31 10:54:16.762 +08:00] [INFO] [client.go:878] [“range backuped”] [StartKey=7480000000000008b65f728000000e41d81649] [EndKey=7480000000000008b65f728000000e41d9c7b7]

[2022