Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: The first phase of the transaction two-phase commit (the prewrite phase) takes too long

Under normal execution, prewrite time is fine, but when I use Flink to import data, it becomes slow. I would really appreciate an answer, thank you very much.

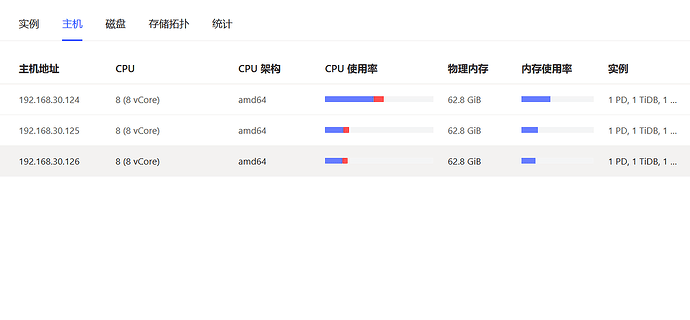

Could you share your server configuration? The latency is alarmingly high. Are there many slow queries?

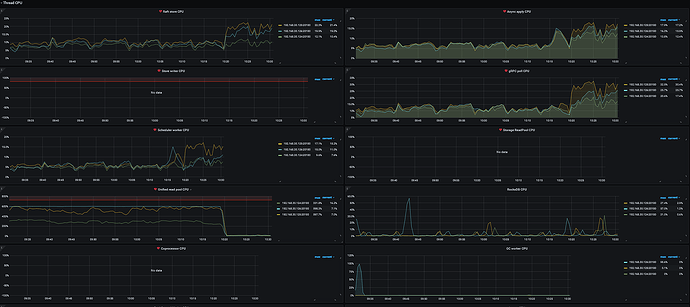

First, check TiDB CPU utilization, TiKV thread CPU utilization (TiKV-Details → Thread CPU), and disk I/O.

Is it slow when inserting a single record manually, or only slow during batch inserts?

Only during batch import.

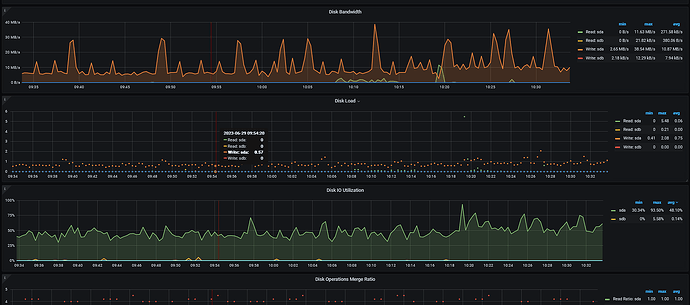

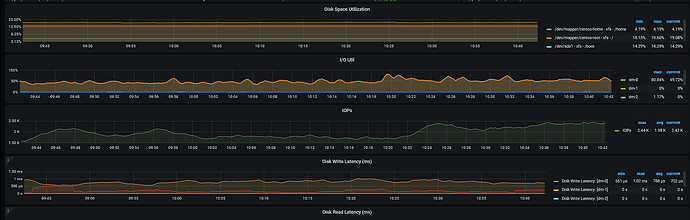

Where can I see the disk I/O?

In the Node Exporter dashboard, or the Disk Performance dashboard.

Is sda the disk TiKV uses? What is its IOPS? The disk doesn't look healthy.
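One way to answer the IOPS question without Grafana is to sample the Linux `/proc/diskstats` counters twice and take the difference. The sketch below is a hypothetical helper (the names `parse_diskstats_line` and `disk_rates` are illustrative, not part of TiDB or node_exporter); it assumes the standard `/proc/diskstats` field layout, where sector counts are always in 512-byte units, and computes read/write IOPS, throughput, and an iostat-style utilization.

```python
# Hypothetical helper: IOPS, throughput and utilization for one disk,
# computed from two /proc/diskstats lines taken interval_s seconds apart.
SECTOR_BYTES = 512  # /proc/diskstats counts sectors in 512-byte units


def parse_diskstats_line(line: str) -> dict:
    """Pick the counters we need from one /proc/diskstats line."""
    f = line.split()
    return {
        "name": f[2],                  # device name, e.g. sda
        "reads": int(f[3]),            # reads completed
        "sectors_read": int(f[5]),     # sectors read
        "writes": int(f[7]),           # writes completed
        "sectors_written": int(f[9]),  # sectors written
        "io_ms": int(f[12]),           # milliseconds spent doing I/O
    }


def disk_rates(before: str, after: str, interval_s: float) -> dict:
    """Per-second rates between two samples of the same device."""
    a = parse_diskstats_line(before)
    b = parse_diskstats_line(after)
    if a["name"] != b["name"]:
        raise ValueError("samples are for different devices")
    d = {k: b[k] - a[k] for k in a if k != "name"}
    return {
        "read_iops": d["reads"] / interval_s,
        "write_iops": d["writes"] / interval_s,
        "read_mb_s": d["sectors_read"] * SECTOR_BYTES / interval_s / 1e6,
        "write_mb_s": d["sectors_written"] * SECTOR_BYTES / interval_s / 1e6,
        # share of wall-clock time the device was busy, like iostat's %util
        "util_pct": min(100.0, d["io_ms"] / (interval_s * 1000) * 100),
    }
```

On a live host, read the `sda` line from `/proc/diskstats` twice, about one second apart, and pass both lines to `disk_rates`. A utilization near 100% with modest IOPS during the Flink import would point at the disk as the bottleneck.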

Are you using a mechanical hard drive?

It is a virtualized disk, reportedly rated at 300 to 500 MB per second.

The disk performance is too poor.

It depends on the workload. For an important production workload, switching to dedicated SSDs is recommended.

I'm only testing performance in a test environment. Would SSDs improve it significantly, so that latency this high no longer occurs?

The read/write speed of a mechanical hard drive is roughly one-sixth that of a SATA SSD and one-thirtieth that of an NVMe SSD.
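To make those ratios concrete, here is a small calculation of how long the same amount of data takes to write on each device class. It uses only the rough ratios quoted above; the 100 MB/s HDD baseline is a hypothetical figure, not a measurement from this cluster, and the model covers sequential throughput only, not random-I/O latency.

```python
# Write times implied by the rough ratios quoted in this thread:
# HDD ≈ 1/6 of a SATA SSD and ≈ 1/30 of an NVMe SSD.
HDD_MB_S = 100.0  # hypothetical baseline throughput, not measured

THROUGHPUT_MB_S = {
    "hdd": HDD_MB_S,
    "sata_ssd": HDD_MB_S * 6,
    "nvme_ssd": HDD_MB_S * 30,
}


def write_seconds(data_mb: float) -> dict:
    """Seconds needed to write data_mb at each device's throughput."""
    return {dev: data_mb / mb_s for dev, mb_s in THROUGHPUT_MB_S.items()}


# write_seconds(3000) -> {'hdd': 30.0, 'sata_ssd': 5.0, 'nvme_ssd': 1.0}
```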

Distributed databases place heavy demands on disks, so SSDs are effectively a must.

The latency has dropped now and CPU usage is low, but the insert still takes 0.4 s. Could this be related to the BLOB column in my SQL?

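Prewrite writes the modified data (along with its locks) into TiKV, so its duration depends on the network, TiKV disk and CPU, and the size of the data itself. Large BLOB values increase the amount written, so they can contribute to the remaining latency.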
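A toy cost model helps show why data size matters. The sketch below simply adds up the three factors named above: a network round trip, a per-row CPU cost, and disk time proportional to the payload. Every parameter name and default value here is hypothetical, and TiKV does not compute prewrite time with any such formula; the point is only that the disk term grows linearly with row size, so large BLOBs can dominate.

```python
# Toy model of one prewrite batch: network + CPU + disk time.
# All parameters are hypothetical illustrations, not TiKV settings.
from dataclasses import dataclass


@dataclass
class PrewriteEnv:
    rtt_ms: float = 1.0           # TiDB -> TiKV round trip (assumed)
    disk_mb_s: float = 100.0      # effective TiKV write throughput (assumed)
    cpu_ms_per_row: float = 0.01  # per-row processing cost (assumed)


def prewrite_ms(rows: int, row_bytes: int, env: PrewriteEnv) -> float:
    """Estimated milliseconds to prewrite `rows` rows of `row_bytes` each."""
    payload_mb = rows * row_bytes / 1e6
    disk_ms = payload_mb / env.disk_mb_s * 1000  # grows with data size
    cpu_ms = rows * env.cpu_ms_per_row
    return env.rtt_ms + cpu_ms + disk_ms


env = PrewriteEnv()
small_rows = prewrite_ms(1000, 1_000, env)   # 1 KB rows
blob_rows = prewrite_ms(1000, 100_000, env)  # 100 KB BLOB rows
```

With these illustrative defaults, 1,000 rows of 1 KB come to about 21 ms, while the same 1,000 rows carrying 100 KB BLOBs come to about 1,011 ms, almost all of it disk time. Measuring the actual BLOB sizes in the Flink batches would show which regime the import is in.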