Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 删除语句执行时间过长

[TiDB Usage Environment] Production Environment

[TiDB Version] 6.1.0

[Issue and Impact] The delete statement for only one table is slow, occurring intermittently, about a few times a day.

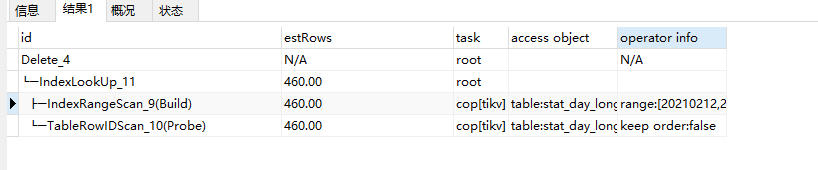

[Reproduction Path] explain

[Resource Configuration] TIKV: 3 nodes (8P32G), Servers and pd: 3 nodes (8P16G)

Premise: TIDB limits statement execution time to no more than 10 seconds.

The delete statement for only one table is slow. After checking the slow log in detail, nothing significant was found.

The data in this table is only: 187937 (180,000 rows).

Please provide the SQL and execution plan.

Take a look at the last two sheets, cop read and transaction.

The SQL statement DELETE FROM stat_day_longtengWHEREevent_date = "20210212" is used to delete records from the table stat_day_longteng where the event_date column matches the value “20210212”.

It’s not always reproducible, but it happens a few times every day.

The image is not visible. Please provide the text you need translated.

When I execute explain now, it works normally, but it occasionally times out.

The whole table is being scanned, so it’s definitely slow. The index isn’t even being used. Try optimizing the conditions first.

Have you enabled slow query? If it’s enabled, you should be able to find detailed information.

If that doesn’t work, enable resource location to help you collect this information.

The slow log is enabled, and the screenshot is above. The entire table has no more than 200,000 rows of data, so even a full table scan shouldn’t take more than 10 seconds, right?

Checked the tidb.log and found this:

[2022/12/05 14:47:17.787 +08:00] [INFO] [conn.go:1149] [“command dispatched failed”] [conn=367744858060751563] [connInfo=“id:367744858060751563, addr:172.28.3.8:61211 status:11, collation:utf8_general_ci, user:tx_data”] [command=Execute] [status=“inTxn:1, autocommit:1”] [sql=“DELETE FROM stat_day_longteng WHERE event_date = ? [arguments: "20210212"]”] [txn_mode=PESSIMISTIC] [timestamp=437838892945375274] [err=“context canceled\ngithub.com/tikv/client-go/v2/internal/locate.(*RegionRequestSender).sendReqToRegion\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/internal/locate/region_request.go:1220\ngithub.com/tikv/client-go/v2/internal/locate.(*RegionRequestSender).SendReqCtx\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/internal/locate/region_request.go:990\ngithub.com/tikv/client-go/v2/internal/locate.(*RegionRequestSender).SendReq\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/internal/locate/region_request.go:231\ngithub.com/tikv/client-go/v2/tikv.(*KVStore).SendReq\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/tikv/kv.go:394\ngithub.com/tikv/client-go/v2/txnkv/transaction.actionPessimisticLock.handleSingleBatch\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/txnkv/transaction/pessimistic.go:143\ngithub.com/tikv/client-go/v2/txnkv/transaction.(*batchExecutor).startWorker.func1\n\t/go/pkg/mod/github.com/tikv/client-go/v2@v2.0.1-0.20220531092439-efebaeb9fe53/txnkv/transaction/2pc.go:1993\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571\ngithub.com/pingcap/errors.AddStack\n\t/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20211224045212-9687c2b0f87c/errors.go:174\ngithub.com/pingcap/errors.Trace\n\t/go/pkg/mod/github.com/pingcap/errors@v0.11.5-0.20211224045212-9687c2b0f87c/juju_adaptor.go:15\ngithub.com/pingcap/tidb/store/driver/error.ToTiDBErr\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/driver/error/error.go:169\ngithub.com/pingcap/tidb/store/driver/txn.extractKeyErr\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/driver/txn/error.go:154\ngithub.com/pingcap/tidb/store/driver/txn.(*tikvTxn).extractKeyErr\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/driver/txn/txn_driver.go:275\ngithub.com/pingcap/tidb/store/driver/txn.(*tikvTxn).LockKeys\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/store/driver/txn/txn_driver.go:75\ngithub.com/pingcap/tidb/session.(*LazyTxn).LockKeys\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/txn.go:392\ngithub.com/pingcap/tidb/executor.(*ExecStmt).handlePessimisticDML\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/executor/adapter.go:714\ngithub.com/pingcap/tidb/executor.(*ExecStmt).handleNoDelay\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/executor/adapter.go:514\ngithub.com/pingcap/tidb/executor.(*ExecStmt).Exec\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/executor/adapter.go:465\ngithub.com/pingcap/tidb/session.runStmt\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2069\ngithub.com/pingcap/tidb/session.(*session).preparedStmtExec\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2223\ngithub.com/pingcap/tidb/session.(*session).ExecutePreparedStmt\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/session/session.go:2419\ngithub.com/pingcap/tidb/server.(*TiDBStatement).Execute\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/driver_tidb.go:75\ngithub.com/pingcap/tidb/server.(*clientConn).executePreparedStmtAndWriteResult\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/conn_stmt.go:234\ngithub.com/pingcap/tidb/server.(*clientConn).handleStmtExecute\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/conn_stmt.go:201\ngithub.com/pingcap/tidb/server.(*clientConn).dispatch\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/conn.go:1393\ngithub.com/pingcap/tidb/server.(*clientConn).Run\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/conn.go:1121\ngithub.com/pingcap/tidb/server.(*Server).onConn\n\t/home/jenkins/agent/workspace/build-common/go/src/github.com/pingcap/tidb/server/server.go:559\nruntime.goexit\n\t/usr/local/go/src/runtime/asm_amd64.s:1571\nDELETE FROM stat_day_longteng WHERE event_date = ? [arguments: "20210212"]”]

What configuration? Machine configuration, cluster configuration

Please supplement the information according to this:

When describing the problem, please follow:

【TiDB Usage Environment】Production environment / Testing / PoC

【TiDB Version】

【Problem Phenomenon and Impact】

【Reproduction Path】What operations were performed that caused the problem

【Resource Configuration】

When describing, try to provide as much effective background information as possible. Many issues may have different suggestions in different scenarios and business contexts. Not explaining clearly will only make it difficult for everyone to help~

[TiDB Usage Environment] Production environment

[TiDB Version] 6.1.0

[Issue and Impact] The delete statement for only one table is slow, occurring intermittently, about a few times a day

[Reproduction Path] explain

[Resource Configuration] TIKV: 3 nodes (8P32G), Servers and PD: 3 nodes (8P16G)

Updated

How many TiDB nodes are there?

What type of disks do TiKV and PD use?

The log describes a command dispatch failure…

command dispatched failed

[2022/12/05 14:47:17.787 +08:00] [INFO] [conn.go:1149] ["command dispatched failed"] [conn=367744858060751563] [connInfo="id:367744858060751563, addr:172.28.3.8:61211 status:11, collation:utf8_general_ci, user:tx_data"] [command=Execute] [status="inTxn:1, autocommit:1"] [sql="DELETE FROM `stat_day_longteng` WHERE `event_date` = ? [arguments: "20210212"]"] [txn_mode=PESSIMISTIC] [timestamp=437838892945375274]

[err="context canceled]

This seems to be manually canceled, can be ignored

The entire TiDB cluster has 6 nodes, with KV exclusively occupying 3 nodes, and tidbServers and tipd occupying the other 3 nodes. All are SSD hard drives.

It is recommended to hit the data through indexing and optimize with this goal in mind.

Other suggestions might not be useful for you.

The data volume is too small

Okay, I have already added the index. I will check in the next couple of days to see if the issue still occurs. If it doesn’t, I will also reply. Please keep an eye on the issue for the next few days. Thank you very much.

Buddy, it still happens. I added an index

After adding the index, how long does it take to execute? Is there still a slow query?