Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: Resource control: background task management test results do not meet expectations

[TiDB Usage Environment]

Test Environment

[TiDB Version]

v7.5.0

A local cluster started with tiup playground (1 TiDB + 1 PD + 1 TiKV), used only for functional testing, not performance testing.

[Reproduction Path]

[Encountered Issue: Problem Phenomenon and Impact]

Expected goal of the test:

Foreground tasks and background tasks both use the default resource group.

When a task is marked as a background task, TiKV dynamically limits that task's resource usage to minimize its impact on foreground tasks while it runs. The goal is for background tasks to be recognized automatically and for their resource consumption to be reduced.

1. Start the local cluster with tiup. Resource control is enabled:

mysql> show variables like 'tidb_enable_resource_control';
+------------------------------+-------+
| Variable_name                | Value |
+------------------------------+-------+
| tidb_enable_resource_control | ON    |
+------------------------------+-------+
1 row in set (0.01 sec)

The TiKV parameter resource-control.enabled is left unmodified at its default value, true.

2. Prepare test data, then use Lightning to check whether the background task setting takes effect.

tiup bench tpcc -H 127.0.0.1 -P 4000 --user app_oltp -D tpcc --warehouses 100 --threads 20 prepare

tiup dumpling -u root -h 127.0.0.1 -P 4000 -B tpcc --filetype sql -t 8 -o ./tpcc_data -r 200000 -F 256MiB

3. Import with Lightning to observe the behavior without any background task settings.

mysql> SELECT * FROM information_schema.resource_groups;
+----------+------------+----------+-----------+-------------+------------+
| NAME     | RU_PER_SEC | PRIORITY | BURSTABLE | QUERY_LIMIT | BACKGROUND |
+----------+------------+----------+-----------+-------------+------------+
| default  | UNLIMITED  | MEDIUM   | YES       | NULL        | NULL       |
| rg_olap  | 400        | MEDIUM   | YES       | NULL        | NULL       |
| rg_oltp  | 1000       | HIGH     | YES       | NULL        | NULL       |
| rg_other | 100        | MEDIUM   | NO        | NULL        | NULL       |
+----------+------------+----------+-----------+-------------+------------+
4 rows in set (0.01 sec)

tiup tidb-lightning -config tidb-lightning.toml

4. After the import completes, wait a few minutes for the cluster to stabilize, then delete the imported data.

5. Mark Lightning imports as a background task.

mysql> ALTER RESOURCE GROUP default BACKGROUND=(TASK_TYPES='lightning');

Query OK, 0 rows affected (0.15 sec)

mysql> SELECT * FROM information_schema.resource_groups;
+----------+------------+----------+-----------+-------------+------------------------+
| NAME     | RU_PER_SEC | PRIORITY | BURSTABLE | QUERY_LIMIT | BACKGROUND             |
+----------+------------+----------+-----------+-------------+------------------------+
| default  | UNLIMITED  | MEDIUM   | YES       | NULL        | TASK_TYPES='lightning' |
| rg_olap  | 400        | MEDIUM   | YES       | NULL        | NULL                   |
| rg_oltp  | 1000       | HIGH     | YES       | NULL        | NULL                   |
| rg_other | 100        | MEDIUM   | NO        | NULL        | NULL                   |
+----------+------------+----------+-----------+-------------+------------------------+
4 rows in set (0.01 sec)

tiup tidb-lightning -config tidb-lightning.toml

6. Wait for the import to complete and observe the resource consumption.

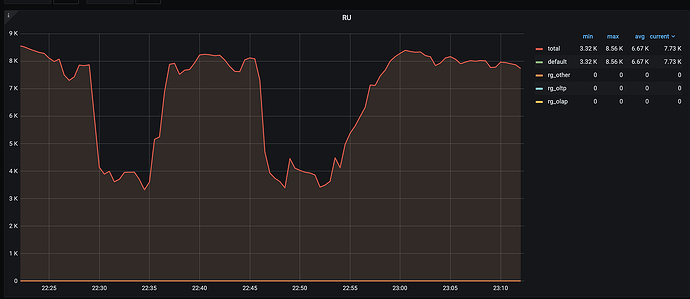

Conclusion: the background task setting did not take effect. Lightning's resource consumption is almost the same with or without it, and its impact on foreground tasks is still considerable.
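To make "almost the same" more concrete, one option is to export the same metric (for example TiKV CPU usage) for the run without the background setting and the run with it, then compare the averages. This is a minimal sketch under those assumptions: the function names and the 5% tolerance are hypothetical choices, and the inputs are whatever samples are exported from monitoring, not values from this test.

```python
from statistics import mean


def relative_change(baseline, with_background):
    """Relative change of a metric's mean between two runs.

    baseline: samples from the run without BACKGROUND settings.
    with_background: samples from the run with TASK_TYPES set.
    A negative result means the metric dropped after marking the task as background.
    """
    if not baseline or not with_background:
        raise ValueError("both series need at least one sample")
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean is zero; relative change is undefined")
    return (mean(with_background) - base) / base


def looks_unchanged(baseline, with_background, tolerance=0.05):
    """True if the two runs differ by less than `tolerance` (5% by default, an arbitrary choice)."""
    return abs(relative_change(baseline, with_background)) < tolerance
```

Comparing means keeps the check simple; comparing peak values or percentiles instead would be an equally reasonable choice if bursts matter more than averages.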

7. Other scenarios were verified next, with similar conclusions.

mysql> ALTER RESOURCE GROUP `default` BACKGROUND=(TASK_TYPES='lightning,stats,ddl,br');

Query OK, 0 rows affected (0.27 sec)

mysql> SELECT * FROM information_schema.resource_groups;
+----------+------------+----------+-----------+-------------+-------------------------------------+
| NAME     | RU_PER_SEC | PRIORITY | BURSTABLE | QUERY_LIMIT | BACKGROUND                          |
+----------+------------+----------+-----------+-------------+-------------------------------------+
| default  | UNLIMITED  | MEDIUM   | YES       | NULL        | TASK_TYPES='lightning,stats,ddl,br' |
| rg_olap  | 400        | MEDIUM   | YES       | NULL        | NULL                                |
| rg_oltp  | 1000       | HIGH     | YES       | NULL        | NULL                                |
| rg_other | 100        | MEDIUM   | NO        | NULL        | NULL                                |
+----------+------------+----------+-----------+-------------+-------------------------------------+
4 rows in set (0.01 sec)
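When repeating these runs from a script, it can help to confirm that the BACKGROUND column really lists the intended task types before each test starts. The following is a minimal Python sketch, assuming the column text has the format shown in the output above (TASK_TYPES='...' or NULL); the function names parse_background and missing_task_types are hypothetical helpers, not part of any TiDB tool.

```python
import re

# Matches the BACKGROUND column text, e.g. TASK_TYPES='lightning,stats'.
# The format is assumed from the mysql client output shown above.
_TASK_TYPES_RE = re.compile(r"TASK_TYPES\s*=\s*'([^']*)'", re.IGNORECASE)


def parse_background(value):
    """Return the set of task types listed in a BACKGROUND column value.

    NULL (or None / empty) means no background task types are configured.
    """
    if value is None or value.strip().upper() in ("", "NULL"):
        return set()
    match = _TASK_TYPES_RE.search(value)
    if match is None:
        raise ValueError(f"unrecognized BACKGROUND value: {value!r}")
    return {t.strip().lower() for t in match.group(1).split(",") if t.strip()}


def missing_task_types(value, expected):
    """Return the expected task types that the BACKGROUND value does not list."""
    return set(expected) - parse_background(value)


if __name__ == "__main__":
    row = "TASK_TYPES='lightning,stats,ddl,br'"  # copied from the output above
    print(sorted(parse_background(row)))  # ['br', 'ddl', 'lightning', 'stats']
    print(missing_task_types("NULL", {"lightning"}))  # {'lightning'}
```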

- DDL, tested by adding an index: with or without the background task setting, there is no improvement.

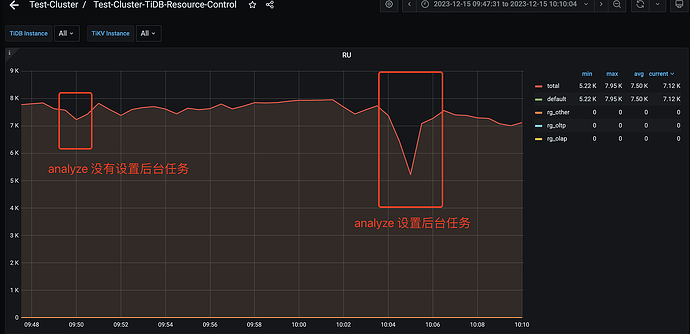

- Stats, tested by running ANALYZE on a table with 33 million rows: the background task setting brought no improvement either. It even squeezed foreground tasks further, sharply reducing the resources available to them, as shown below:

Official website:

Questions:

- The actual results do not meet expectations. Is some key configuration missing?

- Has anyone else tested this feature and seen the same behavior? How can it be solved?