Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: ticdc 日志包含大量的 execute dml with error 报错

[TiDB Usage Environment] Production Environment

[TiDB Version] 4.0

[Reproduction Path] What operations were performed when the issue occurred

[Encountered Issue: Issue Phenomenon and Impact]

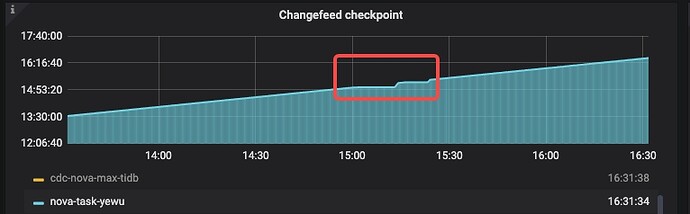

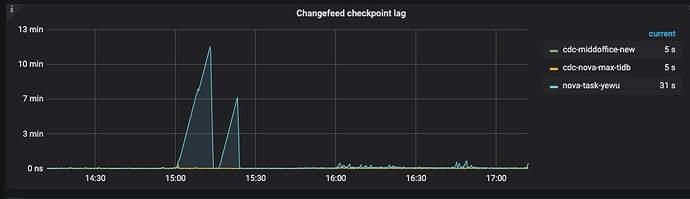

There is high latency in CDC, and many DML errors were found in the logs. Could this be caused by downstream deadlocks? The same logs appear at the time the issue occurred. The logs can be matched with the screenshots.

[Resource Configuration]

[Attachments: Screenshots/Logs/Monitoring]

[2023/07/05 00:01:05.781 +00:00] [WARN] [mysql.go:854] [“execute DMLs with error, retry later”] [error=“[CDC:ErrMySQLTxnError]Error 1213: Deadlock found when trying to get lock; try restarting transaction”] [errorVerbose=“[CDC:ErrMySQLTxnError]Error 1213: Deadlock found when trying to get lock; try restarting transaction\ngithub.com/pingcap/errors.AddStack\n\tgithub.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/errors.go:174\ngithub.com/pingcap/errors.(*Error).GenWithStackByCause\n\tgithub.com/pingcap/errors@v0.11.5-0.20201126102027-b0a155152ca3/normalize.go:279\ngithub.com/pingcap/ticdc/pkg/errors.WrapError\n\tgithub.com/pingcap/ticdc@/pkg/errors/helper.go:28\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSink).execDMLWithMaxRetries.func2.3\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:877\ngithub.com/pingcap/ticdc/cdc/sink.(*Statistics).RecordBatchExecution\n\tgithub.com/pingcap/ticdc@/cdc/sink/statistics.go:99\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSink).execDMLWithMaxRetries.func2\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:865\ngithub.com/pingcap/ticdc/pkg/retry.Run.func1\n\tgithub.com/pingcap/ticdc@/pkg/retry/retry.go:32\ngithub.com/cenkalti/backoff.RetryNotify\n\tgithub.com/cenkalti/backoff@v2.2.1+incompatible/retry.go:37\ngithub.com/cenkalti/backoff.Retry\n\tgithub.com/cenkalti/backoff@v2.2.1+incompatible/retry.go:24\ngithub.com/pingcap/ticdc/pkg/retry.Run\n\tgithub.com/pingcap/ticdc@/pkg/retry/retry.go:31\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSink).execDMLWithMaxRetries\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:857\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSink).execDMLs\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:1016\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSinkWorker).run.func3\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:790\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSinkWorker).run\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:811\ngithub.com/pingcap/ticdc/cdc/sink.(*mysqlSink).createSinkWorkers.func1\n\tgithub.com/pingcap/ticdc@/cdc/sink/mysql.go:635\nruntime.goexit\n\truntime/asm_amd64.s:1357”]