Note:

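This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiCDC同步TiDB数据到下游MySQL很慢,而且下游MySQL实例会增加很多的binlog日志 (TiCDC is slow to replicate TiDB data to downstream MySQL, and the downstream MySQL instance generates a large volume of binlogs)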

TiDB version 5.4.0, TiCDC version 5.4.0

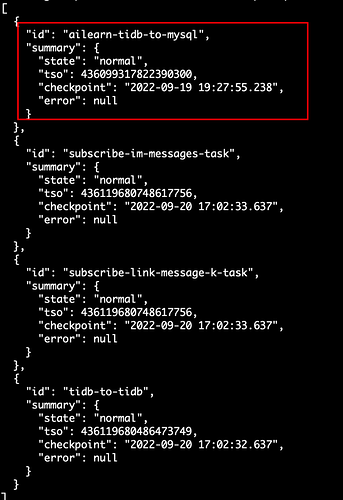

We are using a TiCDC task to replicate TiDB data to a downstream MySQL instance, and the replication lag is significant. The incremental data is only about 1 GB per day, yet the task is already 11 hours behind. Why is this happening, and how can it be optimized?

In addition, the downstream MySQL instance generates binlogs very quickly, roughly 1 GB per minute. Is there any way to reduce this?
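To put the two figures side by side, here is a rough back-of-the-envelope calculation. It assumes that "1G" means 1 GB in both cases and that both rates are steady over a full day; the function name `binlog_amplification` is only illustrative and not part of any TiCDC or MySQL tooling.

```python
MINUTES_PER_DAY = 60 * 24


def binlog_amplification(binlog_gb_per_min: float, incremental_gb_per_day: float) -> float:
    """Return how many times larger the daily downstream binlog volume is
    than the daily upstream incremental data volume."""
    binlog_gb_per_day = binlog_gb_per_min * MINUTES_PER_DAY
    return binlog_gb_per_day / incremental_gb_per_day


if __name__ == "__main__":
    # Figures from this post (assumed steady): 1 GB/min of binlog, 1 GB/day of incremental data.
    ratio = binlog_amplification(1.0, 1.0)
    print(f"Downstream binlog volume is about {ratio:.0f}x the upstream incremental data")
```

If these numbers hold, the downstream instance is writing on the order of a thousand times more binlog than the amount of changed data upstream, which is the part I would most like to understand.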
