Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiCDC v6.5.1 不往下游同步数据 (TiCDC v6.5.1 is not replicating data downstream)

【TiDB Usage Environment】Production Environment / Testing / PoC

【TiDB Version】v6.5.1

【Reproduction Path】Operations performed that led to the issue

【Encountered Issue: Problem Phenomenon and Impact】

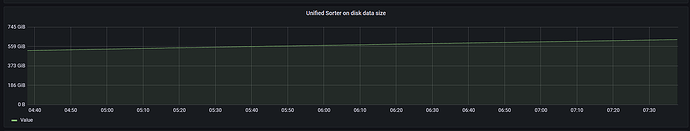

The "Unified Sorter on disk data size" metric keeps increasing, but TiCDC is not writing any data downstream.

【Resource Configuration】

【Attachments: Screenshots / Logs / Monitoring】

Could the data volume be too large for the available memory? Is TiCDC running on a mechanical disk (HDD)?

We are using SSDs, and memory usage is below 1/10.

The key issue right now is that no one knows what CDC is actually doing.

The tidb_gc_life_time system variable controls how long TiDB retains historical data versions before garbage collection (GC) can remove them. The default value is 10m, which means that versions older than 10 minutes become eligible for GC. If you want to keep old versions longer, set it to a larger value, such as 24h.

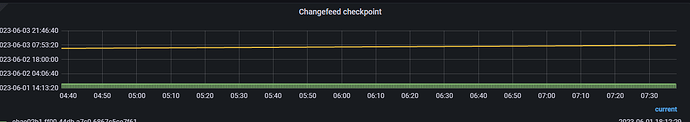

Check the status and running logs of the changefeed.

Is there a large transaction? You can look at the output count to see whether any data is being replicated.

There probably is a large transaction.

It seems that v6.5.1 splits large transactions by default. Did you explicitly disable transaction splitting when creating the changefeed?
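As far as I know, transaction splitting is controlled by the `transaction-atomicity` parameter in the sink URI: `none` lets TiCDC split single-table transactions, while `table` keeps each one atomic. Below is a minimal Python sketch for checking a sink URI. The URI is a made-up example, and the assumed default of `none` when the parameter is absent should be verified against the documentation for your exact version.

```python
from urllib.parse import urlsplit, parse_qs

# Assumption: when the parameter is absent, v6.5.x behaves as "none" (splitting enabled).
ASSUMED_DEFAULT = "none"


def transaction_atomicity(sink_uri: str) -> str:
    """Return the transaction-atomicity value found in a TiCDC sink URI."""
    query = parse_qs(urlsplit(sink_uri).query)
    values = query.get("transaction-atomicity")
    return values[-1].lower() if values else ASSUMED_DEFAULT


def splits_large_transactions(sink_uri: str) -> bool:
    """True when single-table transactions may be split before being written downstream."""
    return transaction_atomicity(sink_uri) == "none"


# Hypothetical sink URI, for illustration only.
uri = "mysql://user:password@127.0.0.1:3306/?transaction-atomicity=table"
print(transaction_atomicity(uri), splits_large_transactions(uri))
```

If this reports `table` for your changefeed, a very large single-table transaction has to be handled as a whole, which could explain sorter data piling up on disk while nothing reaches the downstream.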

- What is the downstream? Check the monitoring for downstream write latency.

- Can you provide more monitoring metrics, such as the metrics in the dataflow section?

- Check the monitoring in the lag analyze section.

- Is ResolvedTs progressing? Or is it still stuck?

- Check the logs for any errors, especially for the keyword “too long.”
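The log check in the last item can be sketched in Python as follows. This is only a rough filter, assuming the usual TiDB-style log layout (`[time] [LEVEL] [source] ["message"]`); the sample lines are invented placeholders, not real TiCDC output.

```python
import re

# Assumed TiDB-style log layout: [time] [LEVEL] [source] ["message"] [fields...]
LEVEL_RE = re.compile(r"^\[[^\]]*\] \[(\w+)\]")
ERROR_LEVELS = {"ERROR", "FATAL", "PANIC"}


def suspicious_lines(lines, keyword="too long"):
    """Yield (line_number, line) for error-level entries or lines containing the keyword."""
    needle = keyword.lower()
    for number, line in enumerate(lines, start=1):
        match = LEVEL_RE.match(line)
        level = match.group(1).upper() if match else ""
        if level in ERROR_LEVELS or needle in line.lower():
            yield number, line.rstrip("\n")


# Invented placeholder lines, for illustration only.
sample = [
    '[2023/04/01 10:00:00.000 +08:00] [INFO] [example.go:1] ["placeholder info message"]',
    '[2023/04/01 10:00:01.000 +08:00] [WARN] [example.go:2] ["placeholder: operation took too long"]',
    '[2023/04/01 10:00:02.000 +08:00] [ERROR] [example.go:3] ["placeholder error message"]',
]
for number, line in suspicious_lines(sample):
    print(number, line)
```

To run it against a real log, pass an open file object instead of `sample`; the log file location depends on your deployment.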

If possible, please provide anonymized logs for troubleshooting, thank you.

Take a look at lag analyze
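As I understand it, the lag shown there is essentially wall-clock time minus the physical time encoded in the changefeed's checkpoint or resolved timestamp. If you only have the raw TSO values, the Python sketch below decodes them, assuming the standard TiDB TSO layout (physical milliseconds in the high bits, 18 logical bits). The sample values are constructed for illustration and are not taken from this cluster.

```python
from datetime import datetime, timedelta, timezone

LOGICAL_BITS = 18  # TiDB TSO layout: (physical milliseconds << 18) | logical counter


def tso_to_datetime(tso: int) -> datetime:
    """Decode the physical part of a TSO into a UTC datetime."""
    physical_ms = tso >> LOGICAL_BITS
    return datetime.fromtimestamp(physical_ms / 1000, tz=timezone.utc)


def lag_seconds(tso: int, now: datetime) -> float:
    """Seconds between `now` and the physical time encoded in the TSO."""
    return (now - tso_to_datetime(tso)).total_seconds()


def is_advancing(earlier_tso: int, later_tso: int) -> bool:
    """Compare two samples of the same timestamp taken some time apart."""
    return later_tso > earlier_tso


# Constructed example: a TSO for 2023-04-01 00:00:00 UTC, observed 90 seconds later.
base = datetime(2023, 4, 1, tzinfo=timezone.utc)
tso = int(base.timestamp() * 1000) << LOGICAL_BITS
print(tso_to_datetime(tso), lag_seconds(tso, base + timedelta(seconds=90)))
```

Sampling the resolved timestamp twice a minute or so apart and passing both values to `is_advancing` shows whether it is moving at all or is stuck.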