Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: tidb5.4测试性能低,如何调优,求救 (TiDB 5.4 test performance is low; how can it be tuned? Help needed)

[TiDB Usage Environment]

System: CentOS 7

3 virtual machines, each with 32 CPU cores and 32 GB of memory

TiDB v5.4.1, installed with TiUP

Testing with sysbench

Official test documentation used as a reference: TiDB Sysbench Performance Test Report - v5.4.0 vs. v5.3.0 | PingCAP Docs

The cluster deployment was tuned according to the official guidelines; reference: TiDB Environment and System Configuration Check | PingCAP Docs

[Overview] Scenario + Problem Overview

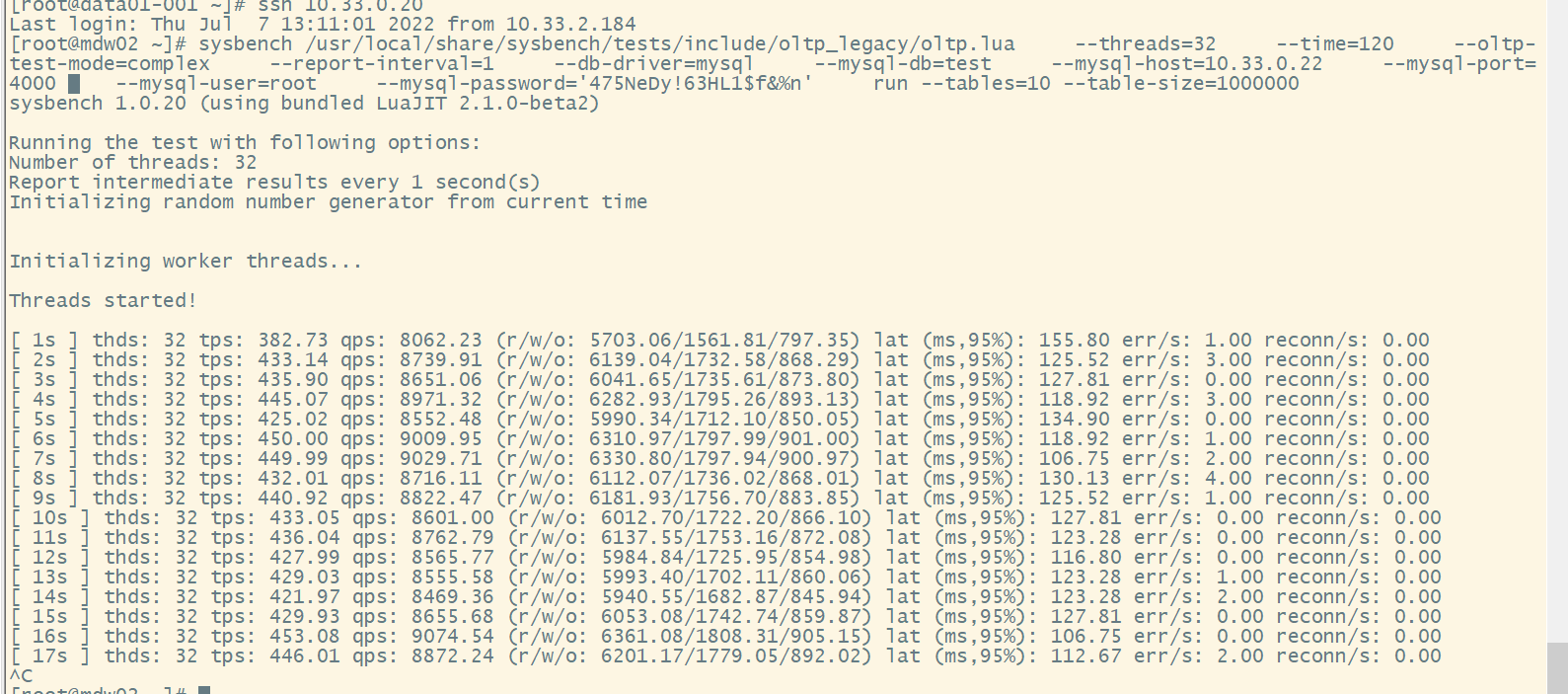

Test command:

    sysbench /usr/local/share/sysbench/tests/include/oltp_legacy/oltp.lua \
        --threads=32 \
        --time=120 \
        --oltp-test-mode=complex \
        --report-interval=1 \
        --db-driver=mysql \
        --mysql-db=test \
        --mysql-host=127.0.0.1 \
        --mysql-port=4000 \
        --mysql-user=root \
        --mysql-password='' \
        run --tables=10 --table-size=1000000

[Test Results]

    Running the test with the following options:
    Number of threads: 32
    Report intermediate results every 1 second(s)
    Initializing random number generator from current time

    Initializing worker threads...

    Threads started!

    [ 1s ] thds: 32 tps: 416.98 qps: 8742.65 (r/w/o: 6179.07/1696.78/866.80) lat (ms,95%): 127.81 err/s: 1.99 reconn/s: 0.00
    [ 2s ] thds: 32 tps: 466.10 qps: 9411.10 (r/w/o: 6590.47/1885.42/935.21) lat (ms,95%): 114.72 err/s: 4.00 reconn/s: 0.00
    [ 3s ] thds: 32 tps: 456.06 qps: 9200.30 (r/w/o: 6464.91/1820.26/915.13) lat (ms,95%): 121.08 err/s: 1.00 reconn/s: 0.00
    [ 4s ] thds: 32 tps: 453.04 qps: 8951.88 (r/w/o: 6248.62/1801.18/902.09) lat (ms,95%): 137.35 err/s: 0.00 reconn/s: 0.00
    [ 5s ] thds: 32 tps: 471.86 qps: 9554.10 (r/w/o: 6705.97/1899.42/948.71) lat (ms,95%): 112.67 err/s: 1.00 reconn/s: 0.00
    [ 6s ] thds: 32 tps: 475.09 qps: 9506.72 (r/w/o: 6650.20/1905.34/951.17) lat (ms,95%): 108.68 err/s: 1.00 reconn/s: 0.00
    [ 7s ] thds: 32 tps: 472.97 qps: 9412.31 (r/w/o: 6585.52/1879.86/946.93) lat (ms,95%): 112.67 err/s: 1.00 reconn/s: 0.00
    [ 8s ] thds: 32 tps: 464.41 qps: 9388.10 (r/w/o: 6588.65/1869.63/929.82) lat (ms,95%): 118.92 err/s: 1.00 reconn/s: 0.00
    [ 9s ] thds: 32 tps: 446.45 qps: 8890.98 (r/w/o: 6213.27/1782.80/894.90) lat (ms,95%): 139.85 err/s: 2.00 reconn/s: 0.00
    [ 10s ] thds: 32 tps: 467.03 qps: 9340.66 (r/w/o: 6539.46/1866.13/935.07) lat (ms,95%): 118.92 err/s: 1.00 reconn/s: 0.00

    SQL statistics:
        queries performed:
            read:                            64932
            write:                           18522
            other:                           9262
            total:                           92716
        transactions:                        4624   (458.37 per sec.)
        queries:                             92716  (9190.89 per sec.)
        ignored errors:                      14     (1.39 per sec.)
        reconnects:                          0      (0.00 per sec.)

    General statistics:
        total time:                          10.0853s
        total number of events:              4624

    Latency (ms):
        min:                                 33.13
        avg:                                 69.44
        max:                                 342.81
        95th percentile:                     121.08
        sum:                                 321088.75

    Threads fairness:
        events (avg/stddev):                 144.5000/3.81
        execution time (avg/stddev):         10.0340/0.02
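As a rough sanity check on these numbers, the sketch below applies Little's law (throughput is approximately concurrency divided by average latency) to the summary figures and also derives the number of queries per transaction. All values are copied from the output above; the function and variable names are only illustrative. It shows that with 32 threads and an average latency of about 69 ms, the measured TPS is close to what the latency allows, so the throughput looks latency-bound at this concurrency. It does not say where the latency comes from.

```python
# Figures copied from the sysbench summary in this post.
threads = 32
avg_latency_ms = 69.44
transactions = 4624
total_time_s = 10.0853
reads, writes, others = 64932, 18522, 9262


def expected_tps(concurrency: int, latency_ms: float) -> float:
    """Little's law for a closed-loop benchmark: tps ~= concurrency / latency."""
    return concurrency / (latency_ms / 1000.0)


# Throughput actually achieved over the whole run.
measured_tps = transactions / total_time_s

# Throughput that 32 threads can reach if each transaction takes avg_latency_ms.
predicted_tps = expected_tps(threads, avg_latency_ms)

# Average number of read / write / other statements per committed transaction.
per_txn = tuple(round(n / transactions, 2) for n in (reads, writes, others))

print(f"measured tps:  {measured_tps:.2f}")
print(f"predicted tps: {predicted_tps:.2f}")
print(f"queries per transaction (r/w/o): {per_txn}")
```

The two throughput values agree to within about one percent, which suggests that raising TPS in this setup would require either lower per-transaction latency or more concurrent threads.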

[Issues]

- Only the TiDB node that sysbench connects to shows about 30% CPU usage; the other TiDB nodes stay in the single digits.

- I/O utilization on all three nodes is 70-80%.

- TPS is low (about 458 in the run above), and the test reports errors (14 ignored errors).
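To put numbers on the low TPS and the errors, the per-second report lines can be parsed and summarized. The following is a minimal sketch, assuming the interval line format shown in the test results; the regular expression, the `parse_intervals` and `summarize` helpers, and the three-line `SAMPLE` (copied from the first three report lines) are illustrative and not part of sysbench itself.

```python
import re
from statistics import mean

# Matches sysbench interval lines such as:
# [ 1s ] thds: 32 tps: 416.98 qps: 8742.65 (...) lat (ms,95%): 127.81 err/s: 1.99 ...
INTERVAL_RE = re.compile(
    r"\[\s*(?P<sec>\d+)s\s*\]\s+thds:\s+(?P<thds>\d+)\s+tps:\s+(?P<tps>[\d.]+)\s+"
    r"qps:\s+(?P<qps>[\d.]+).*?lat \(ms,95%\):\s+(?P<p95>[\d.]+)\s+"
    r"err/s:\s+(?P<err>[\d.]+)"
)

# First three interval lines copied from the test results in this post.
SAMPLE = """
[ 1s ] thds: 32 tps: 416.98 qps: 8742.65 (r/w/o: 6179.07/1696.78/866.80) lat (ms,95%): 127.81 err/s: 1.99 reconn/s: 0.00
[ 2s ] thds: 32 tps: 466.10 qps: 9411.10 (r/w/o: 6590.47/1885.42/935.21) lat (ms,95%): 114.72 err/s: 4.00 reconn/s: 0.00
[ 3s ] thds: 32 tps: 456.06 qps: 9200.30 (r/w/o: 6464.91/1820.26/915.13) lat (ms,95%): 121.08 err/s: 1.00 reconn/s: 0.00
"""


def parse_intervals(text: str) -> list[dict[str, float]]:
    """Return one dict of numeric fields per interval line found in text."""
    return [
        {key: float(value) for key, value in match.groupdict().items()}
        for match in INTERVAL_RE.finditer(text)
    ]


def summarize(rows: list[dict[str, float]]) -> dict[str, float]:
    """Aggregate throughput, tail latency, and errors over all intervals."""
    return {
        "mean_tps": mean(row["tps"] for row in rows),
        "min_tps": min(row["tps"] for row in rows),
        "max_p95": max(row["p95"] for row in rows),
        # With a 1-second report interval, err/s sums to an approximate error count.
        "errors": sum(row["err"] for row in rows),
    }


rows = parse_intervals(SAMPLE)
summary = summarize(rows)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Feeding the full sysbench output to `parse_intervals` instead of `SAMPLE` gives the same summary for the whole run, which makes it easier to compare runs after changing a setting.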