All nodes are up, including the 7 TiKV instances, and the follower count has been changed to 1, but error 8243 is still reported.

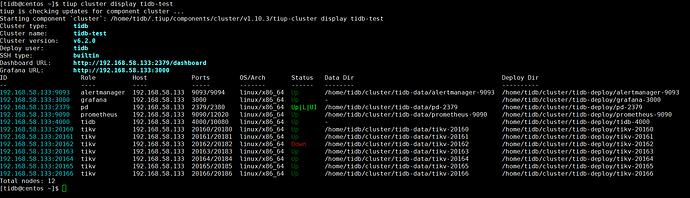

[tidb@centos ~]$ tiup cluster display tidb-test

tiup is checking updates for component cluster ...

Starting component `cluster`: /home/tidb/.tiup/components/cluster/v1.10.3/tiup-cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v6.2.0

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.58.133:2379/dashboard

Grafana URL: http://192.168.58.133:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.58.133:9093 alertmanager 192.168.58.133 9093/9094 linux/x86_64 Up /home/tidb/cluster/tidb-data/alertmanager-9093 /home/tidb/cluster/tidb-deploy/alertmanager-9093

192.168.58.133:3000 grafana 192.168.58.133 3000 linux/x86_64 Up - /home/tidb/cluster/tidb-deploy/grafana-3000

192.168.58.133:2379 pd 192.168.58.133 2379/2380 linux/x86_64 Up|L|UI /home/tidb/cluster/tidb-data/pd-2379 /home/tidb/cluster/tidb-deploy/pd-2379

192.168.58.133:9090 prometheus 192.168.58.133 9090/12020 linux/x86_64 Up /home/tidb/cluster/tidb-data/prometheus-9090 /home/tidb/cluster/tidb-deploy/prometheus-9090

192.168.58.133:4000 tidb 192.168.58.133 4000/10080 linux/x86_64 Up - /home/tidb/cluster/tidb-deploy/tidb-4000

192.168.58.133:20160 tikv 192.168.58.133 20160/20180 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20160 /home/tidb/cluster/tidb-deploy/tikv-20160

192.168.58.133:20161 tikv 192.168.58.133 20161/20181 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20161 /home/tidb/cluster/tidb-deploy/tikv-20161

192.168.58.133:20162 tikv 192.168.58.133 20162/20182 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20162 /home/tidb/cluster/tidb-deploy/tikv-20162

192.168.58.133:20163 tikv 192.168.58.133 20163/20183 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20163 /home/tidb/cluster/tidb-deploy/tikv-20163

192.168.58.133:20164 tikv 192.168.58.133 20164/20184 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20164 /home/tidb/cluster/tidb-deploy/tikv-20164

192.168.58.133:20165 tikv 192.168.58.133 20165/20185 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20165 /home/tidb/cluster/tidb-deploy/tikv-20165

192.168.58.133:20166 tikv 192.168.58.133 20166/20186 linux/x86_64 Up /home/tidb/cluster/tidb-data/tikv-20166 /home/tidb/cluster/tidb-deploy/tikv-20166

Total nodes: 12

[tidb@centos ~]$

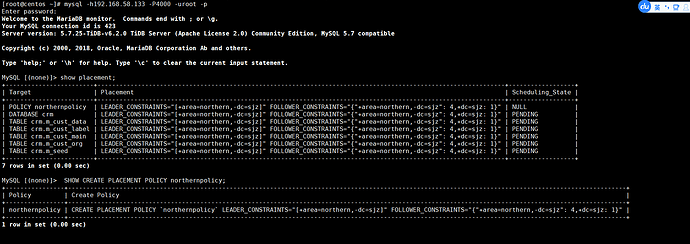

[root@centos ~]# mysql -h192.168.58.133 -P4000 -uroot -p

Enter password:

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MySQL connection id is 405

Server version: 5.7.25-TiDB-v6.2.0 TiDB Server (Apache License 2.0) Community Edition, MySQL 5.7 compatible

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MySQL [(none)]> use crm

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [crm]> SHOW PLACEMENT LABELS;

+------+-------------------------------------------------------------------------------+

| Key | Values |

+------+-------------------------------------------------------------------------------+

| area | ["america", "europe", "northern"] |

| dc | ["bj1", "bj2", "germany", "sjz", "usa"] |

| host | ["host100", "host101", "host102", "host103", "host104", "host105", "host106"] |

| rack | ["r1", "r2"] |

+------+-------------------------------------------------------------------------------+

4 rows in set (0.00 sec)

MySQL [crm]> show placement;

+------------------------+---------------------------------------------------------------------------------------------------------------+------------------+

| Target | Placement | Scheduling_State |

+------------------------+---------------------------------------------------------------------------------------------------------------+------------------+

| POLICY northernpolicy | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | NULL |

| DATABASE crm | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

| TABLE crm.m_cust_data | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

| TABLE crm.m_cust_label | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

| TABLE crm.m_cust_main | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

| TABLE crm.m_cust_org | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

| TABLE crm.m_seed | LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" | PENDING |

+------------------------+---------------------------------------------------------------------------------------------------------------+------------------+

7 rows in set (0.00 sec)

MySQL [crm]> select * from information_schema.placement_policies;

+-----------+--------------+----------------+----------------+---------+-------------+--------------------------+------------------------------------------+---------------------+----------+-----------+----------+

| POLICY_ID | CATALOG_NAME | POLICY_NAME | PRIMARY_REGION | REGIONS | CONSTRAINTS | LEADER_CONSTRAINTS | FOLLOWER_CONSTRAINTS | LEARNER_CONSTRAINTS | SCHEDULE | FOLLOWERS | LEARNERS |

+-----------+--------------+----------------+----------------+---------+-------------+--------------------------+------------------------------------------+---------------------+----------+-----------+----------+

| 2 | def | northernpolicy | | | | [+area=northern,-dc=sjz] | {"+area=northern,-dc=sjz": 4,+dc=sjz: 1} | | | 2 | 0 |

+-----------+--------------+----------------+----------------+---------+-------------+--------------------------+------------------------------------------+---------------------+----------+-----------+----------+

1 row in set (0.00 sec)

MySQL [crm]> select a.region_id,a.peer_id,a.store_id,a.is_leader,b.address,b.label from INFORMATION_SCHEMA.TIKV_REGION_PEERS a

-> left join INFORMATION_SCHEMA.TIKV_STORE_STATUS b on a.store_id =b.store_id

-> where a.region_id =218;

+-----------+---------+----------+-----------+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------+

| region_id | peer_id | store_id | is_leader | address | label |

+-----------+---------+----------+-----------+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------+

| 218 | 219 | 1 | 1 | 192.168.58.133:20163 | [{"key": "area", "value": "northern"}, {"key": "rack", "value": "r2"}, {"key": "host", "value": "host103"}, {"key": "dc", "value": "bj2"}] |

| 218 | 220 | 2 | 0 | 192.168.58.133:20160 | [{"key": "area", "value": "northern"}, {"key": "rack", "value": "r1"}, {"key": "host", "value": "host100"}, {"key": "dc", "value": "bj1"}] |

| 218 | 221 | 8 | 0 | 192.168.58.133:20164 | [{"key": "area", "value": "northern"}, {"key": "rack", "value": "r1"}, {"key": "host", "value": "host104"}, {"key": "dc", "value": "sjz"}] |

| 218 | 222 | 9 | 0 | 192.168.58.133:20161 | [{"key": "area", "value": "northern"}, {"key": "rack", "value": "r2"}, {"key": "host", "value": "host101"}, {"key": "dc", "value": "bj1"}] |

| 218 | 223 | 7 | 0 | 192.168.58.133:20162 | [{"key": "area", "value": "northern"}, {"key": "rack", "value": "r1"}, {"key": "host", "value": "host102"}, {"key": "dc", "value": "bj2"}] |

+-----------+---------+----------+-----------+----------------------+--------------------------------------------------------------------------------------------------------------------------------------------+

5 rows in set (0.00 sec)

MySQL [crm]> show create PLACEMENT POLICY northernpolicy;

+----------------+--------------------------------------------------------------------------------------------------------------------------------------------------------+

| Policy | Create Policy |

+----------------+--------------------------------------------------------------------------------------------------------------------------------------------------------+

| northernpolicy | CREATE PLACEMENT POLICY `northernpolicy` LEADER_CONSTRAINTS="[+area=northern,-dc=sjz]" FOLLOWER_CONSTRAINTS="{"+area=northern,-dc=sjz": 4,+dc=sjz: 1}" |

+----------------+--------------------------------------------------------------------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

MySQL [crm]> ALTER PLACEMENT POLICY northernpolicy LEADER_CONSTRAINTS='[+area=northern,-dc=sjz]' FOLLOWER_CONSTRAINTS='{"+area=northern,-dc=sjz": 4,+dc=sjz: 1,+dc=europe: 1,+dc=america: 1}';

ERROR 8243 (HY000): "[PD:placement:ErrRuleContent]invalid rule content, rule 'table_rule_72_3' from rule group 'TiDB_DDL_72' can not match any store"

MySQL [crm]> ALTER PLACEMENT POLICY northernpolicy LEADER_CONSTRAINTS='[+area=northern,-dc=sjz]' FOLLOWER_CONSTRAINTS='{"+area=northern,-dc=sjz": 3,+dc=sjz: 1,+dc=europe: 1,+dc=america: 1}';

ERROR 8243 (HY000): "[PD:placement:ErrRuleContent]invalid rule content, rule 'table_rule_72_3' from rule group 'TiDB_DDL_72' can not match any store"

MySQL [crm]> ALTER PLACEMENT POLICY northernpolicy LEADER_CONSTRAINTS='[+area=northern,-dc=sjz]' FOLLOWER_CONSTRAINTS='{"+area=northern,-dc=sjz": 1,+dc=sjz: 1,+dc=europe: 1,+dc=america: 1}';

ERROR 8243 (HY000): "[PD:placement:ErrRuleContent]invalid rule content, rule 'table_rule_72_2' from rule group 'TiDB_DDL_72' can not match any store"
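One way to narrow this down is to compare each `+key=value` constraint in the failing statements against the values listed by `SHOW PLACEMENT LABELS`. The sketch below is only a rough local check, not PD's actual matching logic: the label values and constraint groups are copied by hand from the output above, and the helper names (`unmatched_constraints`, `check_groups`) are hypothetical. A value that exists for the key is necessary for a match but not sufficient, because PD needs a single store that satisfies every constraint in the group.

```python
# Label values copied by hand from the SHOW PLACEMENT LABELS output above.
KNOWN_LABELS = {
    "area": {"america", "europe", "northern"},
    "dc": {"bj1", "bj2", "germany", "sjz", "usa"},
    "host": {"host100", "host101", "host102", "host103",
             "host104", "host105", "host106"},
    "rack": {"r1", "r2"},
}

# Follower constraint groups used in the failing ALTER PLACEMENT POLICY statements.
FOLLOWER_GROUPS = [
    "+area=northern,-dc=sjz",
    "+dc=sjz",
    "+dc=europe",
    "+dc=america",
]


def unmatched_constraints(group, known):
    """Return the '+key=value' items in `group` whose value is not listed for that key."""
    missing = []
    for item in group.split(","):
        item = item.strip()
        if not item.startswith("+"):
            # '-key=value' excludes stores, so it never requires a value to exist.
            continue
        key, _, value = item[1:].partition("=")
        if value not in known.get(key, set()):
            missing.append(item)
    return missing


def check_groups(groups, known):
    """Map every constraint group to the list of its unmatched '+' constraints."""
    return {group: unmatched_constraints(group, known) for group in groups}


if __name__ == "__main__":
    for group, missing in check_groups(FOLLOWER_GROUPS, KNOWN_LABELS).items():
        status = "ok" if not missing else f"no listed label value for {missing}"
        print(f"{group!r}: {status}")
```

With the values above, this flags `+dc=europe` and `+dc=america`: `europe` and `america` are listed under `area`, while `dc` lists `germany` and `usa`. That may be why PD reports that a rule "can not match any store", so it is probably worth checking whether those constraints were meant to use `area` or a different `dc` value.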