Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiDB 7.1.0: two TiFlash nodes fail to start after OOM

[TiDB Usage Environment] Production Environment

[TiDB Version] 7.1.0

[Reproduction Path] None

[Encountered Problem: Problem Phenomenon and Impact]

SQL queries run by the business workload drove TiFlash into OOM, and afterward TiFlash could not start.

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachment: Screenshot/Log/Monitoring]

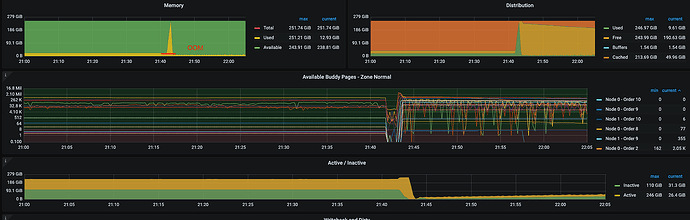

- 21:42:59 OOM

```
Oct 30 21:42:59 bj1 kernel: titanagent invoked oom-killer: gfp_mask=0x201da, order=0, oom_score_adj=0
```

- 21:44:18 TiFlash proxy log (`tiflash_tikv.log`): failed to send extra message

```
[2023/10/30 21:44:18.211 +08:00] [ERROR] [peer.rs:5327] ["failed to send extra message"] [err_code=KV:Raftstore:Transport] [err=Transport(Full)] [target="id: 1325743182 store_id: 1308966732"] [peer_id=812270151] [region_id=812270149] [type=MsgHibernateResponse]
[2023/10/30 21:44:18.211 +08:00] [INFO] [region.rs:103] [" 77:1149776626 1149776627, peer created"] [is_replicated=false] [role=Follower] [leader_id=1432444715] [region_id=1149776626]
[2023/10/30 21:44:18.211 +08:00] [ERROR] [peer.rs:5327] ["failed to send extra message"] [err_code=KV:Raftstore:Transport] [err=Transport(Full)] [target="id: 1440643427 store_id: 1308966732"] [peer_id=765197064] [region_id=765197061] [type=MsgHibernateResponse]
[2023/10/30 21:44:18.211 +08:00] [INFO] [region.rs:103] [" 77:197836700 197836701, peer created"] [is_replicated=false] [role=Follower] [leader_id=1391682002] [region_id=197836700]
[2023/10/30 21:44:18.211 +08:00] [INFO] [command.rs:88] ["can't flush data, filter CompactLog"] [compact_term=53] [compact_index=677618] [term=53] [index=677620] [region_epoch="conf_ver: 1279 version: 11688"] [region_id=39379938]
[2023/10/30 21:44:18.212 +08:00] [INFO] [command.rs:144] ["observe useless admin command"] [type=CompactLog] [index=638598] [term=54] [peer_id=191147862] [region_id=191147861]
[2023/10/30 21:44:18.212 +08:00] [INFO] [command.rs:229] ["should persist admin"] [state="applied_index: 638598 commit_index: 638805 commit_term: 54 truncated_state { index: 638596 term: 54 }"] [peer_id=191147862] [region_id=191147861]
[2023/10/30 21:44:18.214 +08:00] [INFO] [command.rs:144] ["observe useless admin command"] [type=CompactLog] [index=777182] [term=64] [peer_id=191121358] [region_id=191121357]
```
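Both `failed to send extra message` errors in this excerpt carry `err=Transport(Full)` and target the same `store_id: 1308966732`. When the full `tiflash_tikv.log` is much longer, a small script can tally which stores these errors point at. The following is only a sketch, not an official TiDB tool: `parse_line` and `count_transport_full_targets` are hypothetical helper names, and the regex assumes the bracketed `[field] [key=value]` layout seen in the lines above (time, level, source, quoted message, then key=value pairs).

```python
import re
from collections import Counter

# One bracketed field: unquoted text without ']' or '"', or a quoted string
# (which may itself contain ']' or escaped quotes). Assumes the layout shown above.
FIELD_RE = re.compile(r'\[((?:[^\]"]|"(?:[^"\\]|\\.)*")*)\]')


def parse_line(line):
    """Split one log line into header fields plus a dict of key=value pairs."""
    fields = FIELD_RE.findall(line)
    if len(fields) < 4:
        return None  # not a line in the expected layout
    time, level, source, message = fields[:4]
    entry = {"time": time, "level": level, "source": source,
             "message": message.strip('"')}
    for field in fields[4:]:
        key, sep, value = field.partition("=")
        if sep:
            entry[key] = value.strip('"')  # drop surrounding quotes, if any
    return entry


def count_transport_full_targets(lines):
    """Count Transport(Full) send failures per target store_id."""
    counts = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry and entry.get("err") == "Transport(Full)":
            match = re.search(r"store_id: (\d+)", entry.get("target", ""))
            if match:
                counts[match.group(1)] += 1
    return counts


# Three lines copied from the excerpt above.
LOG = [
    '[2023/10/30 21:44:18.211 +08:00] [ERROR] [peer.rs:5327] ["failed to send extra message"] [err_code=KV:Raftstore:Transport] [err=Transport(Full)] [target="id: 1325743182 store_id: 1308966732"] [peer_id=812270151] [region_id=812270149] [type=MsgHibernateResponse]',
    '[2023/10/30 21:44:18.211 +08:00] [ERROR] [peer.rs:5327] ["failed to send extra message"] [err_code=KV:Raftstore:Transport] [err=Transport(Full)] [target="id: 1440643427 store_id: 1308966732"] [peer_id=765197064] [region_id=765197061] [type=MsgHibernateResponse]',
    '[2023/10/30 21:44:18.212 +08:00] [INFO] [command.rs:144] ["observe useless admin command"] [type=CompactLog] [index=638598] [term=54] [peer_id=191147862] [region_id=191147861]',
]

print(count_transport_full_targets(LOG))  # Counter({'1308966732': 2})
```

To scan a real log, pass an open file object (for example `open("tiflash_tikv.log")`) instead of `LOG`; lines that do not match the assumed layout are skipped.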

- 23:05:51 TiFlash error log: Cannot read all data

```
[2023/10/30 23:05:51.485 +08:00] [ERROR] [Exception.cpp:90] ["Code: 33, e.displayText() = DB::Exception: Cannot read all data, e.what() = DB::Exception, Stack trace:\n\n\n 0x1bfe42e\tDB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int) [tiflash+29353006]\n \tdbms/src/Common/Exception.h:46\n 0x1c84ff0\tDB::ReadBuffer::readStrict(char*, unsigned long) [tiflash+29904880]\n \tdbms/src/IO/ReadBuffer.h:161\n 0x73bcadf\tDB::DM::readSegmentMetaInfo(DB::ReadBuffer&, DB::DM::Segment::SegmentMetaInfo&) [tiflash+121359071]\n \tdbms/src/Storages/DeltaMerge/Segment.cpp:318\n 0x73bcd48\tDB::DM::Segment::restoreSegment(std::__1::shared_ptr<DB::Logger> const&, DB::DM::DMContext&, unsigned long) [tiflash+121359688]\n \tdbms/src/Storages/DeltaMerge/Segment.cpp:349\n 0x7348013\tDB::DM::DeltaMergeStore::DeltaMergeStore(DB::Context&, bool, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, unsigned int, long, bool, std::__1::vector<DB::DM::ColumnDefine, std::__1::allocator<DB::DM::ColumnDefine> > const&, DB::DM::ColumnDefine const&, bool, unsigned long, DB::DM::DeltaMergeStore::Settings const&, DB::ThreadPoolImpl<DB::ThreadFromGlobalPoolImpl<false> >*) [tiflash+120881171]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeStore.cpp:303\n 0x7e5ad37\tDB::StorageDeltaMerge::getAndMaybeInitStore(DB::ThreadPoolImpl<DB::ThreadFromGlobalPoolImpl<false> >*) [tiflash+132492599]\n \tdbms/src/Storages/StorageDeltaMerge.cpp:1842\n 0x7e66d33\tDB::StorageDeltaMerge::getSchemaSnapshotAndBlockForDecoding(DB::TableDoubleLockHolder<false> const&, bool) [tiflash+132541747]\n \tdbms/src/Storages/StorageDeltaMerge.cpp:1204\n 0x7f7d614\tDB::writeRegionDataToStorage(DB::Context&, DB::RegionPtrWithBlock const&, std::__1::vector<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> >, std::__1::allocator<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> > > >&, std::__1::shared_ptr<DB::Logger> const&)::$_2::operator()(bool) const [tiflash+133682708]\n \tdbms/src/Storages/Transaction/PartitionStreams.cpp:126\n 0x7f7a18a\tDB::writeRegionDataToStorage(DB::Context&, DB::RegionPtrWithBlock const&, std::__1::vector<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> >, std::__1::allocator<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> > > >&, std::__1::shared_ptr<DB::Logger> const&) [tiflash+133669258]\n \tdbms/src/Storages/Transaction/PartitionStreams.cpp:181\n 0x7f79e4c\tDB::RegionTable::writeBlockByRegion(DB::Context&, DB::RegionPtrWithBlock const&, std::__1::vector<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> >, std::__1::allocator<std::__1::tuple<DB::RawTiDBPK, unsigned char, unsigned long, std::__1::shared_ptr<DB::StringObject<false> const> > > >&, std::__1::shared_ptr<DB::Logger> const&, bool) [tiflash+133668428]\n \tdbms/src/Storages/Transaction/PartitionStreams.cpp:359\n 0x7fa41ad\tDB::Region::handleWriteRaftCmd(DB::WriteCmdsView const&, unsigned long, unsigned long, DB::TMTContext&) [tiflash+133841325]\n \tdbms/src/Storages/Transaction/Region.cpp:721\n 0x7f620ce\tDB::KVStore::handleWriteRaftCmd(DB::WriteCmdsView const&, unsigned long, unsigned long, unsigned long, DB::TMTContext&) const [tiflash+133570766]\n \tdbms/src/Storages/Transaction/KVStore.cpp:300\n 0x7f82195\tHandleWriteRaftCmd [tiflash+133702037]\n \tdbms/src/Storages/Transaction/ProxyFFI.cpp:97\n 0x7f8e31e4fb98\t_$LT$engine_store_ffi..observer..TiFlashObserver$LT$T$C$ER$GT$$u20$as$u20$raftstore..coprocessor..QueryObserver$GT$::post_exec_que:
```