Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: tidb cdc 同步数据异常 (TiDB CDC data synchronization anomaly)

[TiDB Usage Environment] Test

[TiDB Version] v7.5.1

[Reproduction Path] Multiple inserted rows are merged together into a single Kafka message

[Encountered Issue: Symptoms and Impact]

Table creation statement:

CREATE TABLE ods.`ods_test` (

`id` int(11) NOT NULL,

`user_id` int(11) DEFAULT NULL COMMENT 'User ID',

`user_name` varchar(48) COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '',

`mysql_delete_type` int(11) NOT NULL DEFAULT '0' COMMENT 'MySQL data type',

PRIMARY KEY (`id`) /*T![clustered_index] CLUSTERED */

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_general_ci COMMENT='';

Changefeed configuration used when creating the CDC task:

case-sensitive = true

[filter]

rules = ['!*.*', 'ods.*']

[mounter]

worker-num = 16

[sink]

dispatchers = [

{matcher = ['*.*'], dispatcher = "table"}

]

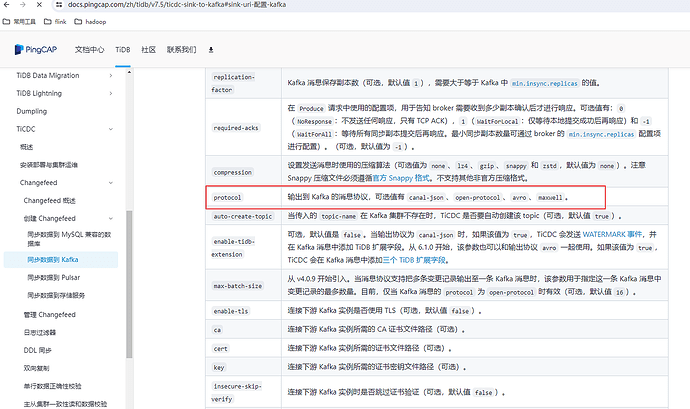

protocol = "maxwell"

Executing SQL:

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(1, 10749, '111', 0);

Data shown in Kafka:

{

"database": "ods",

"table": "ods_test",

"type": "insert",

"ts": 1719833732,

"data": {

"id": 1,

"mysql_delete_type": 0,

"user_id": 10749,

"user_name": "111"

}

}

Executing SQL again:

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(4, 10596, '555', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(5, 10749, '666', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(6, 10596, '777', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(7, 10749, '888', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(8, 10596, '999', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(9, 10749, '000', 0);

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(10, 10596, '111', 0);

Data shown in Kafka:

First message:

{

"database": "ods",

"table": "ods_test",

"type": "insert",

"ts": 1719833912,

"data": {

"id": 4,

"mysql_delete_type": 0,

"user_id": 10596,

"user_name": "555"

}

}

Second message (the remaining six rows are merged together):

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":5,"mysql_delete_type":0,"user_id":10749,"user_name":"666"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":6,"mysql_delete_type":0,"user_id":10596,"user_name":"777"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":7,"mysql_delete_type":0,"user_id":10749,"user_name":"888"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":8,"mysql_delete_type":0,"user_id":10596,"user_name":"999"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":9,"mysql_delete_type":0,"user_id":10749,"user_name":"000"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719833912,"data":{"id":10,"mysql_delete_type":0,"user_id":10596,"user_name":"111"}}

Change: set dispatcher = "ts", then clear the table data.

Executing SQL:

INSERT INTO ods.ods_test

(id, user_id, user_name, mysql_delete_type)

VALUES(2, 10596, '222', 0),

(3, 10749, '333', 0),

(4, 10596, '555', 0);

Kafka data (the three rows are still merged together):

{"database":"ods","table":"ods_test","type":"insert","ts":1719884437,"data":{"id":2,"mysql_delete_type":0,"user_id":10596,"user_name":"222"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719884437,"data":{"id":3,"mysql_delete_type":0,"user_id":10749,"user_name":"333"}}

{"database":"ods","table":"ods_test","type":"insert","ts":1719884437,"data":{"id":4,"mysql_delete_type":0,"user_id":10596,"user_name":"555"}}