Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.Original topic: 【TiDB 社区智慧合集】TiDB 数据库中常见的错误 & 解决方法

Introduction:

From the community, back to the community. Thanks to the contributions of various common issues & solutions by TiDBers in the 【TiDBer Chat Session 65】Win Ti Red Camping Five-piece Set, Share Common TiDB Errors & Solutions Without Brain Drain!, we have today’s post summary to help more TiDBers review and solve problems.

Common Error: Tikv memory grows uncontrollably and does not recede

Contributor: @xfworld

【Solution】:

- Check if the tikv version is the official compiled version; otherwise, refer to the Linux memory allocation scheme (wrong scheme selection leads to memory not being reclaimed in time).

- Insufficient disk IO, slow write speed, causing memory write to be faster than disk flush. Enable flow control to ensure memory and disk flush can be stable.

- Slow Query pushdown occupies resources for a long time and cannot be released in time; enable resource location to find these slow queries, optimize them, and reduce long-term resource occupation.

Common Error: When executing SQL, especially long-running SQL, sometimes an Information schema is changed error occurs

Contributor: @Jellybean

- Principle: TiDB uses the schema at the time of executing SQL statements to process the SQL statement, and TiDB supports online asynchronous DDL changes. Therefore, when executing DML, there may be DDL statements executing simultaneously, and you need to ensure that each SQL statement executes on the same schema. So, when executing DML, encountering an ongoing DDL operation may result in an Information schema is changed error.

- Reason 1: The table involved in the ongoing DML and the table in the ongoing DDL in the cluster are the same.

- Reason 2: The DML execution time is very long, during which many DDL statements were executed in the cluster, or TiDB could not load the

schema informationfor a long time due to network issues, causing theschemaversion change count to exceed the value oftidb_max_delta_schema_count.

【Solution】- Retry the SQL after failure; in most cases, it can recover.

- Check the network conditions between clusters to rule out network issues.

Common Error: Query uses hint but does not use tiflash, resulting in slow query. However, setting SESSION to tiflash manually results in fast query

Contributor: @TiDBer_pFFcXLgY

【Solution】:

Add hint to subqueries as well;

If the table’s statistics are inaccurate, manually analyze the table to collect statistics, and it may default to tiflash without adding a hint.

Common Error: When creating Pods for each component using TiDB operator, they often remain in Pending state, usually stuck at creating a certain component service.

Contributor: @lqbyz

【Solution】

Usually caused by insufficient resources. Specifically, you can check usingkubectl describe po -n ${namespace} ${pod_name}. Most reasons are due to PV volumes not being properly bound, which can be resolved by modifying or deleting them.

Common Error: TiDB service components frequently encounter CrashLoopBackOff

Contributor: @lqbyz

【Reason】

Caused by data or lack of permissions in the PV when writing to the database.

【Solution】

- Use

kubectl describe pod/$pod -n$nsto find initialization failure and restart. - Check the pod’s logs to find related errors, usually caused by related files during data initialization. New pods cannot write data.

- Unbind PV and PVC, delete data in PV, then rebind to resolve. Or directly delete data in the bound PV, kubelet will restart the container after two minutes, and the pod status will be running.

Common Error: Accessing PD reports error: TiKV cluster is not bootstrapped

Contributor: @天蓝色的小九

【Solution】:

Most PD APIs can only be used after initializing the TiKV cluster. If only PD is started when deploying a new cluster and TiKV is not started, accessing PD will report this error. To resolve this error, start the TiKV to complete the initialization, then access PD normally.

Common Error: Common errors during scaling down, a 3-node tikv cluster, one node crashes and cannot come up, scaling down remains in offline state and cannot be properly taken offline.

Contributor: @啦啦啦啦啦

【Reason】

To ensure three replicas, only two tikv nodes left will cause region replicas to be unschedulable, thus cannot be properly taken offline.

【Solution】

First, scale up a node to meet the condition of at least three nodes in the cluster, allowing region replicas to be schedulable, then scale down tikv.

Common Error: TiKV metric overload causes huge prometheus storage and multiple prometheus restarts

Contributor: @DBRE

【Reason】

Too many tikv metrics

【Solution】

Add the following lines to the prometheus configuration file under job:tikv

metric_relabel_configs:

- source_labels: [name]

separator: ;

regex: tikv_thread_nonvoluntary_context_switches|tikv_thread_voluntary_context_switches|tikv_threads_io_bytes_total

action: drop- source_labels: [name,name]

separator: ;

regex: tikv_thread_cpu_seconds_total;(tokio|rocksdb).+

action: drop

Common Error: drainer component sync delay: This issue is caused by high business volume, and the drainer process cannot handle it.

Contributor: @Soysauce520

【Solution】

Split drainer, scale up drainer, and use the parameter syncer.replicate-do-db to sync different databases separately.

config:

syncer.replicate-do-db

- database1, database2, database3

Common Error: Error 1105 - Out Of Memory Quota when executing SQL statements

Contributor: @ddhe9527

【Solution】

Set tidb_mem_quota_query to a sufficiently large value at the session level, for example:

set tidb_mem_quota_query=8589934592;

Common Error: Using TiDB Lightning to import data may fail due to the following situations:

Contributor: @tomxu

【Solution】

- Data source error: TiDB Lightning requires MySQL database dump files to be in a specific format, otherwise, it may fail. Use mydumper tool to generate compliant dump files or use other tools for data preprocessing to meet the requirements.

- Configuration error: TiDB Lightning’s configuration file needs to specify correct parameters, such as MySQL database address, port, username, password, etc. If the configuration is incorrect, the import will fail.

- Insufficient disk space: During the import process, TiDB Lightning temporarily stores the imported data on disk and deletes it after completion. If disk space is insufficient, the import will fail.

- TiDB cluster failure: If the TiDB cluster encounters issues during the import process, such as PD node crash, TiKV node crash, etc., the import will fail.

- Data conflict: If there is conflicting data in the original database, such as primary key conflict, the import will fail.

- Permission issues: TiDB Lightning requires specific permissions for the downstream database. If sufficient permissions are not granted, permission errors will occur during the import.

In summary, there can be many reasons for TiDB Lightning import failures, and troubleshooting should be based on specific situations. Check TiDB Lightning’s error messages and analyze the actual situation to resolve the issues step by step.

Common Error: Common issues and solutions during TiDB usage:

Contributor: @tomxu

【Solution】

- TiDB cluster cannot start normally:

Possible reasons include TiDB component configuration errors, physical machine hardware failures, TiDB version incompatibility, etc. Solutions require locating the problem based on specific error logs and troubleshooting step by step. - Poor TiDB cluster performance:

Possible reasons include insufficient TiDB performance tuning, data distribution imbalance due to write hotspots, etc. Solutions include using the TiUP Bench tool for benchmark testing and then optimizing accordingly. - TiDB import/export data failure:

Main issues include insufficient disk space, large data volume causing insufficient memory, unstable database connections, etc. Solutions include adding more disks or increasing nodes in the TiDB cluster to improve resource capacity, or splitting data into smaller batches for export. - TiDB cluster data corruption:

Possible reasons include disk failures, node crashes, operating system anomalies, etc. Solutions include using backups and restoring the cluster to resolve the issue. - Insufficient TiDB SQL optimization:

Possible reasons include unreasonable SQL writing, unreasonable table design, etc. Solutions include using the TiDB Query Profiling tool to analyze SQL query performance and optimize accordingly. - TiDB security risks:

Possible issues include privacy data leakage, insecure authentication, etc. Solutions include strengthening TiDB cluster security mechanisms, restricting certain permissions and operations, and protecting sensitive data.

Common Error: The size recorded by information_schema.tables.data_length is inconsistent with the store size on the TiKV monitoring panel

Contributor: @裤衩儿飞上天

【Reason】

Because they are calculated from different perspectives. information_schema.tables.data_length is an estimated value obtained through statistical information (average row size). The store size on the TiKV monitoring panel is the total size of data files (RocksDB’s SST files) of a single TiKV instance. Due to multiple versions and TiKV compressing data, the sizes displayed are different.

【Solution】

Refer to both methods according to your needs to get an approximate estimate.

Common Error: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure The last packet successfully received from the server was 9,303 milliseconds ago. The last packet sent successfully to the server was 9,303 milliseconds ago.

Contributor: @weixiaobing

【Reason】

The database connection validity period on the application side is longer than the database’s own set validity period.

【Solution】

- Modify druid configuration (if using druid)

spring.datasource.druid.validationQuery=select 1

spring.datasource.druid.testWhileIdle=true

spring.datasource.druid.testOnBorrow=true

spring.datasource.druid.testOnReturn=true

PS. This solution may have some impact on performance. - Modify database connection configuration

Add “&autoReconnect=true&failOverReadOnly=false” to the database connection configuration. - Modify database connection validity period

Set the database connection validity period to a longer time, such as 12 hours or 24 hours.

Common Error: TIKV server is busy

Contributor: @BraveChen

Usually caused by high tikv load or network load saturation. Confirm through monitoring and resolve by increasing network bandwidth or scaling up tikv nodes.

Common Error: High load despite low business volume, sometimes causing inexplicable slow SQL

Contributor: @db_user

【Troubleshooting】:

- Check analyze configuration.

- Check analyze execution status.

- Check the health of the tables involved in slow queries.

【Solution】:

If there are frequent unsuccessful analyzes, execute analyze manually.

If frequent analyze causes high load, adjust analyze time.

If analyze execution is slow for large tables, execute analyze manually at regular intervals using scripts.

Common Error: TiKV node logs occupy 80% of the disk space, modifying the log retention policy and deleting logs, but after a while, no writes are allowed due to no available space, but df -Th shows plenty of free space.

Contributor: @ffeenn

【Reason】

df -Th shows 50% free space, but df -i shows 100% inode usage, because inode was not released when deleting logs last time.

【Solution】

Stop the node and restart to fully release inode.

Common Error: An SQL query reports other error for mpp stream

Contributor: @LI-ldc

【Solution】:

set @@session.tidb_allow_mpp=0;

Common Error: Dashboard error: error.pd.client_request_failed: Request failed with status code 500 from PD API: “[PD:cluster:ErrNotBootstrapped]TiKV cluster not bootstrapped, please start TiKV first”

Contributor: @LI-ldc

【Solution】:

Switch dashboard address, simulate restarting the dashboard.

tiup cluster display tidb-app --dashboard # Check the current address

tiup ctl:v5.1.1 pd -u http://192.168.10.51:2379 config set dashboard-address http://192.168.10.52:2379 # Switch from 51 to 52

tiup ctl:v5.1.1 pd -u http://192.168.10.52:2379 config set dashboard-address http://192.168.10.51:2379 # Switch back

Common Error: group by prompts only_full_group_by

Contributor: @LI-ldc

【Solution】:

set global sql_mode=‘STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION’;

Common Error: When joining multiple tables, the SQL join order is inconsistent with expectations, resulting in slow queries

Contributor: @ealam_小羽

【Reason】:

TiDB table join defaults to the Join Reorder algorithm.

【Solution】:

UseSTRAIGHT_JOINto specify the join order.

Article: Column - A Slow Query of Multi-table Join Order——TiDB Join Feature | TiDB Community

Documentation: https://docs.pingcap.com/zh/tidb/stable/join-reorder#join-reorder-算法简介

Common Error: Previously using version 4.0, executing LOAD DATA did not report an error, but after upgrading to version 5.0+, LOAD DATA reports an error

ERROR 8004 (HY000) at line 1: Transaction is too large, size: 100000058

Contributor: @Hacker_hnSEntrA

【Reason】:

In early versions of TiDB, theLOAD DATAstatement committed every 20000 rows. The new version of TiDB defaults to committing all rows in one transaction. After upgrading from TiDB 4.0 or earlier versions, you may encounter the errorERROR 8004 (HY000) at line 1: Transaction is too large, size: 100000058.

【Solution】:

To resolve this issue, it is recommended to increase thetxn-total-size-limitvalue in thetidb.tomlfile. If this limit cannot be increased, you can set thetidb_dml_batch_sizevalue to20000to restore the behavior before the upgrade.

Common Error: tiup online upgrade of tidb components fails, showing timeout

Contributor: @半瓶醋仙

【Solution】

- Manually restart the database system.

- Network congestion, manually adjust bandwidth, upgrade during off-peak hours.

- Modify configuration.

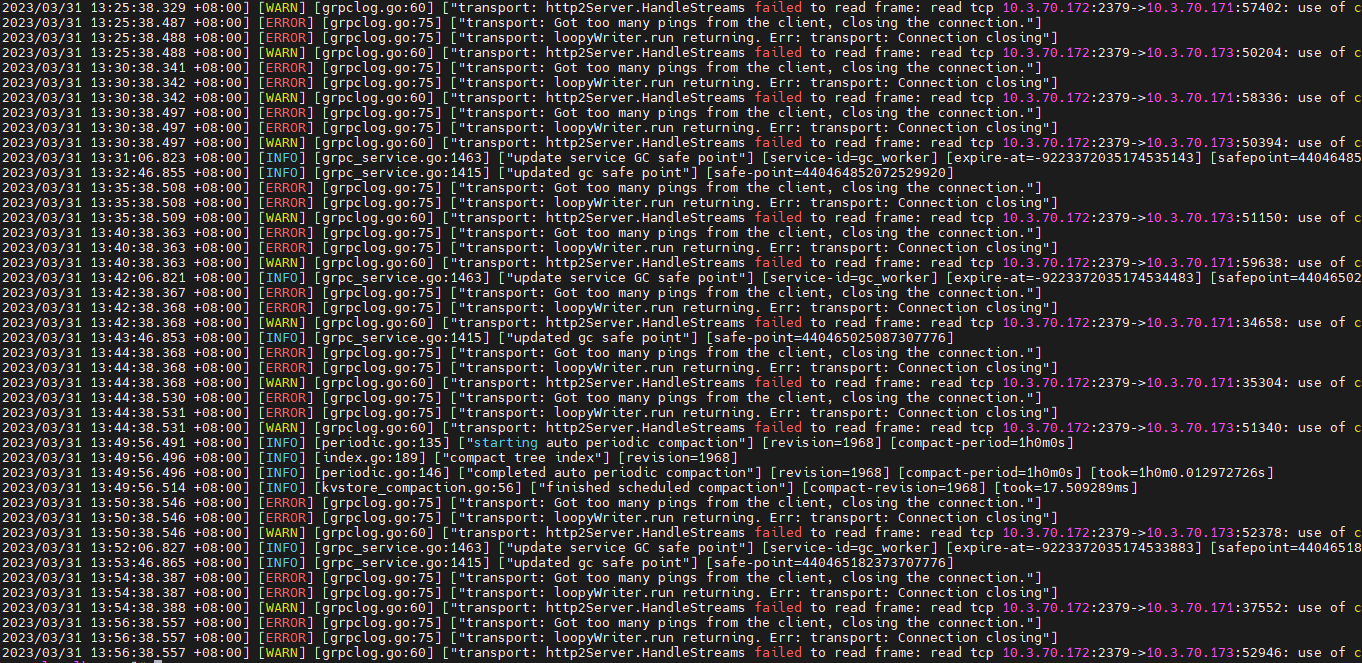

Common Error: transport: Got too many pings from the client, closing the connection.

Contributor: @xingzhenxiang

【Solution】

Ignore it, just pretend you didn’t see it.

Common Error: Error when adding or removing nodes

Contributor: @neolithic

【Solution】

Clean up tombstone nodes.

Common Error: Using visualization tools to copy data from other databases to tidb, reporting transaction too large sync failure

Contributor: @tidb菜鸟一只

【Solution】

Increase the txn-total-size-limit parameter of tidb, as this parameter defaults to 1G, and syncing slightly larger tables will report an error.

Common Error: TiKV frequently OOM and restarts when deploying TiDB on k8s

Contributor: [@tidb菜鸟一只]

【Solution】

Modify the storage.block-cache.capacity parameter of tikv, as this parameter defaults to 45% of memory, but on k8s, it defaults to 45% of the physical