Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiDB Dashboard Top SQL returns a 500 error

[TiDB Usage Environment] Production Environment / Testing / PoC

[TiDB Version] v6.5.1

[Reproduction Path] Operations performed that led to the issue

The issue appeared after the server restarted following a power outage.

[Encountered Issue: Problem Phenomenon and Impact]

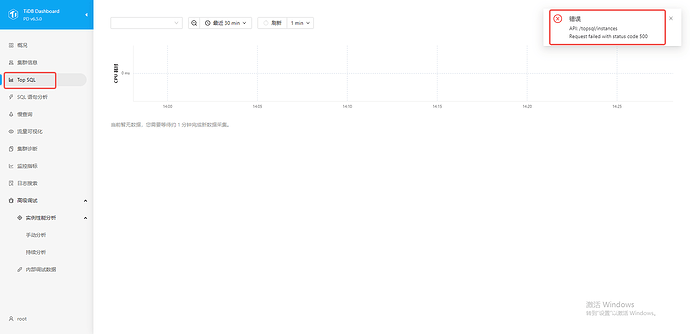

I wanted to view the Top SQL rankings, but the page displayed the following error instead:

Error

API: /topsql/instances

Request failed with status code 500

[Resource Configuration]

[Attachment: Screenshot/Log/Monitoring]

There are too many slow SQL statements. Try deleting or cleaning up the slow query log.

Isn't this data kept in memory? Wouldn't it be cleared after a restart?

I have cleared part of the slow log and deleted some TiDB log files, but the issue persists.

Check the PD logs. Ideally, switch the PD log level to debug first.

As I recall, switching the PD leader fixed this in a similar post. Give it a try.

To switch the PD leader in pd-ctl, run: member leader transfer pd3 (where pd3 is the name of the PD member that should become the new leader)

Here are some error logs.

Is it working normally now?

You can follow the suggestion above and switch the PD leader.

I switched the leader, but the issue still persists.

Here is the command and output from a successful switch:

» member leader transfer pd-10.20.60.133-2379

Success!

Use tiup cluster display <cluster_name> to check the status of PD and Dashboard.

This changes which node is the PD leader, though I'm not sure about the underlying logic.

Restart PD, then switch the leader.

A 500 error is generally a server issue. Use tiup cluster display <cluster-name> to check if all nodes in the cluster are functioning properly. Then, restart the problematic nodes. If that doesn’t work, try restarting the entire cluster service.

Try switching the PD leader and see whether that helps.

All nodes show as green and healthy. No issues there.

I switched the PD leader, but the problem remains. I also restarted the entire database cluster, and the problem still persists. I'm at a loss as to how to handle it.