Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tidb报错:[error=“[types:1406]Data Too Long, field len 40, data len 64”]

[TiDB Usage Environment] Production

[TiDB Version] v4.0.9

[Encountered Issues]

- TiDB frequently reports errors: Data Too Long

[2022/08/30 10:52:27.997 +08:00] [ERROR] [handle.go:187] [“[stats] error occurred when read table stats”] [table=log_need_retry_method] [error=“[types:1406]Data Too Long, field len 40, data len 64”]

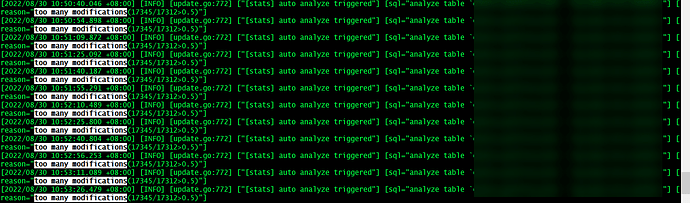

- A table is frequently being analyzed, but the data in the table hasn’t changed much.

[Reproduction Path] What operations were performed to cause the issue

None

[Attempted Fixes]

ALTER TABLE mysql.stats_buckets MODIFY upper_bound LONGBLOB NOT NULL;

ALTER TABLE mysql.stats_buckets MODIFY lower_bound LONGBLOB;

ALTER TABLE mysql.stats_histograms MODIFY last_analyze_pos LONGBLOB DEFAULT NULL;

[Issue Phenomenon and Impact] Yesterday, it caused TiDB’s memory to suddenly spike to its peak.

I saw this fix, but it seems that the targeted version is not 4.X.

Has there been any improvement after attempting the fix?

Try using this statement to check:

SELECT

b.TABLE_SCHEMA, b.table_name, ROUND((1 - a.modify_count / a.count) * 100, 0) AS healthy

FROM mysql.stats_meta a

LEFT JOIN INFORMATION_SCHEMA.TABLES b ON a.table_id = b.TIDB_TABLE_ID

WHERE b.TABLE_SCHEMA = ''

AND b.table_name = ''

ORDER BY table_id;

The issue remains the same after executing the command, it didn’t help at all.

After executing your command, it shows healthy is 100.

“Data Too Long, field len 40, data len 64” Has the table structure changed?

Is this database native? Or was it restored or synchronized from another database?

Has the encoding of the database or table changed? Or is the encoding of the database and table consistent? Are they all UTF8?

That should be normal, it shouldn’t trigger automatic analyze.

The database is native and has not undergone any changes.

This question is so strange.

I suspect there is an issue with this table.

If you need to urgently handle the issue online, you can try rebuilding the table, importing the data, and then renaming it.

It’s quite strange why this is happening, the table usage has no issues.

Is this SQL called by a program or executed manually from the command line?

If it’s executed from the command line, try adjusting the shell’s language (LANG or NLS_LANG) and see if that helps.

This appeared in the TiDB logs.

At present, it seems that the memory surge is not related to this error. I moved the table to the test environment, but there is no error in the test environment. It’s a bit strange.

Oh, so which problem needs to be solved? Solving the memory issue or capturing the profile?

How do you capture a profile?