Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: TiDB hot and cold data separation in practice, PD fails to schedule by label

[Problem Description and Impact]

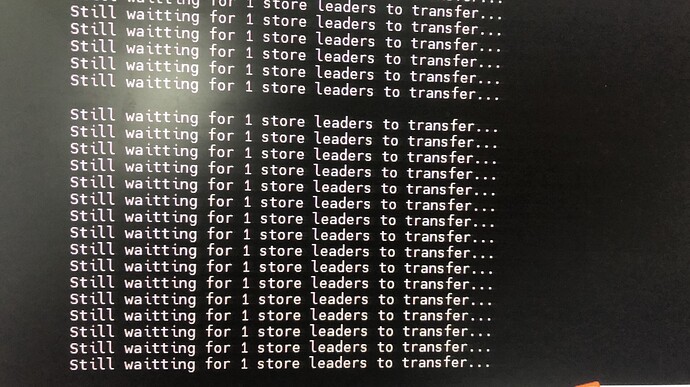

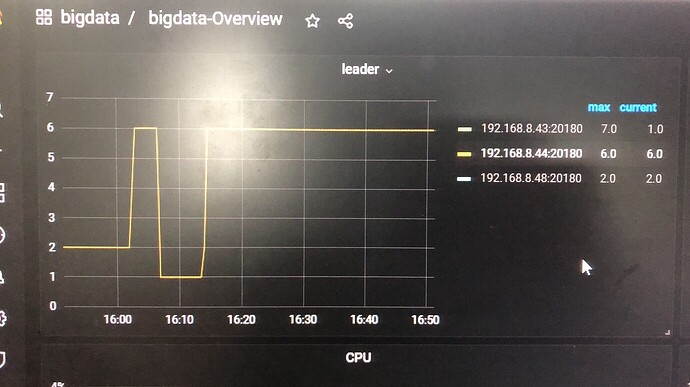

The monitoring results are shown in the figure:

However, after 5 minutes an "evicting leader store timeout…" error is reported, and the cluster's Region leaders are not scheduled as expected.

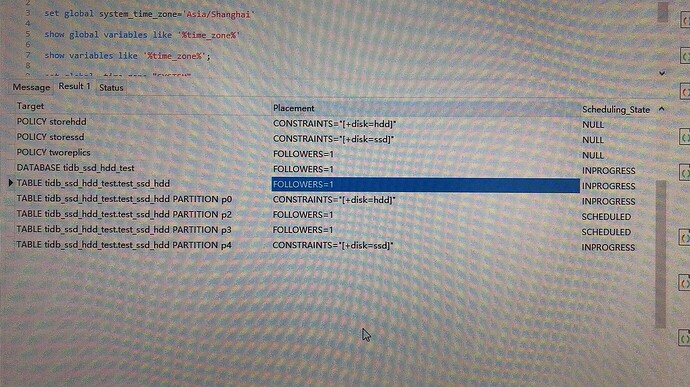

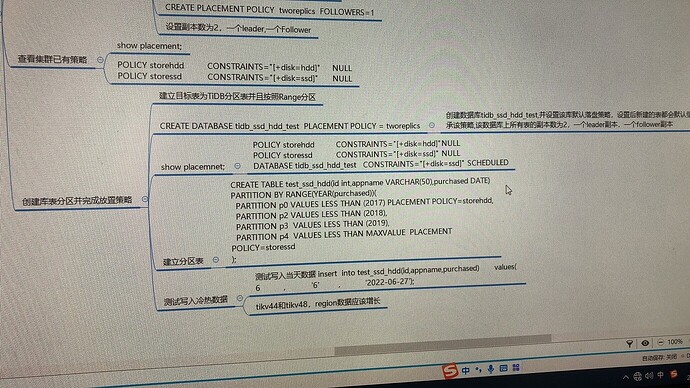

Connecting with a client and running the `SHOW PLACEMENT` statement gives the following result:

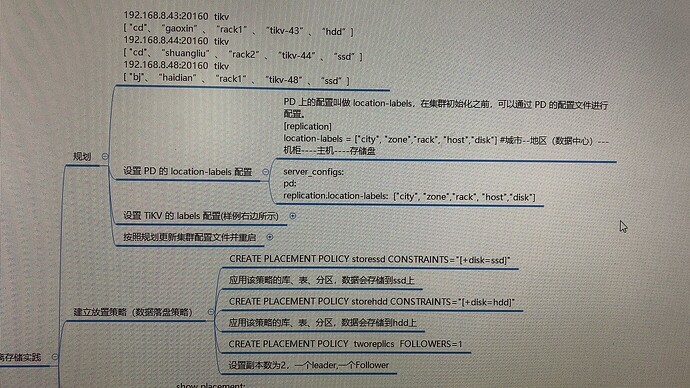

I built a TiDB cluster on a test machine, upgraded it to the latest version, 6.1.0, and set out to build a demo of hot and cold data separation. The steps were as follows:

- Plan the TiKV labels.

- Set PD’s location-labels.

- Modify the TiKV configuration according to the label plan, as shown in the figure below.

- After modifying the parameters, reload the cluster with `tiup cluster reload <cluster-name>`.

- Create the placement policies, as shown in the figure.

The actual results are shown in the figure:

The constraint probably restricts all replicas to either HDD or SSD, but there is only one HDD/SSD node while the replica count is 2, so the scheduling requirement cannot be met. Try LEADER_CONSTRAINTS instead.
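The reasoning in the reply above can be checked with a small model. This is only a sketch, not PD's actual scheduler: it assumes label constraints of the form `+key=value` / `-key=value`, assumes that at most one replica of a Region lives on a given store, and uses a hypothetical three-store layout (two `disk=ssd`, one `disk=hdd`) taken from the description in this thread.

```python
from typing import Dict, List


def matches(labels: Dict[str, str], constraints: List[str]) -> bool:
    """Return True if a store's labels satisfy every +key=value / -key=value constraint."""
    for constraint in constraints:
        sign, body = constraint[0], constraint[1:]
        if sign not in "+-" or "=" not in body:
            raise ValueError(f"unsupported constraint: {constraint!r}")
        key, value = body.split("=", 1)
        has_label = labels.get(key) == value
        if sign == "+" and not has_label:
            return False
        if sign == "-" and has_label:
            return False
    return True


def can_place(stores: List[Dict[str, str]], constraints: List[str], replicas: int) -> bool:
    """A placement is feasible only if enough distinct stores match the constraints."""
    eligible = [labels for labels in stores if matches(labels, constraints)]
    return len(eligible) >= replicas


# Hypothetical store labels mirroring the topology described in the thread.
stores = [{"disk": "ssd"}, {"disk": "ssd"}, {"disk": "hdd"}]

ssd_two_replicas = can_place(stores, ["+disk=ssd"], replicas=2)
hdd_two_replicas = can_place(stores, ["+disk=hdd"], replicas=2)
ssd_three_replicas = can_place(stores, ["+disk=ssd"], replicas=3)
```

Under these assumptions, two replicas fit on the SSD stores, but two replicas on HDD or three replicas on SSD do not, which is consistent with PD being unable to finish scheduling.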

In the TiKV cluster topology plan, there are two disks labeled SSD and one disk labeled HDD. I set the replica count to 2 (1 leader, 1 follower), and from what I can observe the replica count setting is taking effect. The intent of the placement policies is that the newest data is written to SSD and the oldest data to HDD. Using CONSTRAINTS="[+disk=ssd]" means that all replicas of the data must be placed on TiKV nodes whose disk label is ssd.

The SSD policy needs [+disk=ssd] together with FOLLOWERS=1. Your database-level policy sets FOLLOWERS=1, but it is overridden by the policies attached at the partition level, so FOLLOWERS=1 no longer applies and those partitions fall back to the default of 3 replicas.
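The override described above can be illustrated with a toy model. It is a simplified sketch, not TiDB's implementation: it assumes the most specific attached policy (partition, then table, then database) replaces the others wholesale, and that a policy which does not set FOLLOWERS falls back to 2 followers, i.e. the default of 3 replicas mentioned in the reply. The policy names `db_default` and `ssd_only` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: a policy that leaves FOLLOWERS unset gets 2 followers (3 replicas).
DEFAULT_FOLLOWERS = 2


@dataclass(frozen=True)
class Policy:
    name: str
    constraints: str = ""
    followers: Optional[int] = None  # None means FOLLOWERS was not specified

    def replicas(self) -> int:
        """Total replica count: one leader plus the followers."""
        followers = DEFAULT_FOLLOWERS if self.followers is None else self.followers
        return followers + 1


def effective_policy(
    database: Optional[Policy],
    table: Optional[Policy],
    partition: Optional[Policy],
) -> Optional[Policy]:
    """Pick the most specific policy; options are not merged across levels."""
    for policy in (partition, table, database):
        if policy is not None:
            return policy
    return None


# Database-level policy sets FOLLOWERS=1; the partition-level policy only sets constraints.
db_policy = Policy("db_default", followers=1)
partition_policy = Policy("ssd_only", constraints="[+disk=ssd]")

chosen = effective_policy(db_policy, None, partition_policy)
chosen_replicas = chosen.replicas()

# Repeating FOLLOWERS=1 on the partition-level policy restores the 2-replica layout.
fixed = Policy("ssd_only", constraints="[+disk=ssd]", followers=1)
fixed_replicas = effective_policy(db_policy, None, fixed).replicas()
```

In this model the partition ends up with 3 replicas restricted to SSD, which cannot be satisfied with only two SSD stores; setting FOLLOWERS=1 on the partition-level policy itself brings the count back to 2.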

Thank you, it was indeed a placement policy conflict.
