Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tidb不停oom (TiDB keeps running into OOM)

【TiDB Usage Environment】Production, Testing, Research

【TiDB Version】

【Encountered Problem】

【Reproduction Path】Operations performed that led to the problem

【Problem Phenomenon and Impact】

【Attachments】

Please provide the version of each component, such as cdc or tikv. You can get it by running `cdc version` or `tikv-server --version`.

Provide some information, such as logs and monitoring. We are not fortune-tellers and cannot predict things.

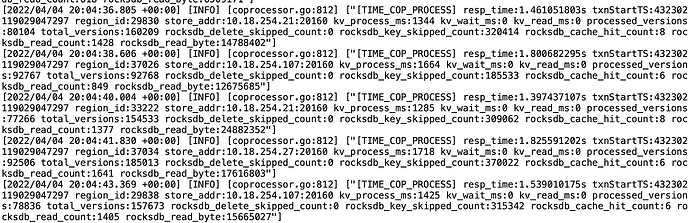

All TiDB nodes are frantically logging:

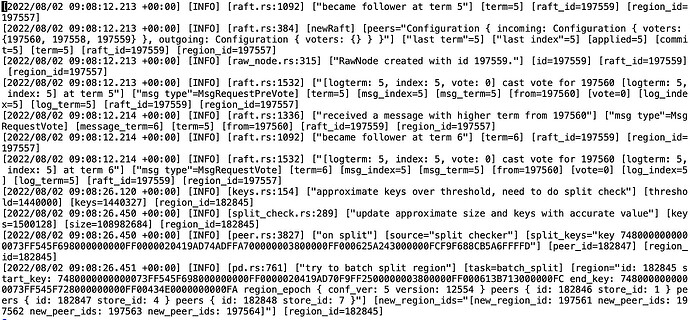

Logs from one of the TiKV nodes:

Well, it’s a long-standing issue. Upgrading from v5.2.1 to v5.2.2 doesn’t seem to fix it either, so we can only make do with it for now.

If TiDB encounters an OOM (Out of Memory) issue, there will be keywords in the logs. Find the location of the OOM and check the context around it.
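The "find the keyword and check the context" step could be sketched roughly as below. This is only an illustration: the helper name `find_with_context` is made up, and the thread does not say which keywords TiDB writes at OOM time, so the keywords are left as a parameter you would fill in for your version.

```python
from typing import Iterable, List, Tuple


def find_with_context(
    lines: List[str],
    keywords: Iterable[str],
    before: int = 3,
    after: int = 3,
) -> List[Tuple[int, List[str]]]:
    """Return (line_number, context_lines) for each line containing a keyword.

    Matching is case-insensitive and line_number is 1-based.
    The keywords are an assumption supplied by the caller, not a list
    taken from TiDB itself.
    """
    lowered = [k.lower() for k in keywords]
    hits = []
    for i, line in enumerate(lines):
        text = line.lower()
        if any(k in text for k in lowered):
            start = max(0, i - before)
            end = min(len(lines), i + after + 1)
            hits.append((i + 1, lines[start:end]))
    return hits
```

To use it, read the TiDB log file into a list of lines, call the function with whatever OOM-related keywords your logs contain, and read the few lines before and after each hit.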


Provide the current logs for TiDB. If it keeps encountering OOM (Out of Memory) issues, look for the logs at the time of the OOM events.

The TIME_COP_PROCESS entries that keep appearing in the TiDB logs indicate slow queries, and they will keep appearing as long as those queries run. As for the OOM issue you mentioned later, do you have the log entries that precede it? Look for error or warning messages.

Go through the slow queries and sort them by memory usage to see how much memory each one consumes.
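One way to do that sorting offline is sketched below. It assumes the slow log uses the usual layout where each entry starts with a `# Time:` line, carries fields such as `# Query_time:` and `# Mem_max:` on their own `# Key: value` lines, and ends with the SQL text. The function names are made up for illustration, so check the field names against your own slow log before relying on it.

```python
import re
from typing import Dict, List

# Matches simple "# Key: value" header lines (assumed slow-log layout).
FIELD_RE = re.compile(r"^# (\w+): (.*)$")


def parse_slow_log(text: str) -> List[Dict[str, str]]:
    """Split slow-log text into entries keyed by field name, plus 'sql'."""
    entries: List[Dict[str, str]] = []
    current = None
    for line in text.splitlines():
        if line.startswith("# Time:"):
            # A "# Time:" line is assumed to open a new entry.
            current = {"Time": line[len("# Time:"):].strip(), "sql": ""}
            entries.append(current)
        elif current is None:
            continue  # skip anything before the first entry
        elif line.startswith("# "):
            match = FIELD_RE.match(line)
            if match:
                current[match.group(1)] = match.group(2).strip()
        elif line.strip():
            current["sql"] = (current["sql"] + " " + line.strip()).strip()
    return entries


def top_by_memory(entries: List[Dict[str, str]], n: int = 10) -> List[Dict[str, str]]:
    """Return the n entries with the largest Mem_max (missing values count as 0)."""
    def mem(entry: Dict[str, str]) -> int:
        try:
            return int(entry.get("Mem_max", "0"))
        except ValueError:
            return 0
    return sorted(entries, key=mem, reverse=True)[:n]
```

Feeding the slow log file's contents to `parse_slow_log` and then calling `top_by_memory` gives a short list of the statements to examine first.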

Please describe the issue according to this problem template~

It will be more efficient if you explain everything at once.

As I have said repeatedly, the log content is the same. Don’t worry about the timestamps.

Looking for OOM logs? It’s clearly a GC issue, a raft bug. Are you a source code developer?

The logs just keep scrolling, even when there’s no load. Do you have time to share your screen and take a look together?

I’m not official staff. You keep saying OOM, and then you post logs from a few months ago? That’s ridiculous. If you know it’s a GC issue, then tune it yourself. Why are you asking here if you’re so capable? What does GC have to do with Raft? Although I haven’t contributed much to the official source code, I’ve read almost all the code for TiKV, RocksDB, and PD. Why is it so difficult for you to post a log? I’m just an enthusiastic community member and have no obligation to solve your problems. You ask a question but don’t post logs or provide monitoring data. When asked for logs, you come up with all sorts of objections. In the future, solve your problems by reading the documentation yourself.

Previously the constant log output caused no problems, but now you are hitting OOM (Out of Memory) errors. So which logs should we look at, if not the current ones? “time_cop_process” means the coprocessor is taking too long to process requests, which indicates slow queries. As long as those queries keep running, the entry will keep being logged. This is not the root cause of the OOM. An OOM is caused by a sudden increase in memory usage or by memory usage exceeding the limit, so you need to check the current logs.
