Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tidblightning导入200GB数据失败

【TiDB Usage Environment】Testing environment

【TiDB Version】v6.1.0

【Problem Encountered】Failed to import 200GB data using tidb-lightning. The data was exported from MySQL 8 using dumpling.

【Reproduction Path】Execute the SQL import command: tiup tidb-lightning -config tidb-lightning.toml

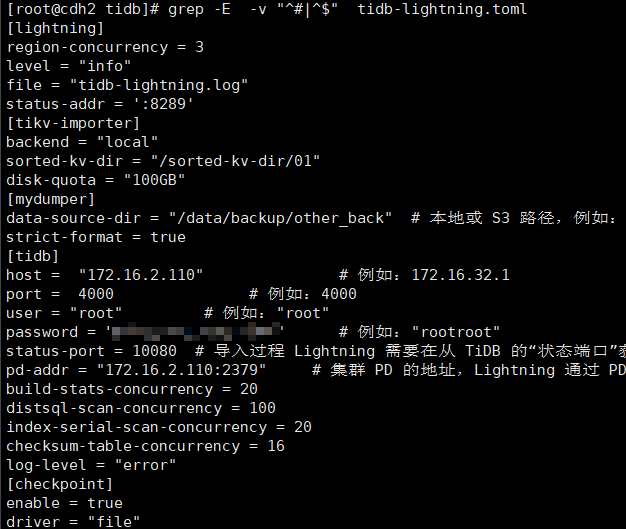

The tidb-lightning.toml configuration is as follows:

【Problem Phenomenon and Impact】

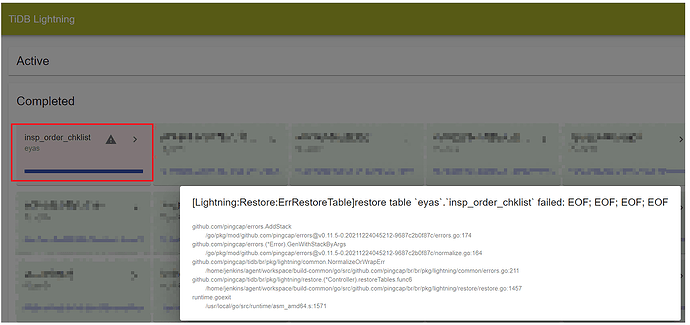

Importing over 200GB of data with tidb-lightning appears normal during data conversion but takes a long time. After conversion, the import to TiDB fails. Monitoring as shown in the image:

【Attachments】

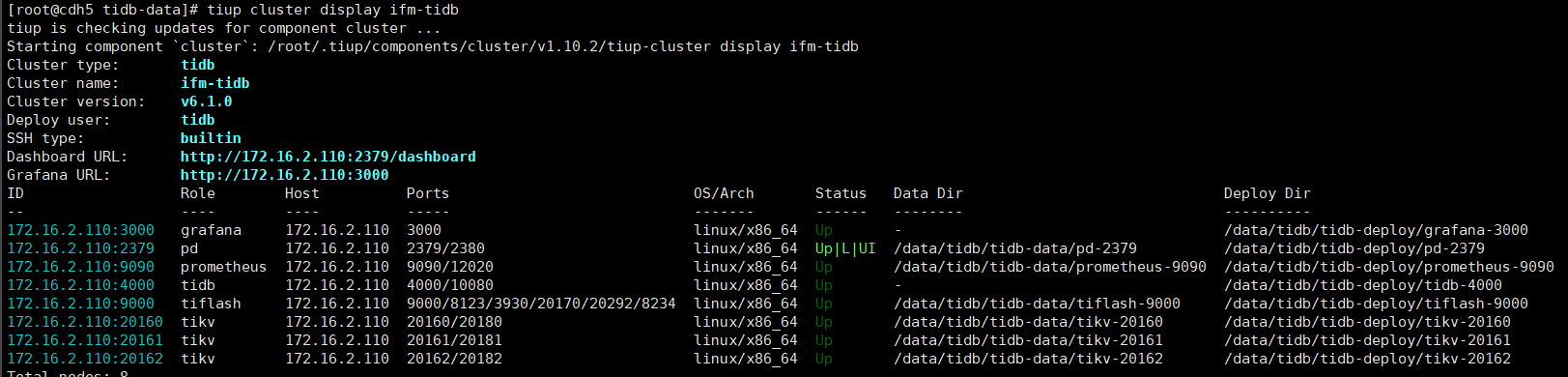

Deployment method referenced for single-machine simulated production environment deployment:

TiDB 数据库快速上手指南 | PingCAP 文档中心

For questions related to performance optimization and fault troubleshooting, please download the script and run it. Please select all and copy-paste the terminal output results for uploading.

Try restarting the cluster, it’s all timeouts. Also, I want to ask where you got your configuration file from. The disk-quota feature isn’t documented, right? (This value will affect import efficiency.)

This is mentioned in the documentation. I referred to the toml configuration in the operation documentation because during the import, the backup data storage disk and the sorting disk cannot use the same disk, otherwise, an error will occur. The error message suggests adding this configuration or changing the sorting disk. My partition doesn’t have much space (usually needs more than 200GB), so I limited it a bit.

The import speed is indeed slow. The total data size is over 400GB, with a single table size of over 200GB. The first time, both the backup data and TiDB were on the same server and on the same disk, which was slow and the import was unsuccessful. Later, I deployed the backup data and TiDB Lightning to other machines, but the import was still slow and unsuccessful.

Why do you need to restart TiDB? The status during the TiDB process is normal.

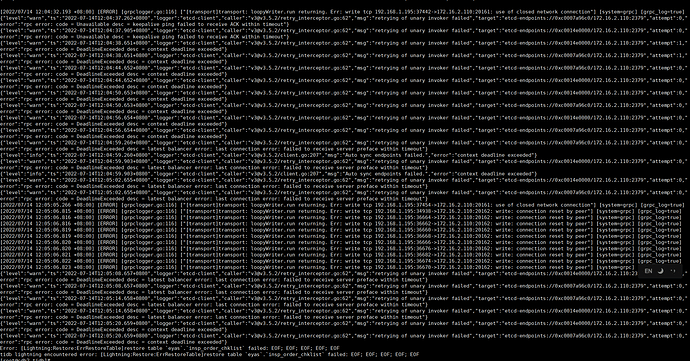

I don’t know where to modify the parameters for this timeout. The single table is over 200GB, and it is estimated that the client timed out during the import after the conversion is completed.

What format is the file you exported? Is it SQL or CSV?

The SQL format exported from MySQL 8 using Dumpling, other tables (less than 10G) were successfully imported, but this table (200G) failed to import.

200G, is the table too large? Try splitting the exported SQL file and see if that works?

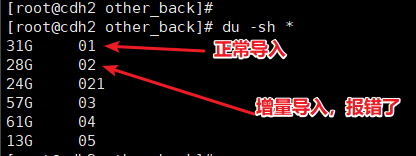

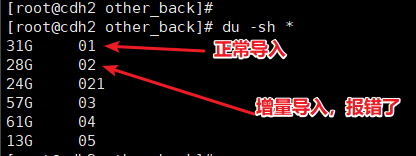

I tried splitting it into 5 parts. The first part, 30G, was successfully imported. The second part, 20G, using incremental import, failed with errors similar to the ones above. I tried several times. I’m not sure if incremental import is supported.

I saw that the community has parallel import, which seems to be divided by the number of rows, but I didn’t do it that way.

Are you saying that after importing 30GB initially, you encountered an error when importing an additional 20GB?

Which backend mode did you choose?

Yes, the import mode is all local. I will import it again later and take a screenshot.

The incremental import configuration has added the following line:

[tikv-importer]

backend = "local" // The import mode is all local

sorted-kv-dir = "/sorted-kv-dir/02"

disk-quota = "200GB"

incremental-import = true // Incremental import, otherwise an error will occur if the target table is not empty

The timeout mentioned above seems to be reported by PD. It doesn’t appear to be an issue within Lightning itself, nor can we tell which stage the import has reached. Could you provide the complete log? It’s difficult to solve the problem by guessing.

For the first import, use local. For subsequent incremental imports, you should choose tidb-backend.

Incremental import can be done using local.

I have another question. You exported an SQL file (insert), can the SQL file be executed directly?

Is the original data on the target still there?

Yes, otherwise how could it be called incremental import?

Yes, it should be possible. I didn’t think of that at the time and used the official Lightning tool to import it. The data volume is quite large. The source database is about 400GB, and this table alone is over 200GB. It seems the log indicates some kind of heartbeat disconnection, but I don’t know how to debug it. I couldn’t find related issues on the forum either. Currently attempting the first import.

Oh, I learned something new.