Version v4.0.0.

Steps

Error log information:

goroutine 479 [select]:

github.com/pingcap/tidb/domain.(*Domain).handleEvolvePlanTasksLoop.func1(0xc0003d3440, 0x3707560, 0xc00270c200)

/home/jenkins/agent/workspace/tidb_v4.0.0/go/src/github.com/pingcap/tidb/domain/domain.go:913 +0x1c1

created by github.com/pingcap/tidb/domain.(*Domain).handleEvolvePlanTasksLoop

/home/jenkins/agent/workspace/tidb_v4.0.0/go/src/github.com/pingcap/tidb/domain/domain.go:908 +0x73

goroutine 464 [select, 3 minutes]:

go.etcd.io/etcd/clientv3.(*lessor).keepAliveCtxCloser({"level":"warn","ts":"2022-08-09T11:41:47.308+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-e1f52210-d6f8-495d-a667-b2c01ae462e3/192.168.0.41:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-08-09T11:41:48.196+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-48902fbb-0317-4aa5-815b-a8c309ad3ed1/192.168.0.41:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

{"level":"warn","ts":"2022-08-09T11:41:48.312+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-e1f52210-d6f8-495d-a667-b2c01ae462e3/192.168.0.41:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

{"level":"warn","ts":"2022-08-09T11:57:42.942+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-b7122453-edc5-401e-9c15-f3b19a6957d0/192.168.0.41:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-08-09T11:57:50.062+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-b318aef7-2b8e-4d96-8c70-01cc8176f889/192.168.0.41:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-08-09T11:57:51.967+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-b318aef7-2b8e-4d96-8c70-01cc8176f889/192.168.0.41:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

{"level":"warn","ts":"2022-08-09T12:01:54.049+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-804d5f0a-1041-4a8f-b792-604a99da1e6e/192.168.0.41:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}

{"level":"warn","ts":"2022-08-09T12:01:54.934+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-68b7b320-1768-45ce-ae37-2607d87a9a83/192.168.0.41:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

{"level":"warn","ts":"2022-08-09T12:01:55.055+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"endpoint://client-804d5f0a-1041-4a8f-b792-604a99da1e6e/192.168.0.41:2379","attempt":0,"error":"rpc error: code = Unavailable desc = transport is closing"}

fatal error: runtime: out of memory

runtime stack:

fatal error: runtime: out of memory

runtime stack:

runtime.throw(0x318b551, 0x16)

/usr/local/go/src/runtime/panic.go:774 +0x72

runtime.sysMap(0xc720000000, 0x4000000, 0x51aae98)

/usr/local/go/src/runtime/mem_linux.go:169 +0xc5

runtime.(*mheap).sysAlloc(0x5190d60, 0x30000, 0xffffffff010dd5c9, 0x29dbff6)

/usr/local/go/src/runtime/malloc.go:701 +0x1cd

runtime.(*mheap).grow(0x5190d60, 0x18, 0xffffffff)

/usr/local/go/src/runtime/mheap.go:1252 +0x42

runtime.(*mheap).allocSpanLocked(0x5190d60, 0x18, 0x51aaea8, 0x4d15320)

/usr/local/go/src/runtime/mheap.go:1163 +0x291

runtime.(*mheap).alloc_m(0x5190d60, 0x18, 0x101, 0x0)

/usr/local/go/src/runtime/mheap.go:1015 +0xc2

runtime.(*mheap).alloc.func1()

/usr/local/go/src/runtime/mheap.go:1086 +0x4c

runtime.(*mheap).alloc(0x5190d60, 0x18, 0x7fdba5000101, 0x112b580)

/usr/local/go/src/runtime/mheap.go:1085 +0x8a

runtime.largeAlloc(0x30000, 0x1150100, 0xc71ffd4000)

/usr/local/go/src/runtime/malloc.go:1138 +0x97

runtime.mallocgc.func1()

/usr/local/go/src/runtime/malloc.go:1033 +0x46

runtime.systemstack(0x0)

/usr/local/go/src/runtime/asm_amd64.s:370 +0x66

runtime.mstart()

/usr/local/go/src/runtime/proc.go:1146
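
To make the hex arguments in the runtime stack above easier to read, here is a rough sketch that converts them to sizes. It rests on assumptions about the Go runtime of this era that I have not verified against the exact build: that the first argument of `runtime.largeAlloc` is the requested size in bytes, that the second argument of `runtime.sysMap` is the mapping length in bytes, that the second argument of `mheap.grow` is a page count, and that a runtime page is 8 KiB. The helper names `pagesToBytes` and `human` are made up for illustration.

```go
package main

import "fmt"

// pageSize is the assumed Go runtime page size (8 KiB) on linux/amd64.
const pageSize = 8 << 10

// pagesToBytes converts a runtime page count into bytes.
func pagesToBytes(npages uint64) uint64 { return npages * pageSize }

// human formats a byte count as whole MiB when it is at least 1 MiB,
// otherwise as whole KiB.
func human(n uint64) string {
	if n >= 1<<20 {
		return fmt.Sprintf("%d MiB", n>>20)
	}
	return fmt.Sprintf("%d KiB", n>>10)
}

func main() {
	// Values copied from the stack trace in this post.
	fmt.Println("largeAlloc size:", human(0x30000))             // requested object size
	fmt.Println("mheap.grow pages:", human(pagesToBytes(0x18))) // same request, counted in pages
	fmt.Println("sysMap length:", human(0x4000000))             // region the runtime tried to map
}
```

If those assumptions hold, the request that failed was only 192 KiB, and the failure happened while the runtime was mapping a fresh 64 MiB region from the OS. That would suggest the process as a whole had run out of memory, not that a single huge allocation was rejected.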

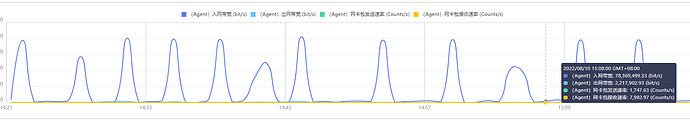

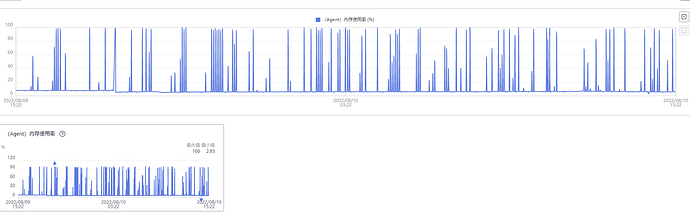

High memory usage causes tidb-server to restart automatically. This happens at random on any of the three machines.

Server configuration:

pd_servers, tidb_servers: 16 cores, 32G RAM, 500G SSD (3 machines)

tikv_servers: 16 cores, 32G RAM, 1T SSD (3 machines)