Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: PD同时重启后TIDB启动了新的集群

[TiDB Usage Environment]

Testing environment: Deployed with tidb-operator v5.3.0

[Phenomenon]

Three PD nodes in the testing environment were deployed on the same machine. After the machine failed, the PD cluster restarted on another machine. The PVC used by TiKV did not change, but all data was lost, and a new TiDB cluster was started (“critical error” [error="get timestamp failed: rpc error: code = FailedPrecondition desc = mismatch cluster id, need 7122651197468007202 but got 7054113717097513058).

Can the data be recovered, and what exactly is the issue?

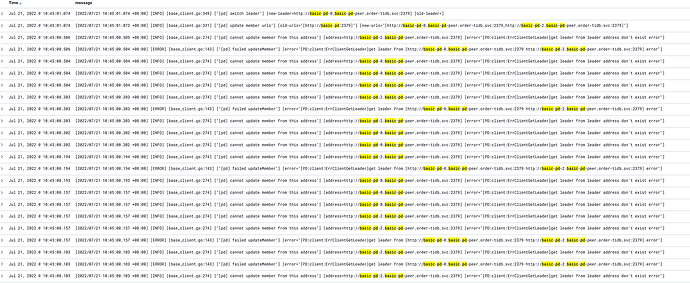

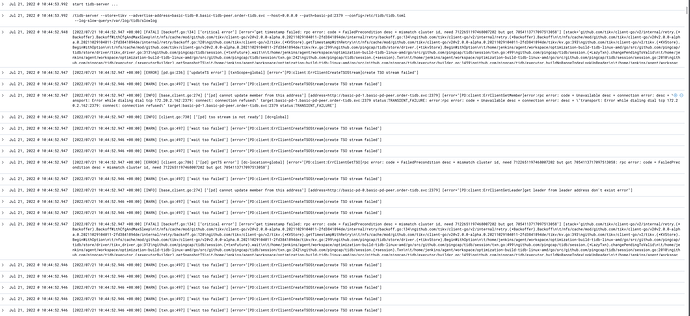

[Logs]

Logs were written to Kibana. Logs at the time of cluster restart:

You can try using pd-recover to rebuild the PD cluster

I referred to this (使用 PD Recover 恢复 PD 集群 | PingCAP 文档中心) and used 7054113717097513058 as the cluster_id to rebuild the PD cluster based on pd-recover, but the TiKV cluster failed to start…

[2022/07/21 14:52:27.557 +08:00] [ERROR] [server.rs:1052] [“failed to init io snooper”] [err_code=KV:Unknown] [err=“"IO snooper is not started due to not compiling with BCC"”]

[2022/07/21 14:52:27.668 +08:00] [INFO] [mod.rs:227] [“Storage started.”]

[2022/07/21 14:52:27.671 +08:00] [FATAL] [server.rs:732] [“failed to bootstrap node id: "[src/server/node.rs:241]: cluster ID mismatch, local 7122651197468007202 != remote 7054113717097513058, you are trying to connect to another cluster, please reconnect to the correct PD"”]

This is the correct cluster ID.

Region-related information is stored in the raft column family of each TiKV’s RocksDB data. If the data in PD is lost and you want to recover it, it’s not particularly difficult. Just increase the cluster ID, allocated region, and peer ID, and you should be able to recover the data.

Before performing pd-recover, from the PD monitoring panel and the command kubectl get tc ${cluster_name} -n ${namespace} -o='go-template={{.status.clusterID}}{{"\ "}}', it can be seen that the clusterID of the PD cluster is already 7122651197468007202. However, all table structures (including MySQL and account information) are missing.

Just follow what TiKV says, the data recorded in TiKV is accurate.

The cluster ID was specified incorrectly. Use 7122651197468007202.

It’s still not working. From the disk usage, the data is still there, but all the tables are gone. Could it be a different cluster ID?

Is there an error reported? It should be the cluster ID mentioned in the TiKV logs. Before rebuilding the PD cluster, you need to clear the PD data.

This topic was automatically closed 60 days after the last reply. No new replies are allowed.