Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: TiFlash restarts abnormally with "checksum not match"

[TiDB Usage Environment] Online

[TiDB Version] 6.1.0

[Encountered Problem]

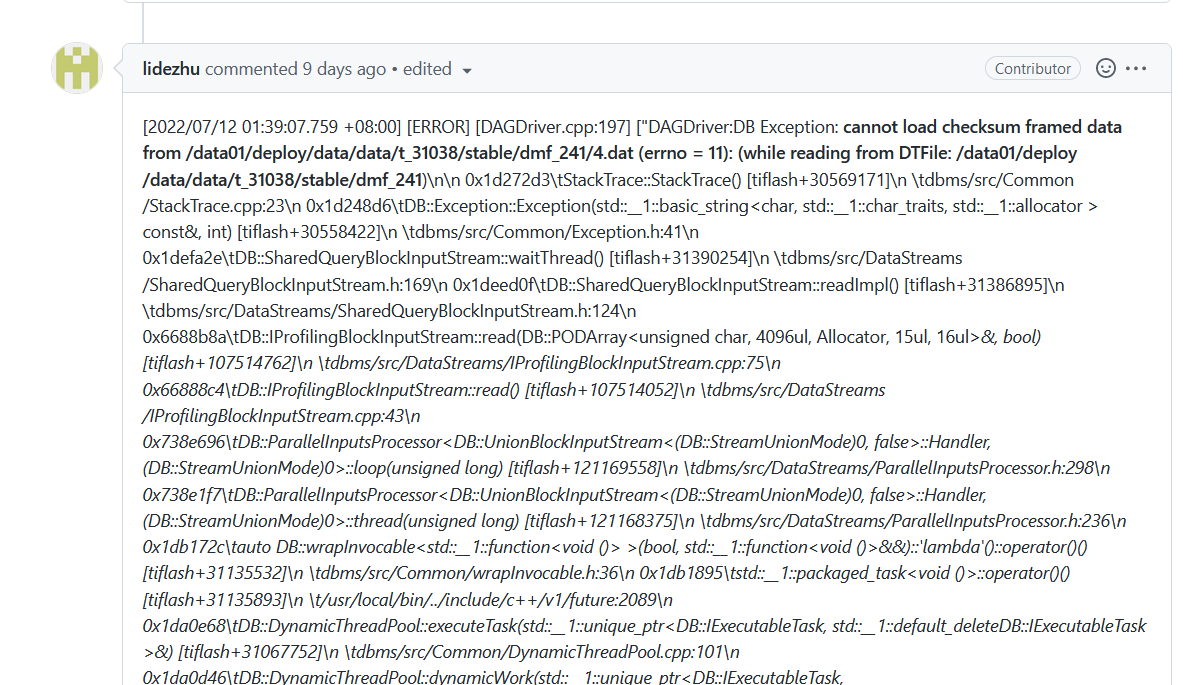

Previously, accessing the table in TiFlash resulted in an error. After the error, the replica count was set to 0, and the error was as follows (error only, no restart):

Now, after the TiFlash replica is re-enabled for this table, synchronization reaches about 50%, then TiFlash starts reporting errors and the service restarts continuously.

The error in tiflash.log is the same as above. Part of the log:

```
[2022/07/27 16:56:48.764 +08:00] [ERROR] [Exception.cpp:85] ["void DB::BackgroundProcessingPool::threadFunction():Code: 40, e.displayText() = DB::Exception: Page[167976] field[1] checksum not match, broken file: /data01/deploy/data/data/t_17630/log/page_56_0/page, expected: b0d1876a36fa4582, but: a3e70a0a2ff59556, e.what() = DB::Exception, Stack trace:\
0x1d272d3\tStackTrace::StackTrace() [tiflash+30569171]
dbms/src/Common/StackTrace.cpp:23
0x1d248d6\tDB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int) [tiflash+30558422]
dbms/src/Common/Exception.h:41
0x79f8633\tDB::PS::V2::PageFile::Reader::read(std::__1::vector<DB::PS::V2::PageFile::Reader::FieldReadInfo, std::__1::allocator<DB::PS::V2::PageFile::Reader::FieldReadInfo> >&, std::__1::shared_ptr<DB::ReadLimiter> const&) [tiflash+127895091]
dbms/src/Storages/Page/V2/PageFile.cpp:1050
0x7a0c089\tDB::PS::V2::PageStorage::readImpl(unsigned long, std::__1::vector<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > >, std::__1::allocator<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > > > > const&, std::__1::shared_ptr<DB::ReadLimiter> const&, std::__1::shared_ptr<DB::PageStorageSnapshot>, bool) [tiflash+127975561]
dbms/src/Storages/Page/V2/PageStorage.cpp:783
0x7a8fbf0\tDB::PageReaderImplNormal::read(std::__1::vector<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > >, std::__1::allocator<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > > > > const&) const [tiflash+128515056]
dbms/src/Storages/Page/PageStorage.cpp:113
0x7a8d3f2\tDB::PageReader::read(std::__1::vector<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > >, std::__1::allocator<std::__1::pair<unsigned long, std::__1::vector<unsigned long, std::__1::allocator<unsigned long> > > > > const&) const [tiflash+128504818]
dbms/src/Storages/Page/PageStorage.cpp:415
0x7898948\tDB::DM::ColumnFileTiny::readFromDisk(DB::PageReader const&, std::__1::vector<DB::DM::ColumnDefine, std::__1::allocator<DB::DM::ColumnDefine> > const&, unsigned long, unsigned long) const [tiflash+126454088]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileTiny.cpp:79
0x7899124\tDB::DM::ColumnFileTiny::fillColumns(DB::PageReader const&, std::__1::vector<DB::DM::ColumnDefine, std::__1::allocator<DB::DM::ColumnDefine> > const&, unsigned long, std::__1::vector<COWPtr<DB::IColumn>::immutable_ptr<DB::IColumn>, std::__1::allocator<COWPtr<DB::IColumn>::immutable_ptr<DB::IColumn> > >&) const [tiflash+126456100]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileTiny.cpp:115
0x789a3f6\tDB::DM::ColumnFileTinyReader::readRows(std::__1::vector<COWPtr<DB::IColumn>::mutable_ptr<DB::IColumn>, std::__1::allocator<COWPtr<DB::IColumn>::mutable_ptr<DB::IColumn> > >&, unsigned long, unsigned long, DB::DM::RowKeyRange const*) [tiflash+126460918]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileTiny.cpp:237
0x788fa13\tDB::DM::ColumnFileSetReader::readRows(std::__1::vector<COWPtr<DB::IColumn>::mutable_ptr<DB::IColumn>, std::__1::allocator<COWPtr<DB::IColumn>::mutable_ptr<DB::IColumn> > >&, unsigned long, unsigned long, DB::DM::RowKeyRange const*) [tiflash+126417427]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileSetReader.cpp:160
0x788f485\tDB::DM::ColumnFileSetReader::readPKVersion(unsigned long, unsigned long) [tiflash+126416005]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileSetReader.cpp:115
0x788fb51\tDB::DM::ColumnFileSetReader::getPlaceItems(std::__1::vector<DB::DM::BlockOrDelete, std::__1::allocator<DB::DM::BlockOrDelete> >&, unsigned long, unsigned long, unsigned long, unsigned long, unsigned long) [tiflash+126417745]
dbms/src/Storages/DeltaMerge/ColumnFile/ColumnFileSetReader.cpp:185
0x78c55c0\tDB::DM::DeltaValueReader::getPlaceItems(unsigne…
```
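The message `Page[167976] field[1] checksum not match ... expected: ..., but: ...` is raised by a read-time integrity check (`PageFile::Reader::read` in the stack). The Python sketch that follows illustrates the general idea under stated assumptions: each field of a page is stored together with a 64-bit checksum computed when it was written, and the reader recomputes the checksum over the bytes it reads back and raises if the two differ. `FieldInfo`, `checksum64` (BLAKE2b truncated to 8 bytes) and `read_field` are hypothetical stand-ins, not TiFlash's actual on-disk format or hash function.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class FieldInfo:
    """Hypothetical per-field metadata: location in the page file and the checksum stored at write time."""
    page_id: int
    field_index: int
    offset: int
    size: int
    expected_checksum: int  # 64-bit value recorded when the field was written


class ChecksumMismatch(Exception):
    """Raised when the bytes read back do not hash to the stored checksum."""


def checksum64(data: bytes) -> int:
    # Stand-in 64-bit hash (BLAKE2b truncated to 8 bytes); NOT TiFlash's actual hash function.
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "little")


def read_field(buf: bytes, info: FieldInfo, path: str) -> bytes:
    """Read one field and verify it, mirroring the shape of the TiFlash error message."""
    data = buf[info.offset : info.offset + info.size]
    actual = checksum64(data)
    if actual != info.expected_checksum:
        raise ChecksumMismatch(
            f"Page[{info.page_id}] field[{info.field_index}] checksum not match, "
            f"broken file: {path}, expected: {info.expected_checksum:016x}, but: {actual:016x}"
        )
    return data


if __name__ == "__main__":
    # Write two fields into an in-memory "page file" and record their checksums.
    field0, field1 = b"pk-and-version", b"column-values"
    page = bytearray(field0 + field1)
    infos = [
        FieldInfo(167976, 0, 0, len(field0), checksum64(field0)),
        FieldInfo(167976, 1, len(field0), len(field1), checksum64(field1)),
    ]
    path = "/hypothetical/page_file"
    assert read_field(bytes(page), infos[1], path) == field1  # intact data passes

    page[len(field0)] ^= 0xFF  # flip one byte of field[1], as silent on-disk corruption would
    try:
        read_field(bytes(page), infos[1], path)
    except ChecksumMismatch as err:
        print(err)
```

In this model, a mismatch only says that the bytes read back differ from the bytes that were checksummed (or that the stored checksum itself was damaged); the check alone cannot tell whether the disk, the filesystem, or the write path is responsible.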

/var/log/messages shows the following:

```
systemd: tiflash-9000.service: main process exited, code=killed, status=6/ABRT
systemd: Unit tiflash-9000.service entered failed state.
systemd: tiflash-9000.service failed.
systemd: tiflash-9000.service holdoff time over, scheduling restart.
systemd: Stopped tiflash service.
systemd: Started tiflash service.
bash: sync …
bash: real#0110m0.103s
bash: user#0110m0.000s
bash: sys#0110m0.074s
bash: ok
```
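In the systemd lines, `code=killed, status=6/ABRT` means the TiFlash main process was terminated by signal 6, which is SIGABRT on Linux. That signal typically comes from the process calling `abort()` (for example after an unhandled exception), although the syslog excerpt alone does not prove the cause. As a hedged sketch, the Python snippet that follows extracts the terminating signal and counts scheduled restarts from lines copied out of this post; the `summarize` helper and the regex are hypothetical, and the signal numbering assumes Linux.

```python
import re
import signal

# Lines copied from the /var/log/messages excerpt in this post.
SYSLOG = """\
systemd: tiflash-9000.service: main process exited, code=killed, status=6/ABRT
systemd: Unit tiflash-9000.service entered failed state.
systemd: tiflash-9000.service failed.
systemd: tiflash-9000.service holdoff time over, scheduling restart.
systemd: Stopped tiflash service.
systemd: Started tiflash service.
"""

# Hypothetical pattern for systemd's "main process exited" line.
EXIT_RE = re.compile(r"(\S+\.service): main process exited, code=(\w+), status=(\d+)/(\w+)")


def summarize(log: str):
    """Hypothetical helper: return ([(unit, signal)], restart_count) for a syslog excerpt."""
    kills = []
    for m in EXIT_RE.finditer(log):
        unit, code, status, _status_name = m.groups()
        if code == "killed":
            # For code=killed, systemd's status field is the signal number (Linux numbering).
            kills.append((unit, signal.Signals(int(status))))
    restarts = log.count("scheduling restart")
    return kills, restarts


if __name__ == "__main__":
    kills, restarts = summarize(SYSLOG)
    for unit, sig in kills:
        print(f"{unit} killed by {sig.name}")
    print(f"restarts scheduled: {restarts}")
```

Run against a longer stretch of /var/log/messages, a restart count that keeps growing is the "continuously restarting" loop described in this post.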

Subsequent actions:

The TiFlash node was taken offline. It kept restarting throughout the offline process, so it was eventually taken offline forcibly. The node has since been brought back online.