Note:

This topic has been translated from a Chinese forum by GPT and might contain errors. Original topic: tiflash不同步数据,执行完【创建副本】执行链接不到tiflash (TiFlash does not sync data; after creating a replica, the cluster cannot connect to TiFlash)

[TiDB Usage Environment] Production Environment

[TiDB Version] v6.5.1

[Reproduction Path]

Execute `ALTER TABLE sxt_order SET TIFLASH REPLICA 1;`

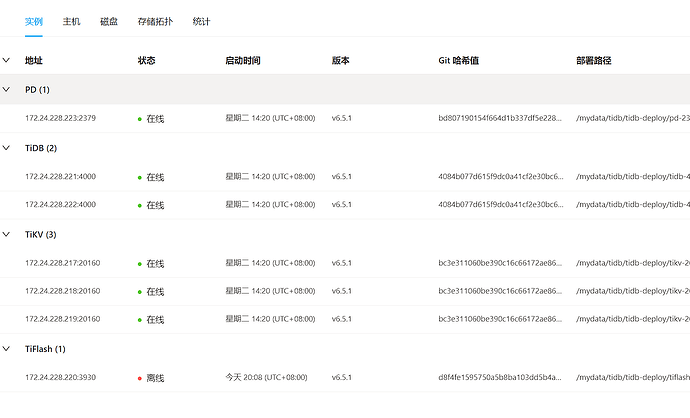

A few minutes later, the cluster can no longer connect to TiFlash, and errors appear in the log.

The log is as follows:

[2023/03/30 17:24:39.182 +08:00] [ERROR] [Exception.cpp:89] ["Code: 49, e.displayText() = DB::Exception: invalid flag 83 in write cf: physical_table_id=10479: (while preHandleSnapshot region_id=6513, index=45900, term=7), e.what() = DB::Exception, Stack trace:\n\n\n 0x17225ce\tDB::Exception::Exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int) [tiflash+24257998]\n \tdbms/src/Common/Exception.h:46\n 0x6b08404\tDB::RegionCFDataBase<DB::RegionWriteCFDataTrait>::insert(DB::StringObject<true>&&, DB::StringObject<false>&&) [tiflash+112231428]\n \tdbms/src/Storages/Transaction/RegionCFDataBase.cpp:46\n 0x6a949db\tDB::DM::SSTFilesToBlockInputStream::read() [tiflash+111757787]\n \tdbms/src/Storages/DeltaMerge/SSTFilesToBlockInputStream.cpp:137\n 0x696ca15\tDB::DM::readNextBlock(std::__1::shared_ptr<DB::IBlockInputStream> const&) [tiflash+110545429]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeHelpers.h:253\n 0x6a97412\tDB::DM::PKSquashingBlockInputStream<true>::read() [tiflash+111768594]\n \tdbms/src/Storages/DeltaMerge/PKSquashingBlockInputStream.h:68\n 0x696ca15\tDB::DM::readNextBlock(std::__1::shared_ptr<DB::IBlockInputStream> const&) [tiflash+110545429]\n \tdbms/src/Storages/DeltaMerge/DeltaMergeHelpers.h:253\n 0x16d57e5\tDB::DM::DMVersionFilterBlockInputStream<1>::initNextBlock() [tiflash+23943141]\n \tdbms/src/Storages/DeltaMerge/DMVersionFilterBlockInputStream.h:137\n 0x16d360c\tDB::DM::DMVersionFilterBlockInputStream<1>::read(DB::PODArray<unsigned char, 4096ul, Allocator<false>, 15ul, 16ul>*&, bool) [tiflash+23934476]\n \tdbms/src/Storages/DeltaMerge/DMVersionFilterBlockInputStream.cpp:51\n 0x6a96868\tDB::DM::BoundedSSTFilesToBlockInputStream::read() [tiflash+111765608]\n \tdbms/src/Storages/DeltaMerge/SSTFilesToBlockInputStream.cpp:307\n 0x16d9044\tDB::DM::SSTFilesToDTFilesOutputStream<std::__1::shared_ptr<DB::DM::BoundedSSTFilesToBlockInputStream> >::write() [tiflash+23957572]\n 
\tdbms/src/Storages/DeltaMerge/SSTFilesToDTFilesOutputStream.cpp:200\n 0x6a8d38f\tDB::KVStore::preHandleSSTsToDTFiles(std::__1::shared_ptr<DB::Region>, DB::SSTViewVec, unsigned long, unsigned long, DB::DM::FileConvertJobType, DB::TMTContext&) [tiflash+111727503]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:360\n 0x6a8ca64\tDB::KVStore::preHandleSnapshotToFiles(std::__1::shared_ptr<DB::Region>, DB::SSTViewVec, unsigned long, unsigned long, DB::TMTContext&) [tiflash+111725156]\n \tdbms/src/Storages/Transaction/ApplySnapshot.cpp:275\n 0x6ae7d66\tPreHandleSnapshot [tiflash+112098662]\n \tdbms/src/Storages/Transaction/ProxyFFI.cpp:388\n 0x7f693aa9a228\tengine_store_ffi::_$LT$impl$u20$engine_store_ffi..interfaces..root..DB..EngineStoreServerHelper$GT$::pre_handle_snapshot::hec57f9b0ef29a0bb [libtiflash_proxy.so+17646120]\n 0x7f693aa91d09\tengine_store_ffi::observer::pre_handle_snapshot_impl::h0b40090f59175b24 [libtiflash_proxy.so+17612041]\n 0x7f693aa84b86\tyatp::task::future::RawTask$LT$F$GT$::poll::hd3296fb5cae316b9 [libtiflash_proxy.so+17558406]\n 0x7f693c910dc3\t_$LT$yatp..task..future..Runner$u20$as$u20$yatp..pool..runner..Runner$GT$::handle::h0056e31c4da70e35 [libtiflash_proxy.so+49589699]\n 0x7f693c9036ac\tstd::sys_common::backtrace::__rust_begin_short_backtrace::h747afb2668c16dcb [libtiflash_proxy.so+49534636]\n 0x7f693c9041cc\tcore::ops::function::FnOnce::call_once$u7b$$u7b$vtable.shim$u7d$$u7d$::h83ec6721ad8db87f [libtiflash_proxy.so+49537484]\n 0x7f693c071555\tstd::sys::unix::thread::Thread::new::thread_start::hd2791a9cabec1fda [libtiflash_proxy.so+40547669]\n \t/rustc/96ddd32c4bfb1d78f0cd03eb068b1710a8cebeef/library/std/src/sys/unix/thread.rs:108\n 0x7f69397b1ea5\tstart_thread [libpthread.so.0+32421]\n 0x7f6938bb6b0d\tclone [libc.so.6+1043213]"] [source="DB::RawCppPtr DB::PreHandleSnapshot(DB::EngineStoreServerWrap *, DB::BaseBuffView, uint64_t, DB::SSTViewVec, uint64_t, uint64_t)"] [thread_id=30]
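For triage, it can help to pull the identifiers out of the exception message instead of reading the whole stack trace. The snippet below is a minimal sketch, assuming the message always has the same shape as the line above; the function name `parse_invalid_flag` and the regular expression are illustrative only and are not part of any TiDB or TiFlash tooling.

```python
import re

# Matches the fields visible in the "invalid flag ... in write cf" message above.
# Assumption: other occurrences of this error use the same wording and field order.
PATTERN = re.compile(
    r"invalid flag (?P<flag>\d+) in write cf: "
    r"physical_table_id=(?P<physical_table_id>\d+): "
    r"\(while preHandleSnapshot region_id=(?P<region_id>\d+), "
    r"index=(?P<index>\d+), term=(?P<term>\d+)\)"
)


def parse_invalid_flag(line):
    """Return the numeric fields of the error as a dict, or None if the line does not match."""
    match = PATTERN.search(line)
    if match is None:
        return None
    return {name: int(value) for name, value in match.groupdict().items()}


# Excerpt of the log line from this post (stack trace omitted).
sample = (
    "DB::Exception: invalid flag 83 in write cf: physical_table_id=10479: "
    "(while preHandleSnapshot region_id=6513, index=45900, term=7), "
    "e.what() = DB::Exception"
)

info = parse_invalid_flag(sample)
print(info)
```

Running this over the TiFlash error log would show whether every failure points at the same `physical_table_id` and `region_id`, which is useful detail to attach to a bug report.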

TiFlash error log:

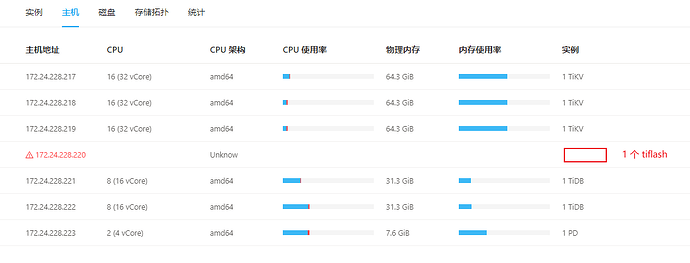

Cluster configuration:

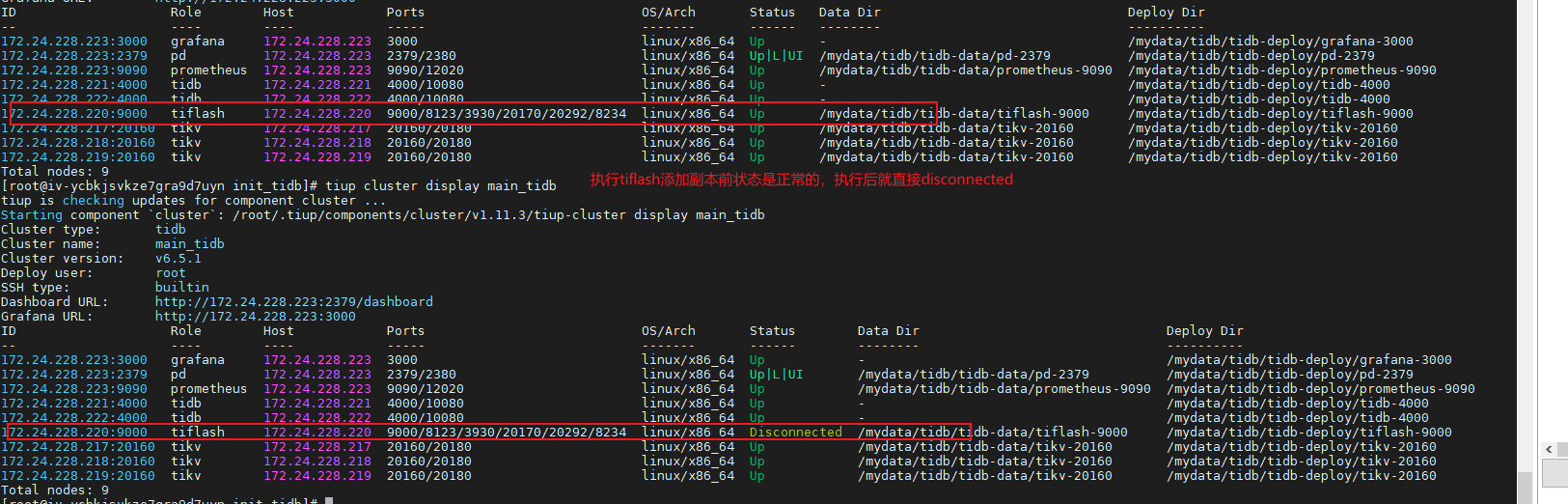

TiFlash was in a normal state before the statement was executed, but after executing `ALTER TABLE sxt_order SET TIFLASH REPLICA 1`, it became disconnected.

I have already tried scaling TiFlash in and out, but that did not solve the problem.

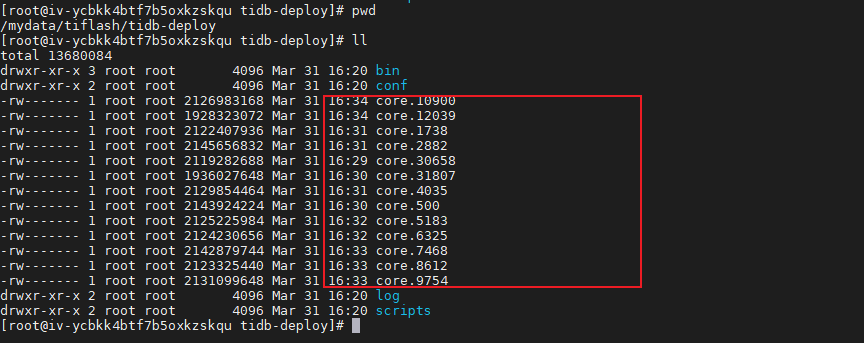

After the error, core files of about 1 GB each keep appearing in the TiFlash deployment directory. My intuition is that something is overflowing.

I verified this bug. The result: once the downstream TiDB cluster has received data synchronized from the upstream through CDC, the downstream TiFlash always crashes. No matter how many times the downstream TiFlash is scaled in and out, it cannot start normally again. The issue reproduces consistently in v6.5.0, v6.5.1, and v7.0.0.