Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: 混布的场景下 tiflash 启动失败, 处于offline 状态无法启动 (In a mixed-deployment scenario, TiFlash fails to start and stays in the Offline state)

[TiDB Usage Environment] Poc

[TiDB Version] 6.5.3

[Reproduction Path] In the morning, some tables with TiFlash replicas could not be queried; the queries failed with error 9012 (TiFlash server timeout).

At noon, we performed a scale-in operation on the three TiFlash nodes.

We then redeployed the three TiFlash nodes on a new NVMe disk with new ports.

However, we found that the TiFlash servers remained in an offline state.

The service kept restarting.

We have several partitioned tables, and the number of partitions is relatively large.

[Encountered Issue: Symptoms and Impact] TiFlash cannot start.

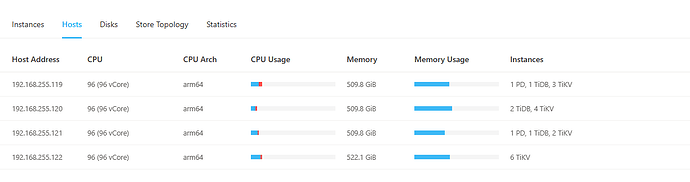

[Resource Configuration] Four servers, all with Kunpeng 96 cores, 512G memory, NVMe SSD.

[Attachments: Screenshots/Logs/Monitoring]

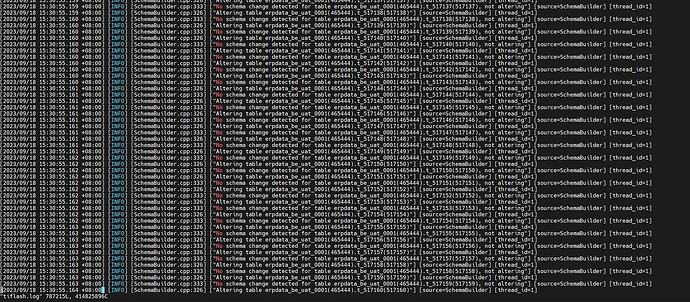

Partial error information from tiflash.log:

Configuration information:

default_profile = "default"

display_name = "TiFlash"

http_port = 8124

listen_host = "0.0.0.0"

path = "/nvme02/tiflash/data/tiflash-9001"

tcp_port = 9002

tmp_path = "/nvme02/tiflash/data/tiflash-9001/tmp"

[flash]

service_addr = "192.168.255.119:3931"

tidb_status_addr = "192.168.255.119:10080,192.168.255.121:10080,192.168.255.120:10080,192.168.255.120:10081"

[flash.flash_cluster]

cluster_manager_path = "/deploy/tidb/tiflash-9002/bin/tiflash/flash_cluster_manager"

log = "/deploy/tidb/tiflash-9002/log/tiflash_cluster_manager.log"

master_ttl = 600

refresh_interval = 200

update_rule_interval = 50

[flash.proxy]

config = "/deploy/tidb/tiflash-9002/conf/tiflash-learner.toml"

[logger]

count = 20

errorlog = "/deploy/tidb/tiflash-9002/log/tiflash_error.log"

level = "debug"

log = "/deploy/tidb/tiflash-9002/log/tiflash.log"

size = "1000M"

[profiles]

[profiles.default]

max_memory_usage = 0

[raft]

pd_addr = "192.168.255.119:2379,192.168.255.121:2379"

[status]

metrics_port = 8235

Cluster Information:

tiflash.tar.gz (25.4 MB)

The service automatically exits after running for about seven seconds.

When scaling in, did you remove the TiFlash replicas of the tables before scaling in TiFlash? The system variable tidb_allow_fallback_to_tikv controls whether a query automatically falls back to TiKV when it fails on TiFlash. It is empty by default, which means fallback is disabled. You can temporarily set it to "tiflash" to keep the application from reporting errors.

When scaling in, the offline status is normal while waiting for the region migration to complete. You can use pd-ctl store XXX or information_schema.tikv_store_status to check if the region_count is decreasing. For scaling down, you can refer to:
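The Region-count check described above can be scripted. The following is a minimal Python sketch that reads the JSON printed by pd-ctl for a single store and returns its state and remaining Region count. The field names (`store.state_name`, `status.region_count`) are assumed from typical pd-ctl output and should be verified against your PD version; the sample JSON and the store ID in it are made up for illustration.

```python
import json
from typing import Tuple

def store_progress(pd_ctl_json: str) -> Tuple[str, int]:
    """Return (state_name, region_count) parsed from `pd-ctl store <id>` JSON output."""
    info = json.loads(pd_ctl_json)
    state = info.get("store", {}).get("state_name", "Unknown")
    # Fall back to 0 if the field is missing from the output.
    regions = info.get("status", {}).get("region_count", 0)
    return state, regions

def scale_in_finished(pd_ctl_json: str) -> bool:
    """A store that has been fully scaled in is reported as Tombstone."""
    state, _ = store_progress(pd_ctl_json)
    return state == "Tombstone"

# Hypothetical sample output, not taken from the cluster in this thread.
SAMPLE = '{"store": {"id": 88, "state_name": "Offline"}, "status": {"region_count": 1532}}'

if __name__ == "__main__":
    print(store_progress(SAMPLE), scale_in_finished(SAMPLE))
```

Running the check a few minutes apart and comparing the Region counts shows whether the migration is still making progress.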

Are there any error logs in the TiFlash logs before the restart? The posted warn and info level logs should not have much impact.

In version 6.5, you can remove the TiFlash replicas for the entire database. First, remove all TiFlash replicas by executing ALTER DATABASE aaa SET TIFLASH REPLICA 0, then scale down TiFlash and scale it up again.
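If several databases have TiFlash replicas, the statements can be generated rather than typed by hand. This is only a sketch: the helper name `drop_tiflash_replica_sql` is hypothetical, and it builds the SQL text without connecting to TiDB, so the output still has to be run through a MySQL client.

```python
def drop_tiflash_replica_sql(databases):
    """Build one ALTER DATABASE statement per schema that sets TiFlash replicas to 0."""
    statements = []
    for db in databases:
        # Quote the identifier with backticks; double any embedded backtick.
        quoted = "`" + db.replace("`", "``") + "`"
        statements.append(f"ALTER DATABASE {quoted} SET TIFLASH REPLICA 0;")
    return statements

if __name__ == "__main__":
    # "aaa" is the placeholder database name used in the reply above.
    for stmt in drop_tiflash_replica_sql(["aaa"]):
        print(stmt)
```

After running the statements, INFORMATION_SCHEMA.TIFLASH_REPLICA should no longer list any table before TiFlash is scaled in.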

The TiFlash replicas in TiDB need to be removed and then rebuilt.

That is, remove them and rebuild…

The scale-in succeeded, but the scaled-out TiFlash nodes do not work. They have not been able to start.

The TiFlash process keeps frantically refreshing table information from TiKV. After refreshing up to a certain point, it exits and becomes unresponsive.

SELECT * FROM INFORMATION_SCHEMA.TIFLASH_REPLICA a WHERE a.TABLE_NAME=''; -- What does this table show in 2022?

All the replicas have been removed. Now none of the new TiFlash instances can start, even with 0 replicas.

I have encountered the same problem. It seems to be related to the version of the TiDB cluster. After upgrading to version 5.0, the problem was resolved.

Your TiFlash hasn’t set up replicas, but there’s no error when deploying TiFlash, and it can’t start… Theoretically, if you set TiFlash replicas to 0, what logs is it writing… Shouldn’t the service start first? Did you upgrade your cluster from a lower version?

No, I feel like I’ve encountered a bug. The metadata fails once it reaches 282MB. I’ve tried various methods, but it fails at 282MB every time. I hope someone can provide a solution.

[root@clickhouse1 tiflash-9003]# du -ahd 1

4.0K ./format_schemas

36G ./flash

4.0K ./status

36K ./page

4.0K ./flags

4.0K ./user_files

282M ./metadata

4.0K ./tmp

8.0K ./data

36G .

[root@clickhouse1 tiflash-9003]# systemctl status tiflash-9003

● tiflash-9003.service - tiflash service

Loaded: loaded (/etc/systemd/system/tiflash-9003.service; enabled; vendor preset: disabled)

Active: activating (auto-restart) (Result: exit-code) since Mon 2023-09-18 18:03:19 CST; 9s ago

Process: 888878 ExecStart=/bin/bash -c /deploy/tidb/tiflash-9003/scripts/run_tiflash.sh (code=exited, status=1/FAILURE)

Main PID: 888878 (code=exited, status=1/FAILURE)

[root@clickhouse1 tiflash-9003]#

Check the logs on the dashboard. Are there any error logs?

Here is a similar issue you can check out: TiFlash节点不断重启 (TiFlash node keeps restarting) - TiDB Q&A community

Also refer to this issue!

Please run this command on the machine where TiFlash is deployed to check if your CPU supports AVX2 instructions:

cat /proc/cpuinfo | grep avx2

Paste the output text here.

After reading the log you posted, I only see an Address already in use error. Is the port being occupied?

❯ grep -Ev "INFO|DEBUG|ection for new style" tiflash.log

[2023/09/18 14:51:07.222 +08:00] [ERROR] [<unknown>] ["Net Exception: Address already in use: 0.0.0.0:8124"] [source=Application] [thread_id=1]
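For a large log such as the attached one, the same filtering can be done in Python to pull out just the conflicting ports. This sketch assumes every log line follows the `[timestamp] [LEVEL] ...` layout of the line quoted above; the regular expressions are written for that one message and are not a general TiFlash log parser.

```python
import re

# Matches the "[timestamp] [LEVEL] rest" layout of the quoted log line.
LOG_LINE = re.compile(r'^\[(?P<ts>[^\]]+)\] \[(?P<level>[A-Z]+)\] (?P<rest>.*)$')
# Matches the bind failure message and captures the host and port.
ADDR_IN_USE = re.compile(r'Address already in use: (?P<host>[\d.]+):(?P<port>\d+)')

def ports_in_use(lines):
    """Return the sorted, de-duplicated ports reported as already in use by ERROR lines."""
    ports = set()
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if not m or m.group("level") != "ERROR":
            continue
        hit = ADDR_IN_USE.search(m.group("rest"))
        if hit:
            ports.add(int(hit.group("port")))
    return sorted(ports)

# The ERROR line quoted in this thread.
SAMPLE_LINE = ('[2023/09/18 14:51:07.222 +08:00] [ERROR] [<unknown>] '
               '["Net Exception: Address already in use: 0.0.0.0:8124"] '
               '[source=Application] [thread_id=1]')

if __name__ == "__main__":
    print(ports_in_use([SAMPLE_LINE]))
```

To scan a whole file, pass the open file object, for example `ports_in_use(open("tiflash.log"))`.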

In your mixed deployment scenario, which component is TiFlash mixed with? Check if there is a port conflict. I found that TiFlash has quite a few ports…

tiflash_servers:
  - host: 10.0.1.11
    # ssh_port: 22
    # tcp_port: 9000
    # flash_service_port: 3930
    # flash_proxy_port: 20170
    # flash_proxy_status_port: 20292
    # metrics_port: 8234
    # deploy_dir: /tidb-deploy/tiflash-9000
    ## The `data_dir` will be overwritten if you define `storage.main.dir` configurations in the `config` section.
    # data_dir: /tidb-data/tiflash-9000
    # numa_node: "0,1"

Did you set the http_port of several nodes to 8124?
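One way to confirm a conflict before starting TiFlash is to try binding each configured port on the host. The sketch below uses only the Python standard library. The port numbers are copied from the configuration posted earlier in this thread, and the helper names `port_is_free` and `busy_ports` are hypothetical. Run it while the TiFlash instance is stopped, otherwise it will report TiFlash's own ports as busy.

```python
import socket

def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError:
            # Typically "Address already in use" (or a permission error).
            return False
    return True

def busy_ports(ports: dict, host: str = "0.0.0.0") -> list:
    """Return the names of the configured ports that cannot be bound."""
    return [name for name, port in ports.items() if not port_is_free(port, host)]

# Ports taken from the posted tiflash.toml.
TIFLASH_PORTS = {
    "http_port": 8124,
    "tcp_port": 9002,
    "flash.service_addr": 3931,
    "status.metrics_port": 8235,
}

if __name__ == "__main__":
    print("ports already in use:", busy_ports(TIFLASH_PORTS))
```

When several TiFlash instances share one machine, each instance needs its own value for every one of these ports, so the check is worth repeating per instance.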