Note:

This topic has been translated from a Chinese forum by GPT and might contain errors.

Original topic: tikv-ctl

[TiDB Usage Environment] Production Environment

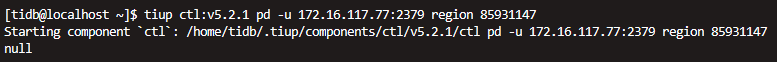

[TiDB Version] V5.2.1

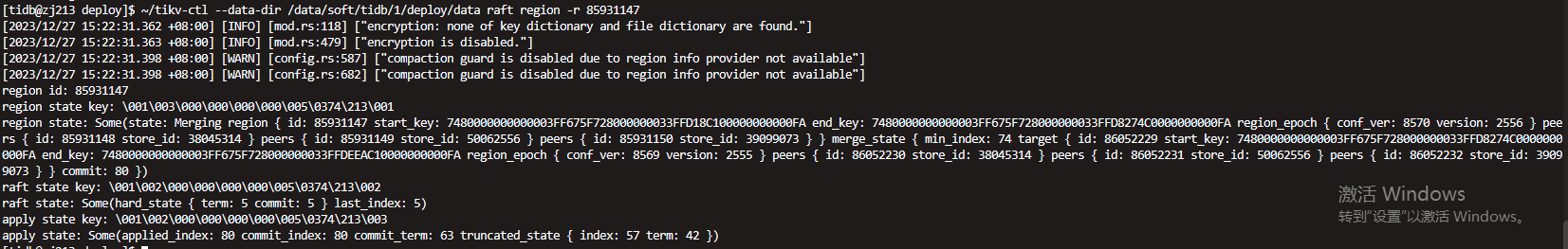

[Reproduction Path] The disk usage of one TiKV node exceeded 90%. After two scale-out operations (adding two new nodes), one of the newly added nodes was scaled in before the peers were fully balanced.

[Encountered Problem: Phenomenon and Impact]

The cluster is currently reading and writing normally, but the TiKV node that previously had over 90% disk capacity cannot be brought up.

Judging from the screenshots, this node needs to run a merge to generate a new peer, but it cannot start.

[Resource Configuration] Go to TiDB Dashboard - Cluster Info - Hosts and take a screenshot of this page

[Attachments: Screenshots/Logs/Monitoring]

An alert should be triggered at 80%; why wait until 90%? By then, compaction might not even be possible.
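To make the threshold idea concrete, here is a minimal sketch of a disk check. The 80% and 90% levels come from this thread; the function names `classify_usage` and `check_path` and the placeholder path are hypothetical. In practice this would normally be an alert rule in the monitoring stack rather than a script.

```python
import shutil

WARN_RATIO = 0.80      # alert level suggested in this thread
CRITICAL_RATIO = 0.90  # level at which the node in this thread got into trouble


def classify_usage(used: int, total: int) -> str:
    """Return 'ok', 'warn', or 'critical' for a used/total byte count."""
    if total <= 0:
        raise ValueError("total must be positive")
    ratio = used / total
    if ratio >= CRITICAL_RATIO:
        return "critical"
    if ratio >= WARN_RATIO:
        return "warn"
    return "ok"


def check_path(path: str) -> str:
    """Classify the filesystem that holds `path` (for example a TiKV data directory)."""
    usage = shutil.disk_usage(path)
    return classify_usage(usage.used, usage.total)


if __name__ == "__main__":
    # "/" is only a placeholder; point this at the TiKV data directory.
    print(check_path("/"))
```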

Observe the monitoring to see if the disk usage on this high-usage node is gradually recovering, and if the new node is receiving data.
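If the dashboards are not handy, the same question can be answered from PD's per-store information. The sketch below only summarizes an already-parsed JSON document. The field names (`stores`, `store.address`, `store.state_name`, `status.region_count`, `status.leader_count`) are my assumption about the shape of PD's store listing, so please verify them against the output of your PD version. The sample data and addresses are made up.

```python
from typing import Any


def summarize_stores(doc: dict[str, Any]) -> list[tuple[str, str, int, int]]:
    """Return (address, state, region_count, leader_count) per store, sorted by address."""
    rows = []
    for item in doc.get("stores", []):
        store = item.get("store", {})
        status = item.get("status", {})
        rows.append((
            store.get("address", "?"),
            store.get("state_name", "?"),
            int(status.get("region_count", 0)),
            int(status.get("leader_count", 0)),
        ))
    return sorted(rows)


# Hypothetical sample, not taken from the cluster in this thread.
SAMPLE = {
    "stores": [
        {
            "store": {"id": 1, "address": "tikv-old:20160", "state_name": "Down"},
            "status": {"region_count": 1200, "leader_count": 0},
        },
        {
            "store": {"id": 4, "address": "tikv-new:20160", "state_name": "Up"},
            "status": {"region_count": 300, "leader_count": 110},
        },
    ]
}

if __name__ == "__main__":
    for address, state, regions, leaders in summarize_stores(SAMPLE):
        print(f"{address:<16} {state:<10} regions={regions:<6} leaders={leaders}")
```

Taking two snapshots a few minutes apart and comparing the region counts would show whether the new node is actually receiving data.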

This node is down, but the cluster as a whole is handling reads and writes normally. I’m not sure whether we can forcibly take this node offline.

Since reads and writes are normal, don’t resort to forced methods. Try clearing the logs first to free up some space and see whether the node can recover.
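Before clearing anything, it helps to see which files are actually taking the space. This is a minimal sketch that only reports sizes and deletes nothing; the function name `largest_files` and the placeholder directory are hypothetical.

```python
from pathlib import Path


def largest_files(root: str, limit: int = 10) -> list[tuple[int, str]]:
    """Return up to `limit` (size_in_bytes, path) pairs under `root`, largest first."""
    sizes = []
    for path in Path(root).rglob("*"):
        # Skip directories and symlinks so each regular file is counted once.
        if path.is_file() and not path.is_symlink():
            sizes.append((path.stat().st_size, str(path)))
    sizes.sort(reverse=True)
    return sizes[:limit]


if __name__ == "__main__":
    # "." is a placeholder; use the log directory of the affected TiKV instance.
    for size, path in largest_files(".", limit=10):
        print(f"{size / 1024 / 1024:10.1f} MiB  {path}")
```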

After pruning the Tombstone node (172.16.116.213:20182), the TiKV on the same host (172.16.116.213:20181) also went down.

After deleting the Raft and RocksDB logs, disk usage has dropped below 80%, but it looks like the node can no longer start on its own.

In that case, it must be taken offline.

If there are no issues with taking it offline, we can proceed with scaling up again and reusing the resources.

I analyzed the data from the past few days and it seems there are no missing entries. I proceeded with the scale-down directly.

Try taking it offline and then bringing it online again.
